AI Runtime Security

GCP Cloud Account Onboarding Prerequisites


Discovery onboarding prerequisites for GCP

On this page, you'll:

- Enable VPC Flow Logs

- Enable Data Access audit logs for AI models

- Create a Cloud Storage Bucket

- Set up a Log Router to direct log entries

- Create a sink and sink destinations

- Grant the required IAM permissions to the user


Enable the VPC Flow Logs

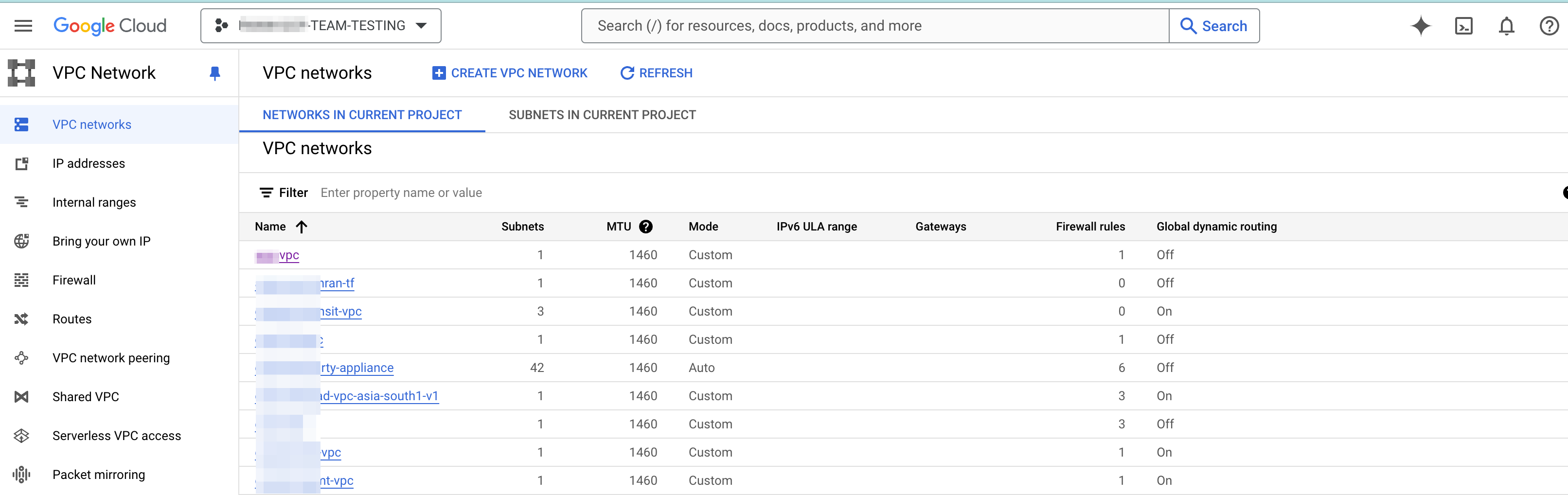

- Go to the Google Cloud Console and select the project you want to onboard for discovery.

- Navigate to VPC Networks.

- Select the VPC with the workloads (VMs/containers) to protect.

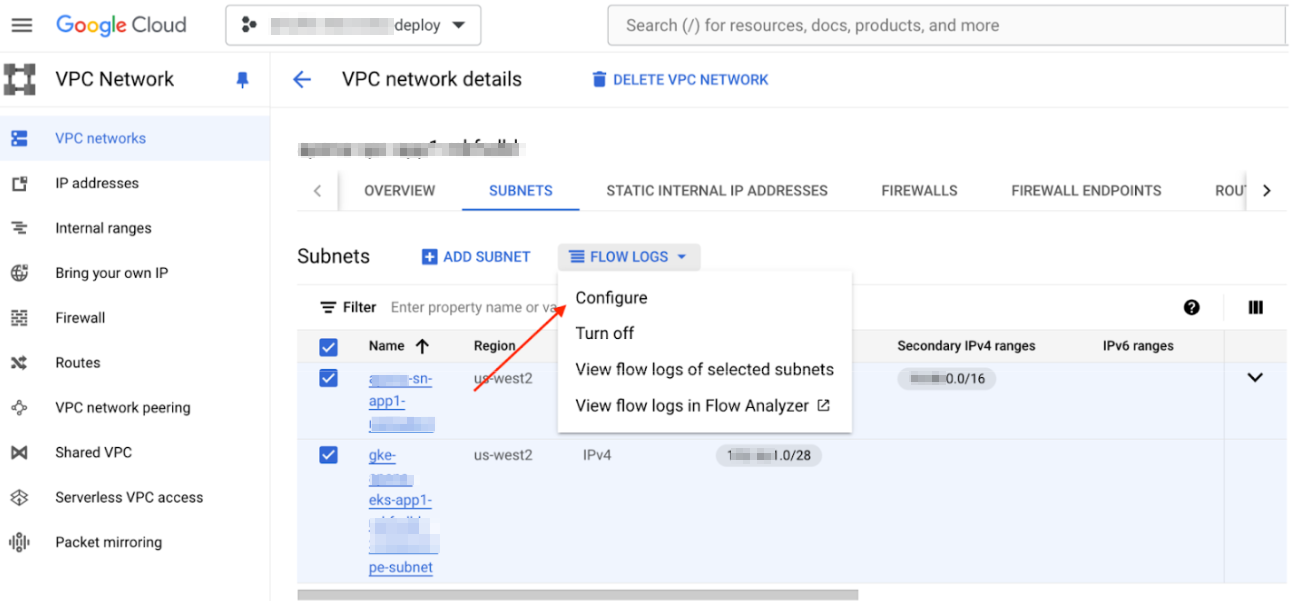

![]() SCM will discover only the running VM workloads and containers in the VPC.Click the SUBNETS tab and select all the subnets where your workloads are present.Click on the FLOW LOGS drop-down.Select Configure.

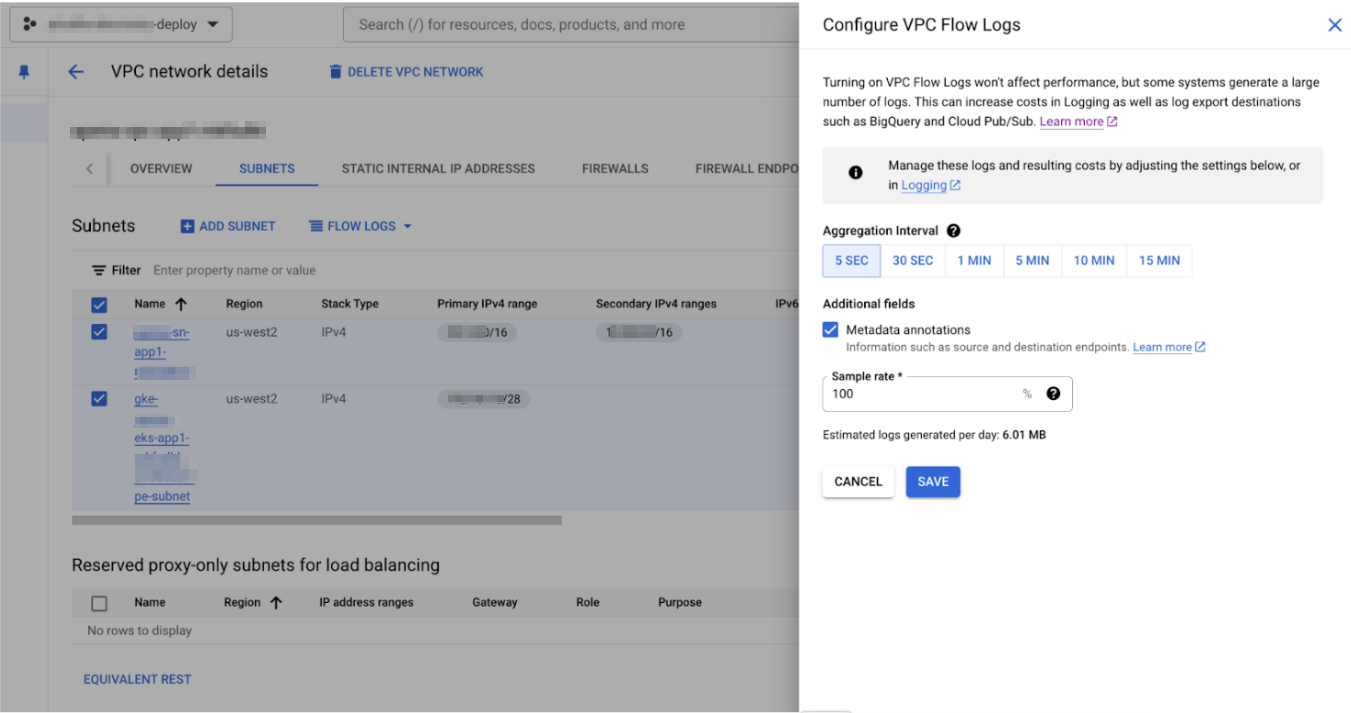

SCM will discover only the running VM workloads and containers in the VPC.Click the SUBNETS tab and select all the subnets where your workloads are present.Click on the FLOW LOGS drop-down.Select Configure.![]() In Configure VPC Flow Logs, set the Aggregation Interval of 5 Sec, enable the Metadata annotations, and use a Sample rate of 100%.

In Configure VPC Flow Logs, set the Aggregation Interval of 5 Sec, enable the Metadata annotations, and use a Sample rate of 100%.![]() SAVE.To view the logs, click FLOW LOGS and select View flow logs of selected subnets.
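If you script this step instead of using the console, the settings above correspond, as far as we know, to the `logConfig` field of a subnetwork in the Compute Engine API. The following Python sketch only builds a request body for `subnetworks.patch`; it does not call the API. The helper name `flow_log_patch_body` is hypothetical, and the caller is assumed to supply the subnet's current `fingerprint` (read with `subnetworks.get`), which the patch call uses for concurrency control.

```python
import json


def flow_log_patch_body(fingerprint: str) -> dict:
    """Build a subnetworks.patch request body that enables VPC Flow Logs.

    Hypothetical helper mirroring the console settings: 5-second
    aggregation, metadata annotations included, 100% sample rate.
    """
    return {
        # Current subnet fingerprint, required by the patch call.
        "fingerprint": fingerprint,
        "logConfig": {
            "enable": True,
            "aggregationInterval": "INTERVAL_5_SEC",  # Aggregation Interval: 5 Sec
            "flowSampling": 1.0,                      # Sample rate: 100%
            "metadata": "INCLUDE_ALL_METADATA",       # Metadata annotations on
        },
    }


if __name__ == "__main__":
    # "<FINGERPRINT>" is a placeholder for the value from subnetworks.get.
    print(json.dumps(flow_log_patch_body("<FINGERPRINT>"), indent=2))
```

Apply the same body to each subnet you selected in the console.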

Enable Data Access Audit Logs

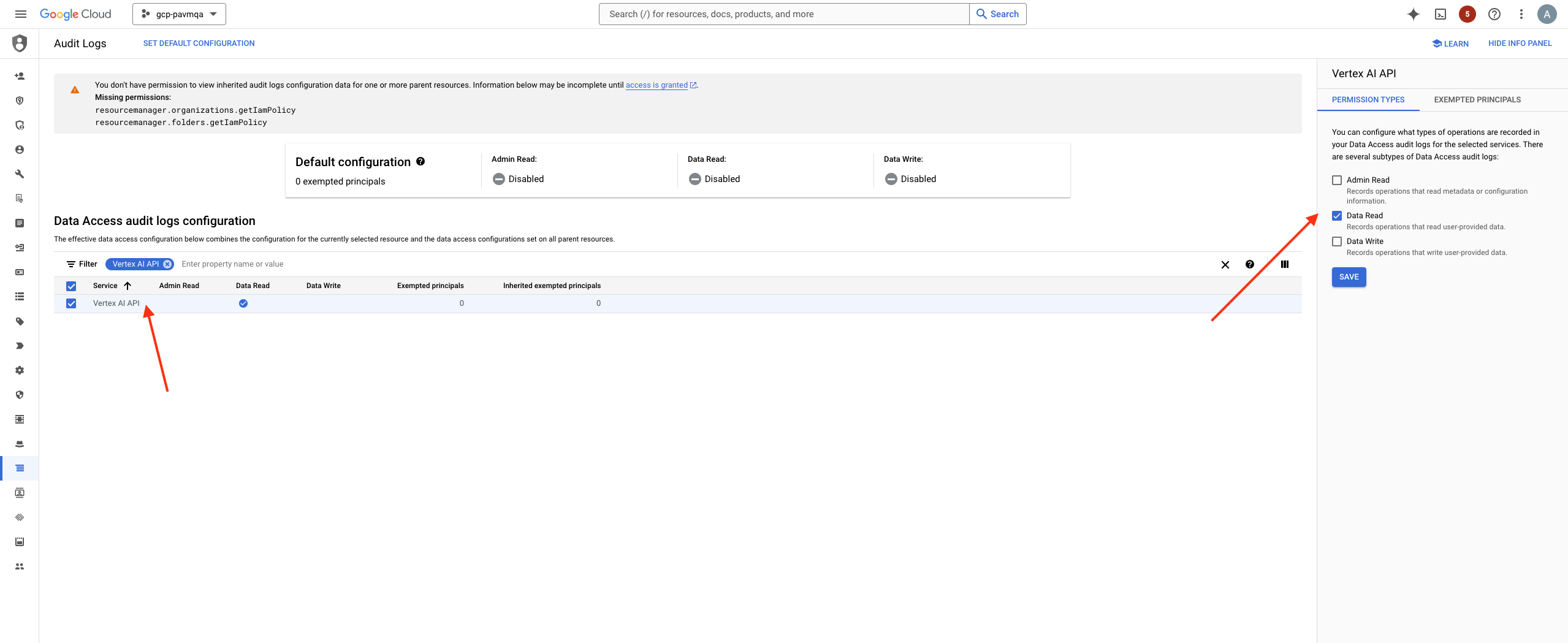

Before you create a Cloud Storage bucket, enable the Data Access audit logs in IAM for the project where the AI models are present. These logs are needed specifically for unprotected AI model traffic.

- Go to the Google Cloud Console and select your project.

- In the search bar at the top, type Audit Logs and select it.

- Search for and click Vertex AI API in the list of available audit logs.

- Under PERMISSION TYPE, enable Data Read.

- Click SAVE.
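The console toggle above updates the project's IAM policy. As a sketch, assuming you read the policy with `projects.getIamPolicy` and write it back yourself, the change amounts to adding a `DATA_READ` entry under `auditConfigs` for the `aiplatform.googleapis.com` service. The helper name `enable_vertex_data_read` is hypothetical. Our understanding is that `projects.setIamPolicy` updates only bindings and etag by default, so include `auditConfigs` in its `updateMask` when writing the policy back.

```python
import copy

VERTEX_AI_SERVICE = "aiplatform.googleapis.com"


def enable_vertex_data_read(policy: dict) -> dict:
    """Return a copy of a project IAM policy with Data Read audit logs
    enabled for the Vertex AI API; the input policy is not modified."""
    updated = copy.deepcopy(policy)
    audit_configs = updated.setdefault("auditConfigs", [])

    # Reuse the existing audit config for Vertex AI, or add a new one.
    config = next(
        (c for c in audit_configs if c.get("service") == VERTEX_AI_SERVICE), None
    )
    if config is None:
        config = {"service": VERTEX_AI_SERVICE, "auditLogConfigs": []}
        audit_configs.append(config)

    # Add DATA_READ only once, so running the helper twice is harmless.
    log_configs = config.setdefault("auditLogConfigs", [])
    if not any(c.get("logType") == "DATA_READ" for c in log_configs):
        log_configs.append({"logType": "DATA_READ"})
    return updated
```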

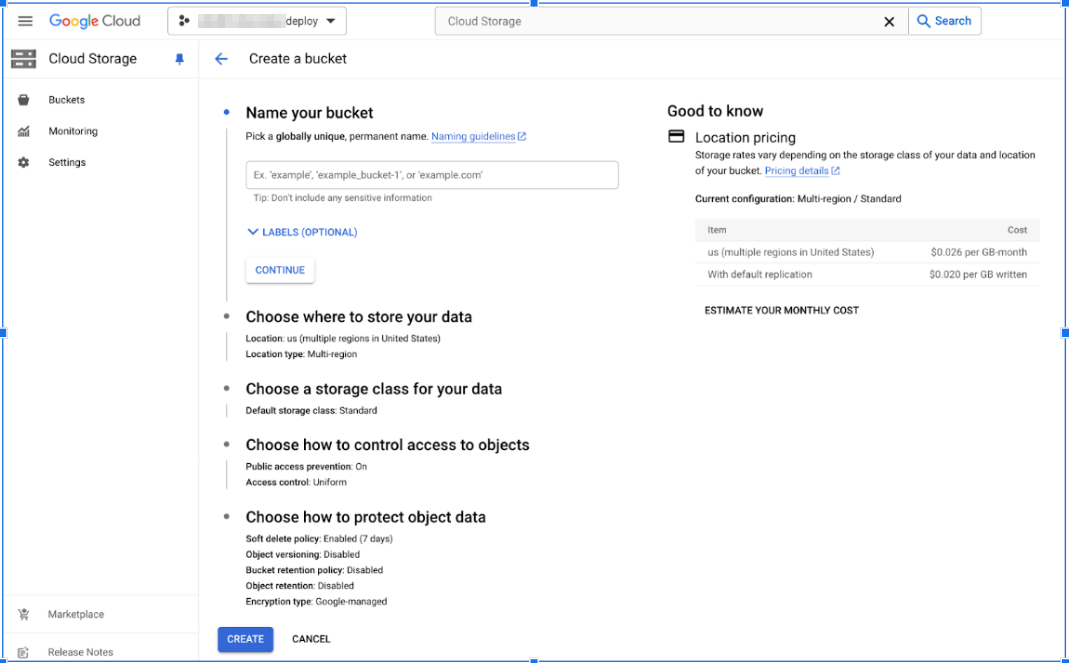

Create a Cloud Storage Bucket

Create a Cloud Storage bucket to securely store the VPC flow logs and audit logs. The bucket acts as a central repository for the data collected from your GCP environment and is used for traffic analysis.

- Go to Cloud Storage and click CREATE.

- Enter a globally unique name for the bucket and click CONTINUE.

- Choose Multi-region for high availability and click CONTINUE. The Multi-region selection incurs higher costs than the other options.

- Choose the Standard option for the storage class and click CONTINUE.

- For access control, select the Uniform configuration and click CONTINUE. Making this bucket publicly accessible is optional.

- Use default settings for data protection.

- Click CREATE.
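The console choices above can be expressed as a Cloud Storage JSON API bucket resource. This Python sketch builds that resource and checks the name against a simplified subset of the bucket naming rules; `US` is assumed as the multi-region location, and the helper `bucket_body` and the example bucket name are hypothetical. It does not create the bucket.

```python
import re

# Simplified subset of the Cloud Storage bucket naming rules: 3-63
# characters of lowercase letters, digits, dots, hyphens, and underscores,
# starting and ending with a letter or digit, and not starting with "goog".
# The full rules cover more cases (for example, longer dotted names).
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9._-]{1,61}[a-z0-9]")


def bucket_body(name: str, location: str = "US") -> dict:
    """Build a bucket resource matching the console choices:
    multi-region location, Standard class, uniform access control."""
    if not _BUCKET_NAME.fullmatch(name) or name.startswith("goog"):
        raise ValueError(f"invalid bucket name: {name!r}")
    return {
        "name": name,
        "location": location,  # "US" is one of the multi-region locations
        "storageClass": "STANDARD",
        "iamConfiguration": {
            # Uniform access control: IAM only, no per-object ACLs.
            "uniformBucketLevelAccess": {"enabled": True},
        },
    }


if __name__ == "__main__":
    print(bucket_body("ai-runtime-discovery-logs"))
```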

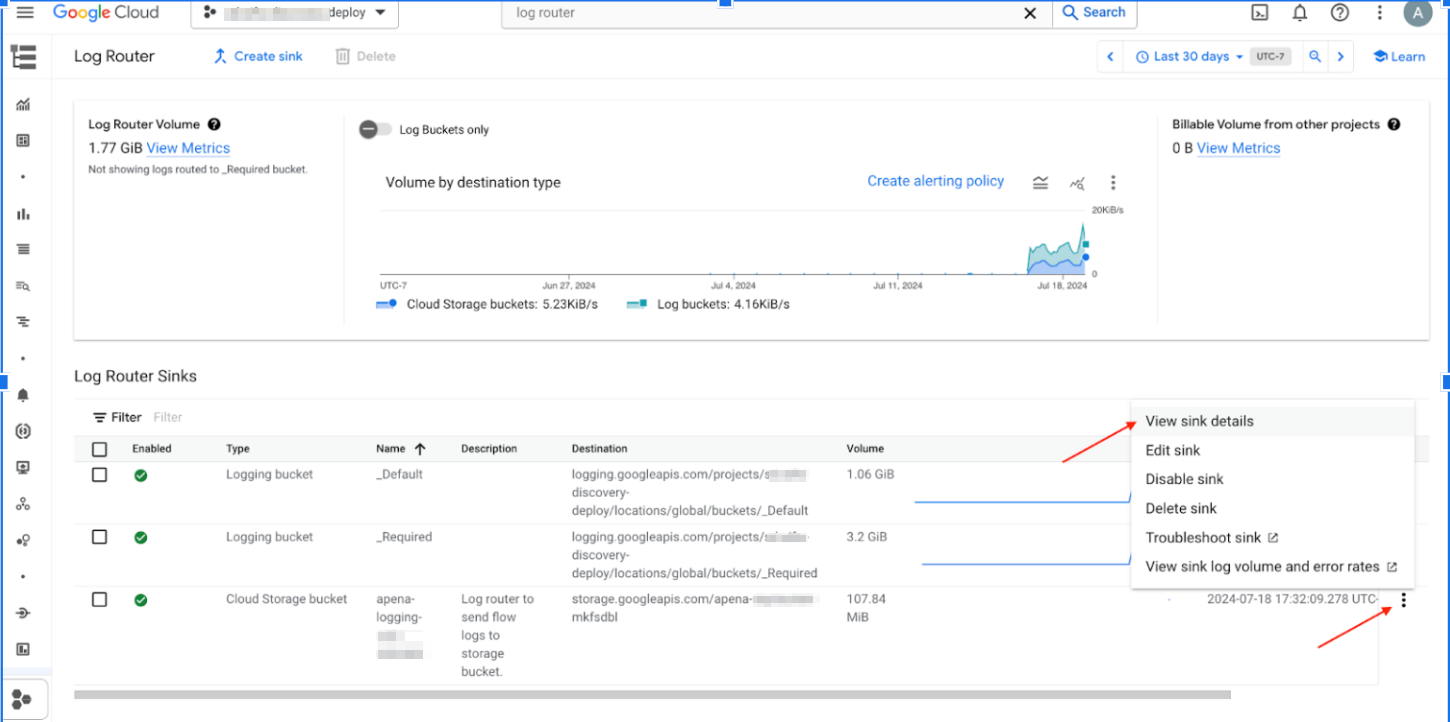

Set Up a Log Router

- In the Google Cloud Console, search for Log Router.

- Select Create sink.

- Enter a Sink name and optionally enter a Sink description. Click Next.

- In Sink destination, select Cloud Storage bucket as the sink service and specify the name of the bucket you created.

- In the next section, provide an inclusion filter that matches all of the following:

- VPC flow logs generated by the workloads

- Data Access audit logs for Vertex AI model API calls.

(logName =~ "logs/cloudaudit.googleapis.com%2Fdata_access" AND protoPayload.methodName:("google.cloud.aiplatform.")) OR ((logName="projects/<GCP_PROJECT_ID>/logs/compute.googleapis.com%2Fvpc_flows") AND (resource.labels.subnetwork_name="<SUBNET_1>" OR resource.labels.subnetwork_name="<SUBNET_2>"))

- <GCP_PROJECT_ID>: Replace it with your GCP project ID.

- <SUBNET_1>, <SUBNET_2>: Replace these with the names of your subnets. For more subnets, add one resource.labels.subnetwork_name condition per subnet, joined with OR.

- Click Preview logs and run the query to verify the filter settings and ensure the logs are correctly routed.

- Click Create sink.

Logs can take up to one hour to appear in the bucket, so cloud asset discovery in SCM may be delayed accordingly.

- (Optional) If the GCP AI models accessed by your workloads are in a different GCP project, forward those logs to your bucket from that other project.

- In the other GCP project, repeat the log router setup using the same bucket and the following filter:

(logName =~ "logs/cloudaudit.googleapis.com%2Fdata_access" AND protoPayload.methodName:("google.cloud.aiplatform."))
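The two filters above share the same audit log clause and differ only in the VPC flow log clause, so they can be generated from one helper. In the hypothetical sketch below, `build_filter` returns the main-project filter when given a project ID and subnets, and the other-project filter otherwise; `sink_body` wraps a filter in a Cloud Logging sink resource whose destination uses the `storage.googleapis.com/<BUCKET>` form. Sink and bucket names are placeholders.

```python
from typing import Optional, Sequence

# Audit log clause shared by both filters (Vertex AI data access logs).
AUDIT_CLAUSE = (
    '(logName =~ "logs/cloudaudit.googleapis.com%2Fdata_access" '
    'AND protoPayload.methodName:("google.cloud.aiplatform."))'
)


def build_filter(project_id: Optional[str] = None,
                 subnets: Sequence[str] = ()) -> str:
    """Build the Log Router inclusion filter.

    With subnets: audit logs OR the VPC flow logs of those subnets.
    Without subnets: audit logs only (the other-project filter).
    """
    if not subnets:
        return AUDIT_CLAUSE
    if not project_id:
        raise ValueError("project_id is required when subnets are given")
    # One subnetwork_name condition per subnet, joined with OR.
    subnet_clause = " OR ".join(
        f'resource.labels.subnetwork_name="{s}"' for s in subnets
    )
    flow_clause = (
        f'((logName="projects/{project_id}/logs/compute.googleapis.com%2Fvpc_flows") '
        f"AND ({subnet_clause}))"
    )
    return f"{AUDIT_CLAUSE} OR {flow_clause}"


def sink_body(sink_name: str, bucket: str, log_filter: str) -> dict:
    """Sink resource that routes matching entries to a Cloud Storage bucket."""
    return {
        "name": sink_name,
        "destination": f"storage.googleapis.com/{bucket}",
        "filter": log_filter,
    }


if __name__ == "__main__":
    print(build_filter("<GCP_PROJECT_ID>", ["<SUBNET_1>", "<SUBNET_2>"]))
    print(build_filter())
```

Generating the filter avoids typos in the OR chain when many subnets host workloads.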

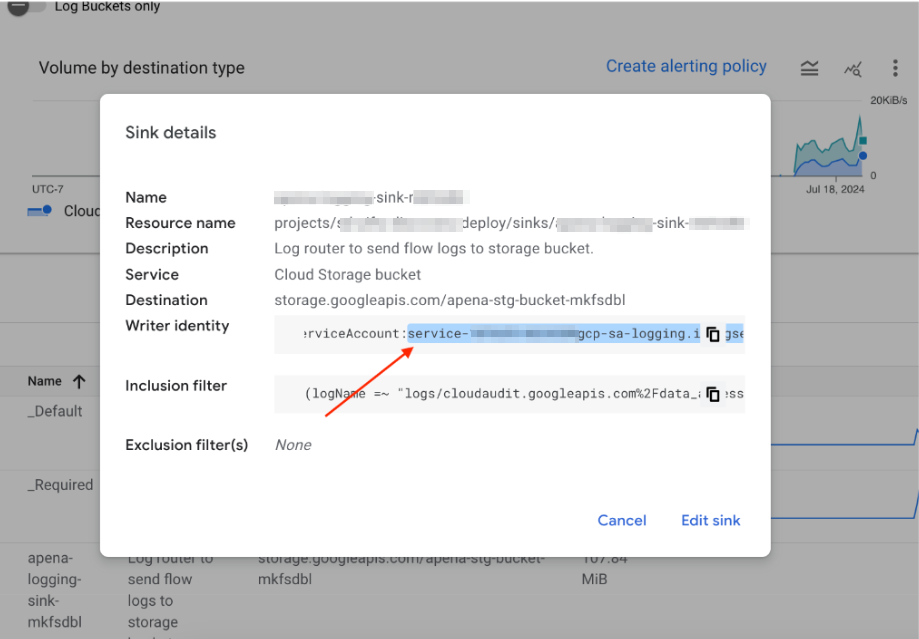

- In Log Router, click the three dots `...` next to your sink and select View sink details.

- Copy the sink writer identity email from the sink details.


- Navigate to the bucket you created and select the PERMISSIONS tab.

- Click GRANT ACCESS.

- In New principals, enter the writer identity email.

- Assign the Storage Object Creator role.


- Click Save.
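Granting the writer identity access is an IAM binding on the bucket. The following sketch, assuming you fetch and set the bucket's IAM policy yourself, adds the identity to the `roles/storage.objectCreator` binding. The helper name `grant_object_creator` and the example email are hypothetical; the helper also adds the `serviceAccount:` prefix in case the copied email lacks it, since IAM members are written as `serviceAccount:<email>`.

```python
import copy

OBJECT_CREATOR = "roles/storage.objectCreator"


def grant_object_creator(policy: dict, writer_identity: str) -> dict:
    """Return a copy of a bucket IAM policy that grants the sink's writer
    identity the Storage Object Creator role."""
    # IAM members for service accounts need the "serviceAccount:" prefix.
    member = writer_identity.strip()
    if not member.startswith("serviceAccount:"):
        member = "serviceAccount:" + member

    updated = copy.deepcopy(policy)
    bindings = updated.setdefault("bindings", [])
    # Reuse an unconditional binding for the role if one exists.
    binding = next(
        (b for b in bindings
         if b.get("role") == OBJECT_CREATOR and "condition" not in b),
        None,
    )
    if binding is None:
        binding = {"role": OBJECT_CREATOR, "members": []}
        bindings.append(binding)
    members = binding.setdefault("members", [])
    if member not in members:
        members.append(member)
    return updated
```

Repeat the grant for the writer identity of every sink that writes to the bucket.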

IAM Permissions

Assign the following permissions to the user deploying Terraform in the cloud environment:

- cloudasset.assets.listResource

- cloudasset.assets.listAccessPolicy

- cloudasset.feeds.get

- cloudasset.feeds.list

- compute.machineTypes.list

- compute.networks.list

- compute.subnetworks.list

- container.clusters.list

- pubsub.subscriptions.consume

- pubsub.topics.attachSubscription

- storage.buckets.list

- aiplatform.models.list
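To check a deploying user's access before running Terraform, you can compare the permissions they hold with the list above. In this sketch, `granted` is assumed to come from a source such as Resource Manager's `projects.testIamPermissions` called with the same list; `custom_role` builds a custom role resource bundling the permissions, with a hypothetical title and description. Verify that each permission is supported in custom roles before creating one.

```python
from typing import Iterable, List

# Permissions required for the user deploying Terraform.
REQUIRED_PERMISSIONS = (
    "cloudasset.assets.listResource",
    "cloudasset.assets.listAccessPolicy",
    "cloudasset.feeds.get",
    "cloudasset.feeds.list",
    "compute.machineTypes.list",
    "compute.networks.list",
    "compute.subnetworks.list",
    "container.clusters.list",
    "pubsub.subscriptions.consume",
    "pubsub.topics.attachSubscription",
    "storage.buckets.list",
    "aiplatform.models.list",
)


def missing_permissions(granted: Iterable[str]) -> List[str]:
    """Return the required permissions absent from `granted`, in list order."""
    granted_set = set(granted)
    return [p for p in REQUIRED_PERMISSIONS if p not in granted_set]


def custom_role(title: str) -> dict:
    """Build a custom IAM role resource that bundles the required permissions."""
    return {
        "title": title,
        "description": "Permissions for AI Runtime Security discovery onboarding",
        "includedPermissions": list(REQUIRED_PERMISSIONS),
        "stage": "GA",
    }
```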
