Prisma AIRS

Understanding Model Security Rules

Learn about Model Security rules that scan AI/ML models for major threats while validating metadata like licenses and file formats to ensure secure model usage.

Model security rules serve as the central mechanism for securing model access. Model security scanning covers two key areas: threats and metadata. To learn more about threat categories and risk mitigation strategies for AI and machine learning systems, refer to Model Threats.

Following are the three major threat categories:

| Threat | Description |
|---|---|
| Deserialization Threats | Issues that arise when you load a model into memory. |
| Backdoor Threats | Issues that arise when a model was specifically designed to support alternative or hidden paths in its behavior. |
| Runtime Threats | Issues that arise when you use a model to perform inference. |

Model security also examines specific metadata fields in models to address security considerations, such as verifying a model's license to ensure it's appropriate for commercial use. Model security rules enable you to validate the following concerns:

| Metadata in Models | Security Rule Validation |
|---|---|
| Open Source License | Ensures that the model you are using is licensed for your use case. |
| Model Format | Ensures that the model you are using is in a format that is supported by your environment. |
| Model Location | Ensures that the model you are using is hosted in a location that is secure and trusted. |
| Verified Organizations in Hugging Face | Ensures that the model you are using is from a trusted source. |
| Model is Blocked | Overrides all other checks to ensure that a model is blocked no matter what. |

Our managed rules integrate all of these checks to provide comprehensive security for

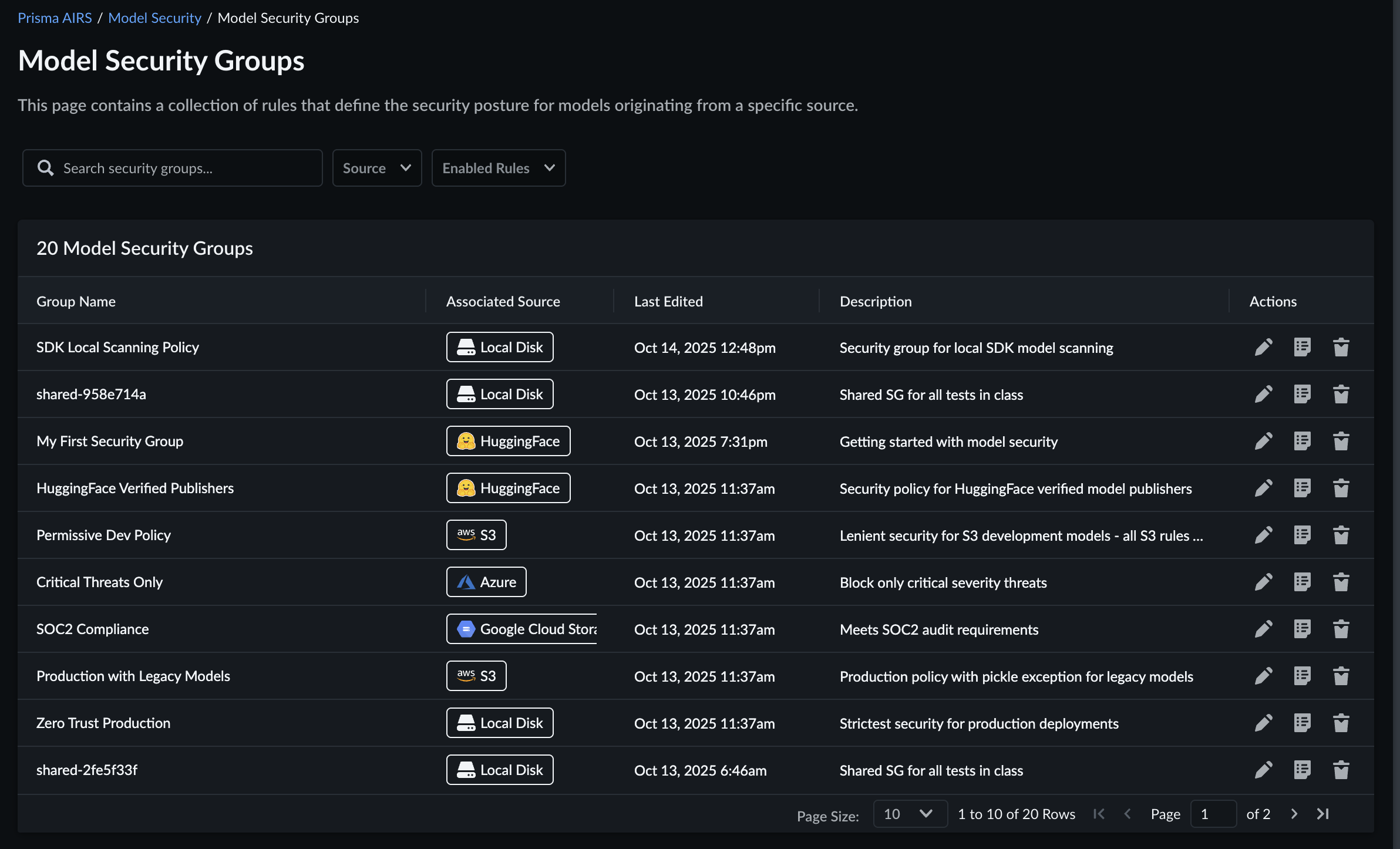

model usage. To get started, you can explore your defaults in your Model Security at AI SecurityAI Model SecurityModel Security Groups.

How Scans, Security Groups, and Rules Work Together

With Model Security, you initiate a model scan and associate it with a security group. Model Security evaluates the model against all enabled rules within that security group to assess whether it satisfies your security requirements. This assessment relies on the results from enabled rules in the security group and the group's configured threshold.

When you scan a model as follows:

```python
# Import the Model Security SDK/Client
import os

from model_security_client.api import ModelSecurityAPIClient

# Load your scanner URL
scanner_url = os.environ["MODEL_SECURITY_TOKEN_ENDPOINT"]

# Define your model's URI
model_uri = "s3://demo-models/unsafe_model.pkl"

# Set your security group's UUID
security_group_uuid = "6e2ccc3a-db57-4901-a944-ce65e064a3f1"

# Create a Model Security client
client = ModelSecurityAPIClient(base_url=scanner_url)

# Scan your model
response = client.scan(model_uri=model_uri, security_group_uuid=security_group_uuid)
```

The response will appear as follows (showing the security rules results):

```json
{
  "http_status_code": 200,
  "scan_status_json": {
    "aggregate_eval_outcome": "FAIL",
    "aggregate_eval_summary": {
      "critical_count": 1,
      "high_count": 0,
      "low_count": 0,
      "medium_count": 1
    },
    "violations": [
      {
        "issue": "Model file 'ykilcher_totally-harmless-model/retr0reg.gguf' is stored in an unapproved format: gguf",
        "threat": "UNAPPROVED_FORMATS",
        "operator": null,
        "module": null,
        "file": "ykilcher_totally-harmless-model/retr0reg.gguf",
        "hash": "f59ad9c65c5a74b0627eb6ca5c066b02f4a76fe6",
        "threat_description": "Model is stored in a format that your Security Group does not allow",
        "policy_name": "Stored In Approved File Format",
        "policy_instance_uuid": "34ef1ddc-0b7a-45b8-a84a-c96b1d8383d0",
        "remediation": {
          "steps": [
            "Store the model in a format approved by your organization"
          ]
        }
      },
      {
        "issue": "The model will execute remote code since it contains operator `__class__` in Jinja template.",
        "threat": "PAIT-GGUF-101",
        "operator": "__class__",
        "module": null,
        "file": "ykilcher_totally-harmless-model/retr0reg.gguf",
        "hash": "f59ad9c65c5a74b0627eb6ca5c066b02f4a76fe6",
        "threat_description": "GGUF Model Template Containing Arbitrary Code Execution Detected",
        "policy_name": "Load Time Code Execution Check",
        "policy_instance_uuid": "09780b9f-c4f7-4e0b-ad21-7ff779472283",
        "remediation": {
          "steps": [
            "Use model formats that disallow arbitrary code execution"
          ]
        }
      }
    ]
  }
}
```

Note the `FAIL` status in the `aggregate_eval_outcome` field. This indicates that the model did not pass the scan because security rule failures surpassed your security group's threshold. The `violations` field provides details about which rules were breached.
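
The outcome check described above can be scripted. The following is a minimal sketch, assuming the scan result is available as a Python dictionary with the same shape as the sample response. The `summarize_scan` helper is hypothetical and is not part of the Model Security SDK; it reads only field names that appear in the sample.

```python
from typing import Any


def summarize_scan(result: dict[str, Any]) -> dict[str, Any]:
    """Summarize a scan result shaped like the sample response.

    Hypothetical helper for illustration; not part of the Model Security SDK.
    """
    status = result.get("scan_status_json", {})
    return {
        "failed": status.get("aggregate_eval_outcome") == "FAIL",
        "severity_counts": status.get("aggregate_eval_summary", {}),
        # One (rule name, threat identifier, file) tuple per violation.
        "violations": [
            (v.get("policy_name"), v.get("threat"), v.get("file"))
            for v in status.get("violations", [])
        ],
    }


# Trimmed copy of the sample response, keeping only the fields used here.
sample = {
    "http_status_code": 200,
    "scan_status_json": {
        "aggregate_eval_outcome": "FAIL",
        "aggregate_eval_summary": {
            "critical_count": 1, "high_count": 0, "low_count": 0, "medium_count": 1,
        },
        "violations": [
            {
                "threat": "UNAPPROVED_FORMATS",
                "file": "ykilcher_totally-harmless-model/retr0reg.gguf",
                "policy_name": "Stored In Approved File Format",
            },
            {
                "threat": "PAIT-GGUF-101",
                "file": "ykilcher_totally-harmless-model/retr0reg.gguf",
                "policy_name": "Load Time Code Execution Check",
            },
        ],
    },
}

summary = summarize_scan(sample)
if summary["failed"]:
    for rule, threat, path in summary["violations"]:
        print(f"{rule}: {threat} ({path})")
```

A pipeline step could use the `failed` flag to stop a deployment and print the breached rules for the reviewer.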

Each model security rule contains the following fields.

| Rule Field | Description |
|---|---|
| Rule Name | Specifies the name of the security rule. |
| Rule Description | Specifies the description of the security rule. |
| Compatible Sources | Specifies the model source types that this rule is compatible with. |
| Status | Specifies the status of the security rule, either Enabled or Disabled. This can be set globally, or at the security group level. |

Connecting Scans to Rules

When scanning a model, model security first identifies its format through introspection. After determining the model type, model security maps it to the taxonomy of model vulnerability threats and coordinates the specific deeper scans required for that model.
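
To illustrate what format introspection can look like, the following is a minimal sketch that guesses a file's serialization format from its leading bytes. It is an assumption-laden illustration, not a description of how Model Security performs introspection. The `detect_format` function is hypothetical and recognizes only a few well-known signatures.

```python
import struct


def detect_format(header: bytes) -> str:
    """Guess a model file's serialization format from its leading bytes.

    Hypothetical helper for illustration; real scanners inspect far more.
    """
    if header.startswith(b"GGUF"):
        return "gguf"
    if header.startswith(b"\x89HDF\r\n\x1a\n"):
        return "hdf5"  # container used by legacy Keras .h5 files
    if header.startswith(b"PK\x03\x04"):
        return "zip"  # container used by PyTorch and .keras archives
    if len(header) >= 2 and header[0] == 0x80 and 2 <= header[1] <= 5:
        return "pickle"  # PROTO opcode plus version (pickle protocol 2 and later)
    if len(header) >= 9:
        # safetensors: 8-byte little-endian header length, then a JSON object.
        (length,) = struct.unpack("<Q", header[:8])
        if length > 0 and header[8:9] == b"{":
            return "safetensors"
    return "unknown"


print(detect_format(b"GGUF\x03\x00\x00\x00"))
print(detect_format(struct.pack("<Q", 2) + b"{}"))
```

Once the format is known, a scanner can choose the deeper, format-specific checks to run, which is the mapping step described above.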

Specific threats, such as Arbitrary Code Execution At Runtime, are grouped together when they are reported and are shown under a single rule. This allows you to block specific types of threats without having to manage the complexity of all the various formats and permutations.

You can also configure common rules for all models regardless of their source type.

Security Rule Checks

| Rule Name | Description | Remediation | Example |
|---|---|---|---|
| Runtime Code Execution Check | This rule detects Arbitrary Code Execution that can occur during model inference through various methods. | Avoid running inference with this model until the finding has been investigated. | These attacks mean the model will execute code without your knowledge during use, making this a Critical issue to block. To learn more about this threat type, refer to Runtime Threats. |
| Known Framework Operators Check | Machine learning model formats often include built-in operators to support common data science tasks during model operation. Some frameworks allow custom operator definitions, which poses risks when executing unknown third-party code. | | When TensorFlow SavedModel Contains Unknown Operators is detected, it indicates that the model creator is using non-standard tooling approaches. For more information, refer to SavedModel Contains Unknown Operators. |
| Model Architecture Backdoor Check | A model's behavior can contain a backdoor embedded in its architecture, specifically through a parallel data flow path within the model. For most inputs, the model operates as expected, but for certain inputs containing a trigger, the backdoor activates and effectively alters the model's behavior. | Avoid running inference with this model until the finding has been investigated. | When ONNX Model Contains Architectural Backdoor is detected, it warns you that a model has at least one non-standard path requiring further investigation. For more information, refer to ONNX Model Contains Architectural Backdoor. |
| Load Time Code Execution Check | This rule checks for code that executes immediately upon model loading, before any inference occurs. Simply loading the model (for example, with `pickle.load()` or `torch.load()`) is enough to trigger the exploit. | Avoid running inference with this model until the finding has been investigated. | For more information, refer to PyTorch Model Arbitrary Code Execution. |
| Suspicious Model Components Check | Not all rules target specific threats; some, like this one, assess a model's potential for future exploitation. This check identifies components within the model that could enable malicious code execution later. | | A violation should prompt caution and a thorough evaluation of all relevant components around the model before you decide whether the model is safe for use. For more information, refer to Keras Lambda Layers Can Execute Code. |
| Stored In Approved File Format | This rule verifies whether the model is stored in a format that you've approved within the rule's list. We recommend enabling these formats by default: By default, Model Security reports that `pickle` and `keras_metadata` are not approved formats. | If your organization can support models in this format, follow these steps: Alternatively, convert the model to an approved format; we recommend safetensors. Default Approved Formats: | - |
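
To make the Load Time Code Execution Check more concrete, the following sketch shows why loading a pickle can run code, and how a pickle can be inspected without loading it. It uses only Python's standard `pickle` and `pickletools` modules. The `risky_opcodes` helper and the `RunsOnLoad` class are hypothetical illustrations and do not represent how Model Security detects this threat. Benign libraries also emit these opcodes, so a match signals capability rather than proof of malice.

```python
import pickle
import pickletools

# Opcodes that let a pickle import objects or call them while it is loading.
RISKY_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX", "REDUCE",
}


def risky_opcodes(data: bytes) -> list[str]:
    """Return the import/call opcodes found in a pickle, without loading it."""
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in RISKY_OPCODES
    ]


class RunsOnLoad:
    """Harmless stand-in for a payload: unpickling it would call print()."""

    def __reduce__(self):
        return (print, ("executed during pickle.load()",))


plain = pickle.dumps({"weights": [0.1, 0.2]})
crafted = pickle.dumps(RunsOnLoad())  # serialized only, never loaded here

print("plain:", risky_opcodes(plain))
print("crafted:", risky_opcodes(crafted))
```

The plain dictionary contains no import or call opcodes, while the crafted object carries an instruction to call a function at load time. This is the reason formats such as safetensors, which store only tensor data, are recommended above.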

Hugging Face Model Rules

There are rules specifically scoped to Hugging Face models. These rules target the particular metadata you control or that is consistently provided by Hugging Face.

| Hugging Face Model Rule | Description | Remediation | Default |
|---|---|---|---|
| License Exists | The simplest rule in the application. This rule checks whether the model has a license associated with it. If no license is present, this rule fails. | | - |
| License Is Valid For Use | This rule gives you more control over the models that your organization will run or test. As a commercial entity, you may not want to run models with non-permissive open-source licenses such as GPLv3. Adding licenses to the rule expands the list of licenses that are allowed for use. Refer to Hugging Face Licenses for the full list of license options. The License identifier field is used to map to the license in the model metadata. | If your organization can use the license, follow these steps: Alternatively, find an equivalent model that uses a license already on your approved list. The scan results for this violation will show your organization's current approved licenses list. | Default approved licenses: |
| Model Is Blocked | There may be cases where you need to prevent a specific model from being used. This rule enables you to block particular models as needed. To block a model from Hugging Face, use the format `Organization/ModelName`. For example, entering `opendiffusion/sentiment-check` blocks the `sentiment-check` model from the `opendiffusion` organization. When a model is both blocked and allowed simultaneously, the block takes precedence. | | All models are allowed by default. You must explicitly add models to the blocklist. |
| Organization Verified By Hugging Face | Hugging Face is an excellent site for the latest models from all over the world, giving you access to cutting-edge research. You may want to restrict organizations from providing unverified models from Hugging Face. This prevents accidentally running models from deceptively similar sources, such as `facebook-llama` instead of the legitimate `meta-llama`. This rule checks that Hugging Face has verified the organization; if it has, models from that organization pass this check. | | - |
| Organization Is Blocked | If a particular organization delivers problematic models, or you'd like to block it for any other reason, use this rule. Enter the organization name into the rule and all models from that organization will be blocked. For example, `facebook-llama` would block all of the models provided by that organization. | | All models are allowed by default. You must explicitly add models to the blocklist. |
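
The license and blocklist rules in the table above can be pictured as simple set lookups. The following is a minimal local sketch, assuming model identifiers in the `Organization/ModelName` format. The `HuggingFacePolicy` class and `evaluate` function are hypothetical and are not the product's API, and the approved license identifiers are illustrative values rather than the product defaults.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class HuggingFacePolicy:
    """Hypothetical local model of the Hugging Face rules; not the product API."""

    approved_licenses: set[str] = field(default_factory=set)
    blocked_models: set[str] = field(default_factory=set)
    blocked_orgs: set[str] = field(default_factory=set)


def evaluate(policy: HuggingFacePolicy, model_id: str, license_id: Optional[str]) -> list[str]:
    """Return the names of the rules that this model would violate."""
    org = model_id.split("/", 1)[0]
    failures = []
    if license_id is None:
        failures.append("License Exists")
    elif license_id not in policy.approved_licenses:
        failures.append("License Is Valid For Use")
    # Block rules are checked independently of the license outcome.
    if model_id in policy.blocked_models:
        failures.append("Model Is Blocked")
    if org in policy.blocked_orgs:
        failures.append("Organization Is Blocked")
    return failures


policy = HuggingFacePolicy(
    approved_licenses={"apache-2.0", "mit"},  # illustrative, not the defaults
    blocked_models={"opendiffusion/sentiment-check"},
    blocked_orgs={"facebook-llama"},
)

print(evaluate(policy, "opendiffusion/sentiment-check", "mit"))
print(evaluate(policy, "facebook-llama/some-model", None))
print(evaluate(policy, "meta-llama/some-model", "gpl-3.0"))
```

Because the block checks run regardless of the license result, a blocked model is reported even when its license is approved, which mirrors the precedence described for Model Is Blocked.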

Governance Rules

These rules enforce organizational policies around model storage and provenance. They are not specific to any one model framework.

| Governance Rules | Description | Remediation | Default |
|---|---|---|---|
| Stored In Approved Location | This rule validates that the model's storage location matches one of the approved location prefixes. Models stored in non-approved locations will be flagged. You can modify the approved locations list by adding or removing location prefixes as needed. | If the model is stored in a trusted location within your organization, follow these steps: Alternatively, move the model to a location already approved by your organization. The scan results for this violation will show your organization's current approved locations list. | Approved locations: `s3`, `gs`, and the root directory (`/`). |
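
The prefix matching described for Stored In Approved Location can be sketched as a plain string check. This is a minimal illustration, not the product's implementation. The `is_approved_location` function is hypothetical, and the exact prefix spellings (`s3://`, `gs://`, `/`) are assumptions about how the documented defaults might be written.

```python
# Assumed spellings for the documented defaults: s3, gs, and the root directory.
DEFAULT_APPROVED_PREFIXES = ("s3://", "gs://", "/")


def is_approved_location(model_uri: str, prefixes=DEFAULT_APPROVED_PREFIXES) -> bool:
    """Return True when the model URI starts with any approved prefix."""
    return model_uri.startswith(tuple(prefixes))


print(is_approved_location("s3://demo-models/unsafe_model.pkl"))
print(is_approved_location("https://example.com/model.bin"))
# Narrowing the list to a single bucket flags models stored elsewhere.
print(is_approved_location("s3://demo-models/unsafe_model.pkl", ["s3://approved-bucket/"]))
```

Using longer prefixes, such as a specific bucket path, gives tighter control than approving an entire storage scheme.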