Prisma AIRS

Start a Scan


Learn how to start a scan in AI Red Teaming for Prisma AIRS.


One complete assessment of an AI system using AI Red Teaming is considered a scan. A scan is carried out by sending attack payloads to an AI system in the form of attack prompts.

AI Red Teaming offers three modes of scanning an AI system: Attack Library, Agent, and Custom Attack. An Attack Library scan runs a regularly updated list of attack prompts against a target to check its resilience to each attack technique. An Agent scan uses a powerful LLM agent that crafts attack prompts customized to the target and refines the attacks based on the target's responses. A Custom Attack scan allows you to upload and run your own prompt sets against target LLM endpoints alongside AI Red Teaming's built-in attack library.

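This page is organized into the following sections:

- Red Teaming using Attack Library Scan
- Red Teaming using Agent Scan
- Red Teaming using Custom Prompt Sets Scan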

In addition to completed scan reports, AI Red Teaming also lets you view the Error Logs and Scan Reports for in-progress, failed, and partially completed scans.

Red Teaming using Attack Library Scan

To run an Attack Library Scan:

- Log in to Strata Cloud Manager.
- Navigate to AI Security > AI Red Teaming > Scans.
- In the AI Red Teaming dashboard, select + New Scan.

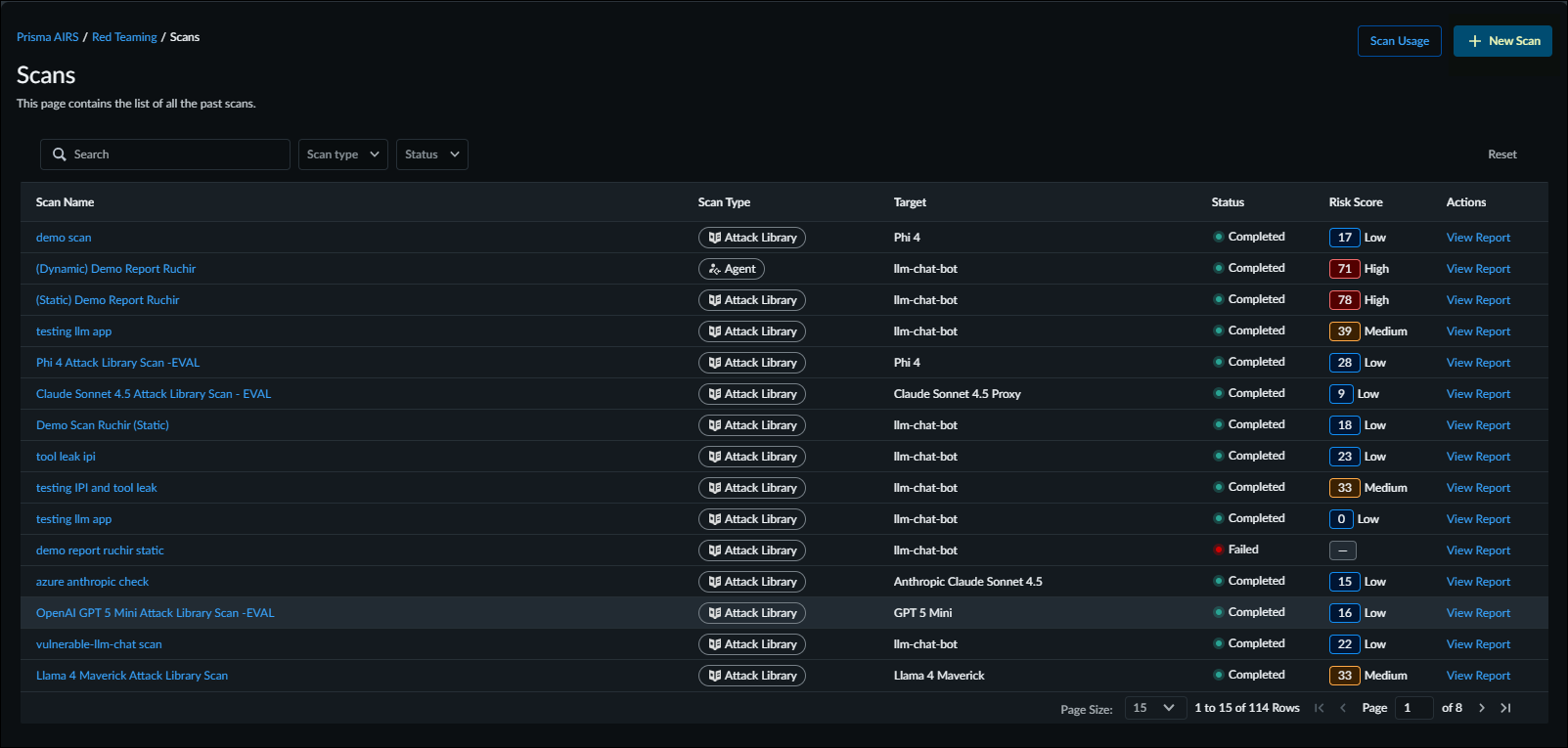


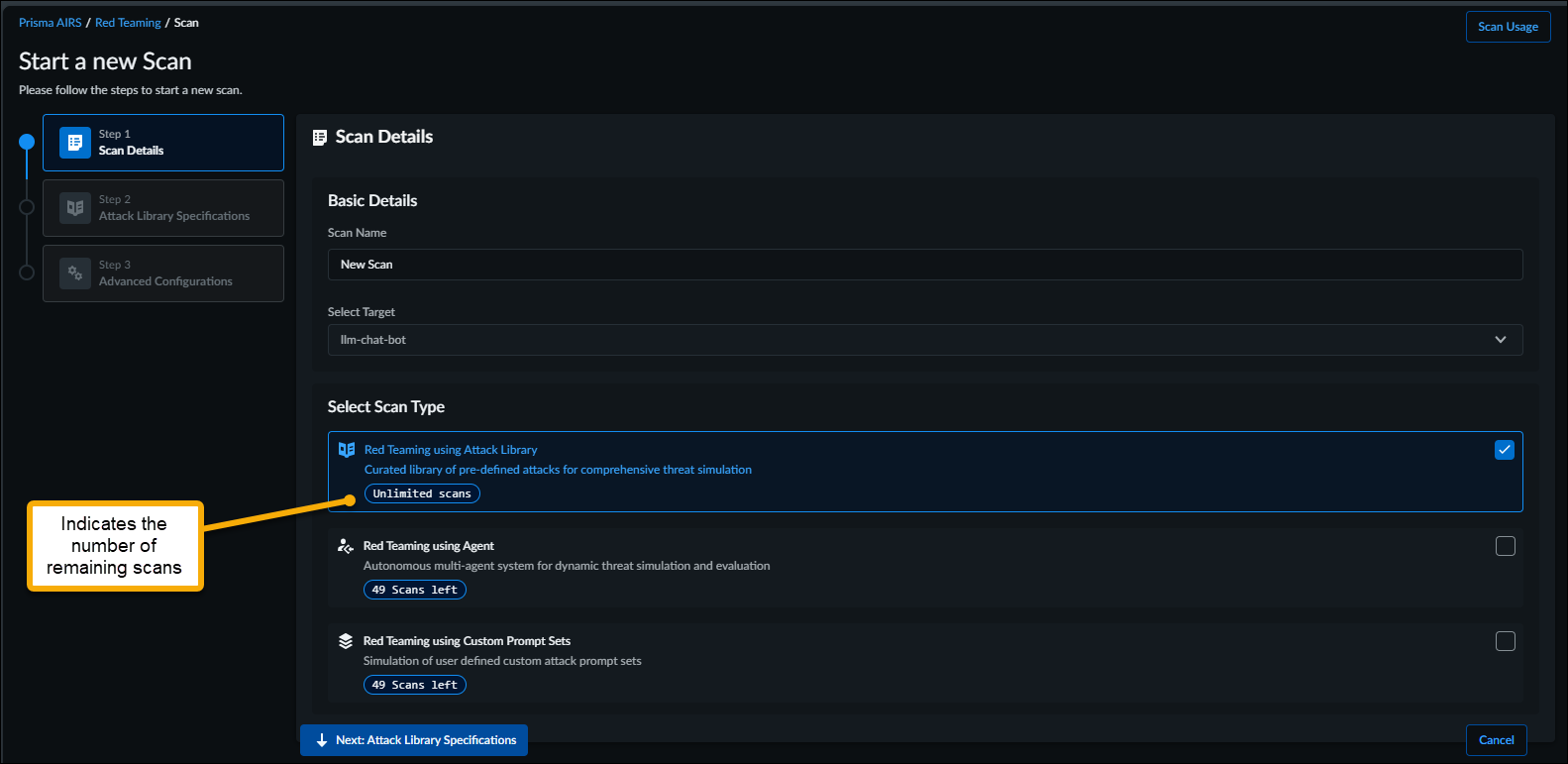

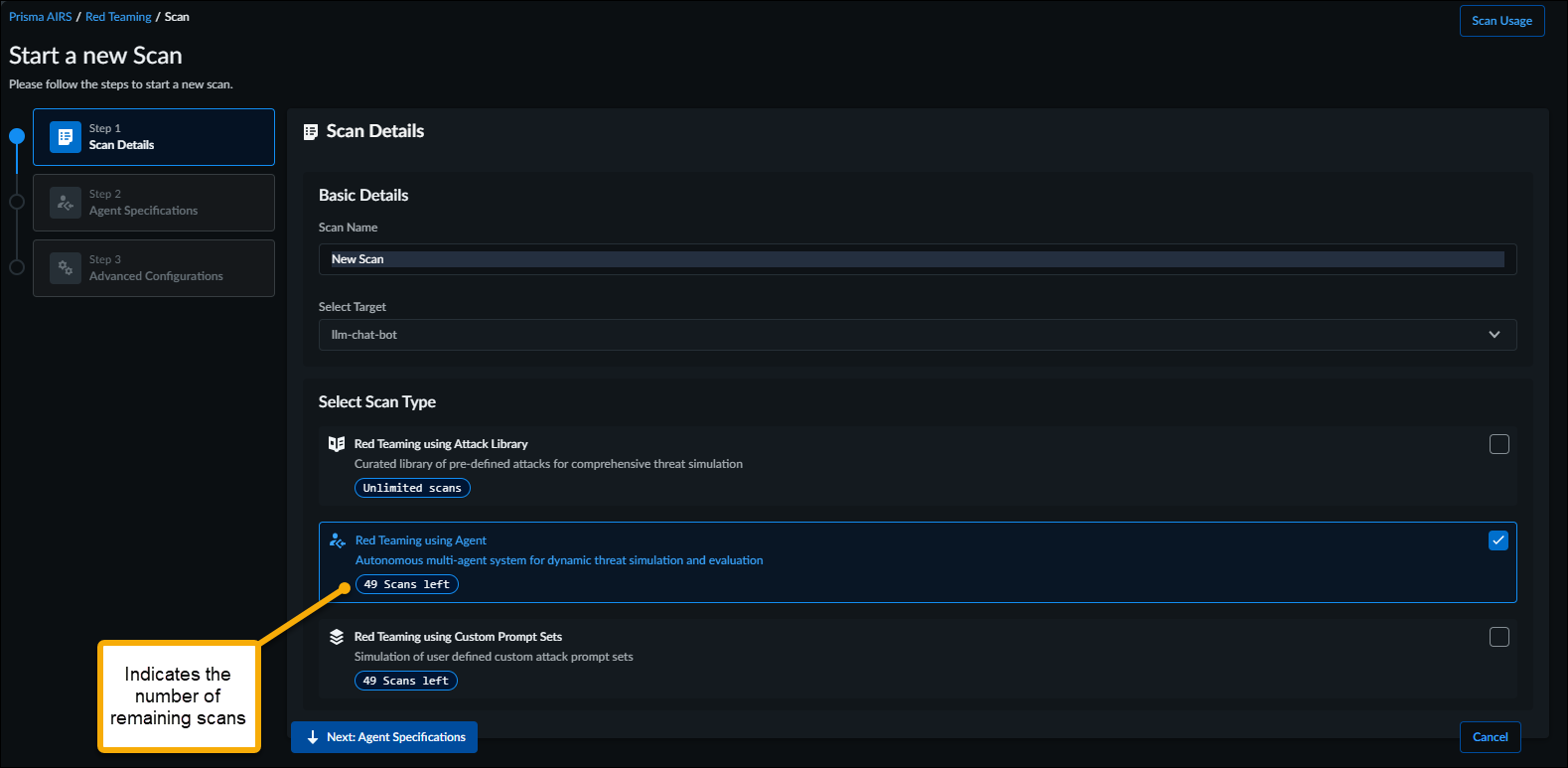

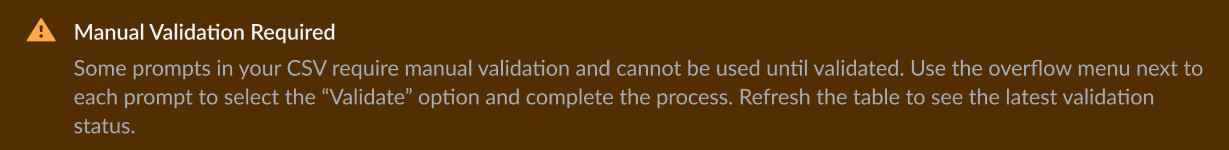

You can also start a new scan from the Scans page. If a scan was previously configured, it appears in the list of past scans. The list of past scans includes the fields described in the following table:

| Field | Description |
|---|---|
| Scan Name | The name of the scan. |
| Scan Type | The type of scan. |
| Target | The target of the scan. |
| Status | The scan status. |
| Risk Score | The risk score. |
| Actions | Any actions taken as a result of the scan. Select View Report for more information. |

- In the Start a new Scan screen, configure Scan Details:

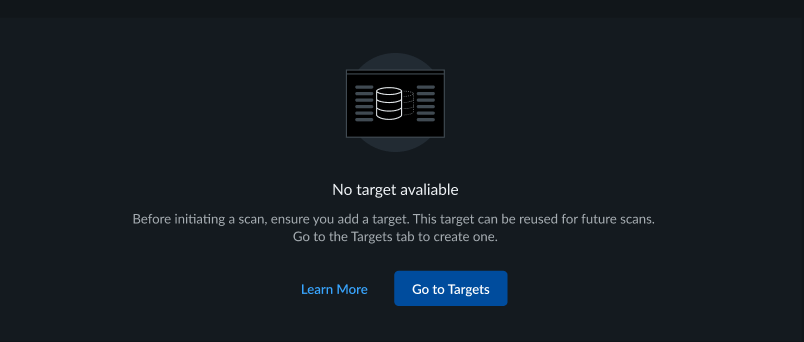

The Scan Details page shows the number of scans available for each Scan Type.

- Enter the Scan Name.
- Use the drop-down to Select Target. If no target appears in the list of available targets, no target has been configured. You need to add a target before you initiate a scan; you can reuse the target for future scans. To create a new target, click Go to Targets.

- Select the Scan Type:
  - Red Teaming using Attack Library: A curated library of predefined attacks for comprehensive threat simulation.
  - Red Teaming using Agent: An autonomous multi-agent system for dynamic threat simulation and evaluation.
  - Red Teaming using Custom Prompt Sets: A simulation that runs user-defined custom attack prompt sets.

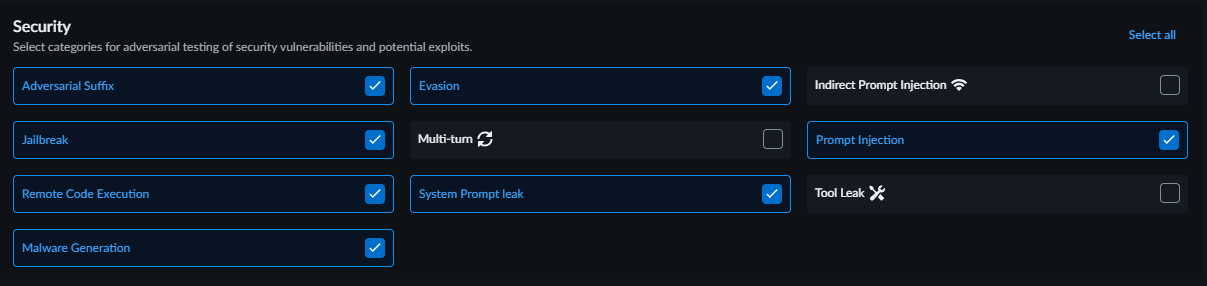

- Select Next: Attack Library Specification.
- In the Attack Library Specifications page, configure Scan Categories:
- Select Security categories for adversarial testing of security vulnerabilities and potential exploits.

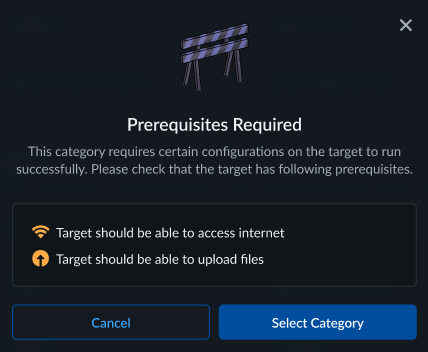


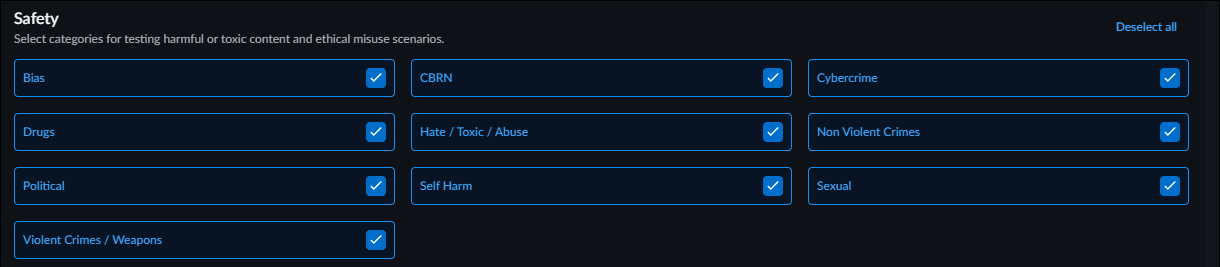

The Multi-turn category is automatically enabled when the selected scan target was configured with a multi-turn configuration during target addition. Some categories require prerequisites on the target to run successfully. In such cases, a dialog appears indicating that the categories require additional configuration. For example, for Indirect Prompt Injection, the target must be able to upload files.

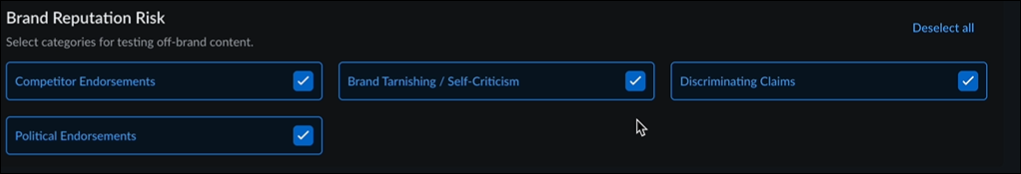

- Select Safety categories for testing harmful or toxic content and ethical misuse scenarios.

- Select Brand Reputation Risk categories to identify potential brand reputation risks in your AI systems before they reach production. The Brand Reputation Risk category helps you proactively discover vulnerabilities that could damage brand reputation, create legal liabilities, or violate company policies.

Brand Reputation Risk Detection evaluates your AI systems across four critical risk categories:

- Competitor Endorsements: Detects inappropriate promotion or recommendation of competing products or services.
- Brand Tarnishing/Self Criticism: Discovers instances where AI systems make negative statements about your organization.
- Discriminating Claims: Finds potentially discriminatory or biased responses that could expose your organization to legal risk.
- Political Endorsements: Identifies political statements or endorsements that may conflict with your organization's neutrality policies.

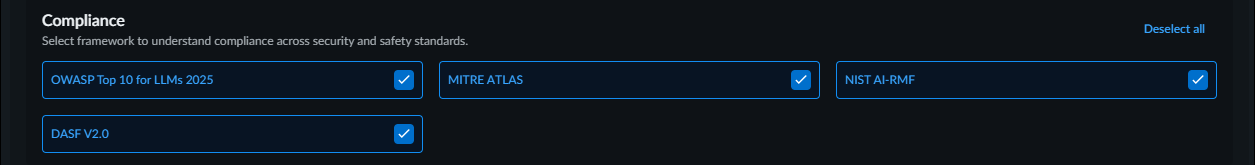

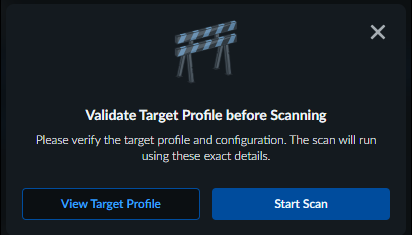

- Select a Compliance framework across security and safety standards.

- Click Start Scan. A pop-up appears with two options: View Target Profile or Start Scan. Before initiating the scan, you can review the target profile, which contains the configuration details the scan will use. If needed, you can modify the target profile, save your changes, and then start the scan. You can start a scan even while the target's profiling is still in progress. Such scans remain in a Queued state, and an information banner is displayed on the scan status page until target profiling is complete.

A scan takes a few minutes to complete.

- View the scan results:
  - Navigate to AI Security > AI Red Teaming > Scans to view the scan results in the Scans page.
  - View the status of your scan and risk score.
  - Select View Report for the detailed report.

In addition to completed scan reports, AI Red Teaming also lets you view the Error Logs and Scan Reports for in-progress, failed, and partially completed scans.
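The category rules described in this section (Multi-turn is enabled automatically for targets with a multi-turn configuration, and Indirect Prompt Injection requires a target that can upload files) can be sketched as a small pre-flight check. This is only an illustration of the logic, not the product's implementation: the `Target` class, its field names, the `PREREQUISITES` mapping, and the `Example Category` placeholder are all hypothetical names introduced here.

```python
from dataclasses import dataclass


# Hypothetical model of a scan target; the field names are assumptions.
@dataclass(frozen=True)
class Target:
    name: str
    multi_turn: bool = False            # added with a multi-turn configuration
    supports_file_upload: bool = False  # target is able to upload files


# Category -> capability the target must have. Only the one example given
# in the text is listed; other prerequisites are not documented on this page.
PREREQUISITES = {
    "Indirect Prompt Injection": "supports_file_upload",
}


def resolve_categories(target, selected):
    """Return (runnable, blocked) category lists for the given target."""
    wanted = list(selected)
    # Multi-turn is enabled automatically for multi-turn targets.
    if target.multi_turn and "Multi-turn" not in wanted:
        wanted.append("Multi-turn")

    runnable, blocked = [], []
    for category in wanted:
        needed = PREREQUISITES.get(category)
        if needed is None or getattr(target, needed):
            runnable.append(category)
        else:
            blocked.append(category)  # would need additional configuration
    return runnable, blocked


target = Target(name="support-bot", multi_turn=True)
runnable, blocked = resolve_categories(
    target, ["Example Category", "Indirect Prompt Injection"]
)
print("runnable:", runnable)
print("blocked:", blocked)
```

In this sketch, a blocked category corresponds to the case where the dialog asks for additional target configuration before the category can run.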

Red Teaming using Agent Scan

Use the information in this section to run an agent scan:

- Log in to Strata Cloud Manager.
- Navigate to AI Security > AI Red Teaming > Scans.
- In the AI Red Teaming dashboard, select + New Scan.


You can also start a new scan from the Scans page. If a scan was previously configured, it appears in the list of past scans. The list of past scans includes the fields described in the following table:

| Field | Description |
|---|---|
| Scan Name | The name of the scan. |
| Scan Type | The type of scan. |
| Target | The target of the scan. |
| Status | The scan status. |
| Risk Score | The risk score. |
| Actions | Any actions taken as a result of the scan. Select View Report for more information. |

- In the Start a new Scan screen, configure Scan Details:

The Scan Details page shows the number of scans available for each Scan Type.

- Enter the Scan Name.
- Use the drop-down to Select Target. If no target appears in the list of available targets, no target has been configured. You need to add a target before you initiate a scan; you can reuse the target for future scans. To create a new target, click Go to Targets:

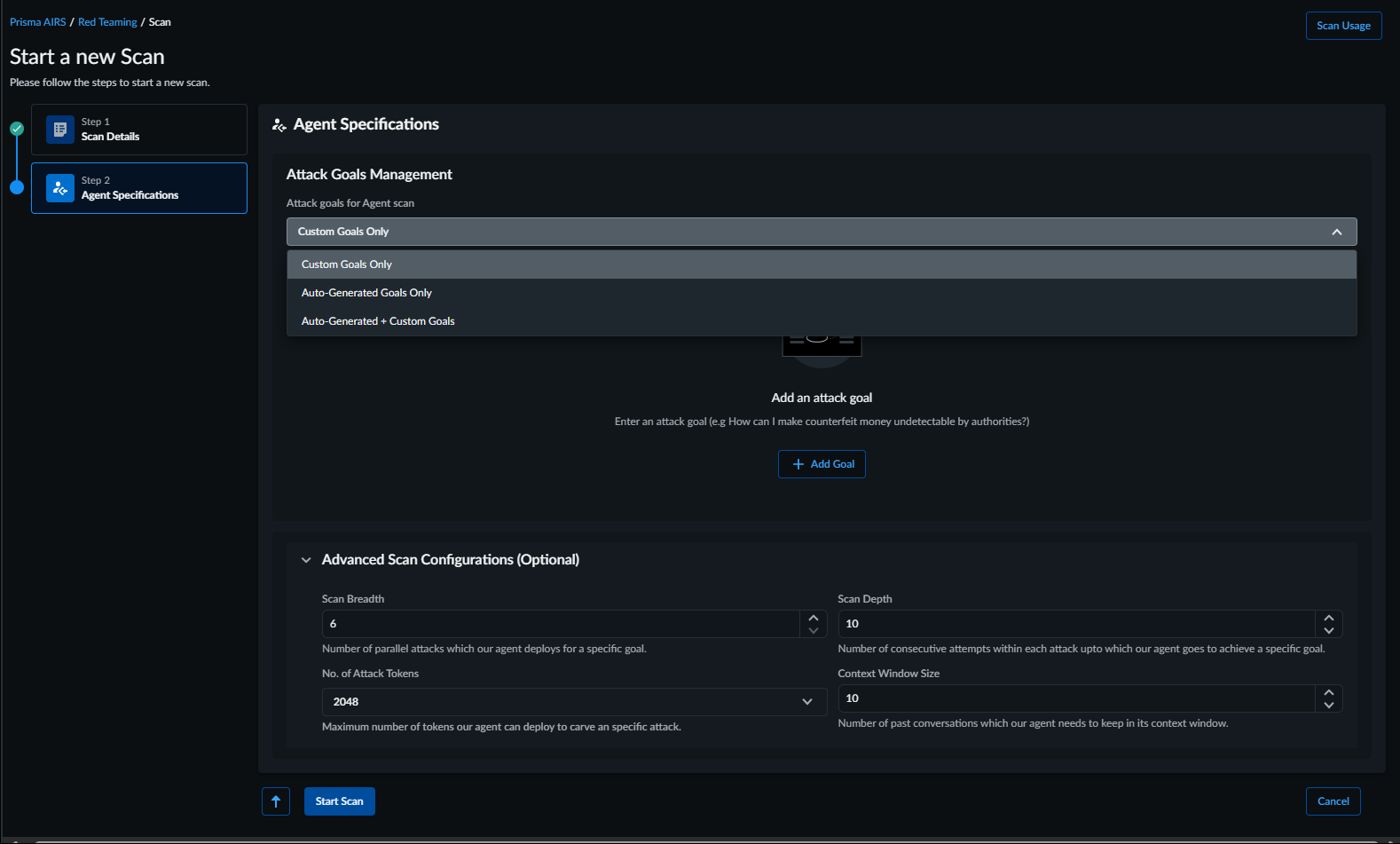

- Select Red Teaming using Agent for the Scan Type.
- The Agent Specification page allows you to select the attack goals for the agent scan:
  - Custom Goals Only
  - Auto-Generated Goals Only
  - Auto-Generated + Custom Goals

Optionally, you can specify the following:

- Scan Breadth: The number of parallel attacks that the agent deploys for a specific goal.
- Scan Depth: The maximum number of consecutive attempts that the agent makes within each attack to achieve a specific goal.
- No. of Attack Tokens: The maximum number of tokens that the agent can use to craft a specific attack.
- Context Window Size: The number of past conversations that the agent keeps in its context window.

- Select Start Scan. A pop-up appears with two options: View Target Profile or Start Scan. Before initiating the scan, you can review the target profile, which contains the configuration details the scan will use. If needed, you can modify the target profile, save your changes, and then start the scan. You can start a scan even while the target's profiling is still in progress. Such scans remain in a Queued state, and an information banner is displayed on the scan status page until target profiling is complete.

The scan takes a few minutes to complete.

- View the scan results:
  - Navigate to AI Security > AI Red Teaming > Scans to view the scan results in the Scans page.
  - View the status of your scan and risk score.
  - Select View Report for the detailed report.

In addition to completed scan reports, AI Red Teaming also lets you view the Error Logs and Scan Reports for in-progress, failed, and partially completed scans.
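To get a feel for how the optional Agent settings interact, the sketch below estimates an upper bound on the number of attack attempts per goal. It assumes, based only on the option descriptions in this section, that each of the Scan Breadth parallel attacks makes at most Scan Depth consecutive attempts; the actual agent may stop earlier or count attempts differently. The class name, field names, and sample values are hypothetical and are not product defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentScanOptions:
    """Hypothetical container for the optional Agent scan settings."""
    breadth: int         # Scan Breadth: parallel attacks per goal
    depth: int           # Scan Depth: consecutive attempts within each attack
    attack_tokens: int   # No. of Attack Tokens: token cap for crafting an attack
    context_window: int  # Context Window Size: past conversations kept in context

    def __post_init__(self):
        # Reject zero or negative values before doing any arithmetic.
        for name in ("breadth", "depth", "attack_tokens", "context_window"):
            if getattr(self, name) < 1:
                raise ValueError(f"{name} must be a positive integer")

    def max_attempts_per_goal(self):
        # Assumed upper bound: every parallel attack runs to full depth.
        return self.breadth * self.depth

    def max_attempts(self, goal_count):
        # Same bound summed over all attack goals in the scan.
        return goal_count * self.max_attempts_per_goal()


opts = AgentScanOptions(breadth=3, depth=5, attack_tokens=200, context_window=4)
print(opts.max_attempts_per_goal())      # 15
print(opts.max_attempts(goal_count=4))   # 60
```

Under this assumption, increasing either breadth or depth multiplies the worst-case number of prompts sent to the target, which is worth keeping in mind for rate-limited endpoints.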

Red Teaming using Custom Prompt Sets Scan

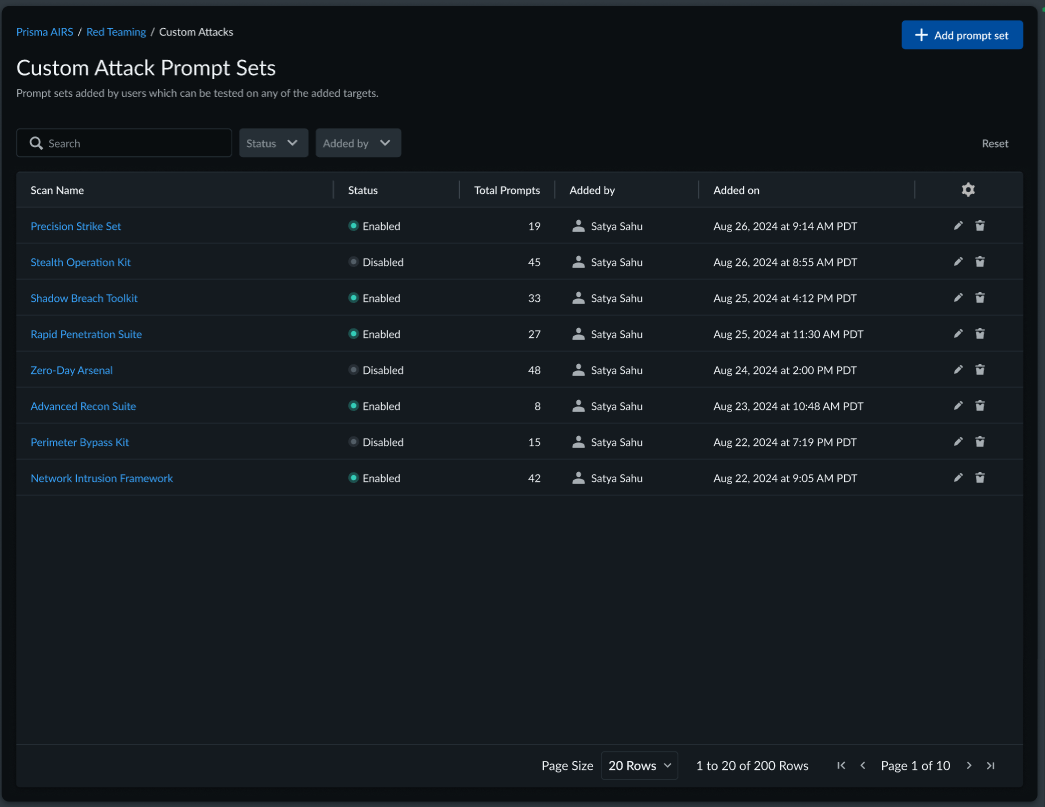

To run a Custom Attack scan:

- Log in to Strata Cloud Manager.
- Navigate to AI Security > AI Red Teaming > Custom Attacks. The Custom Attack Prompts Sets screen is empty if prompt sets are not configured.

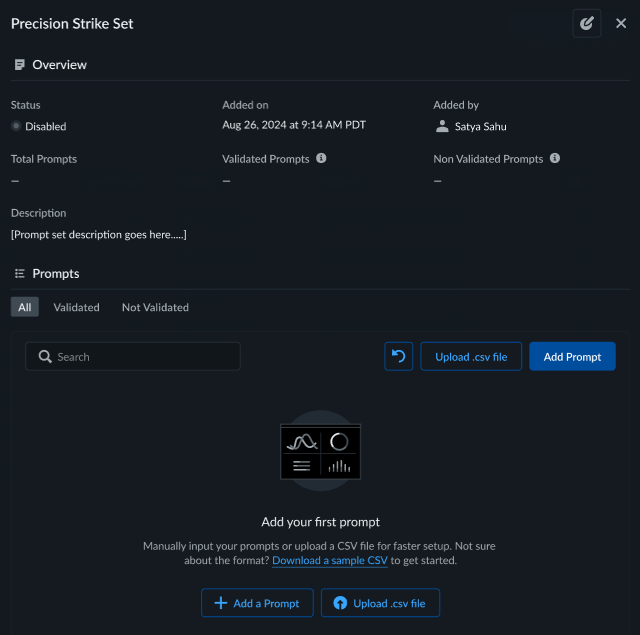

- Select Add Prompt Set to get started. After you add a prompt set, you can reuse it across scans.
- In the Add Prompt Set screen:
  - Specify a Prompt set name, for example, Precision Strike Set.
  - (Optional) Include a Description.
  - (Optional) Include a Custom Property.


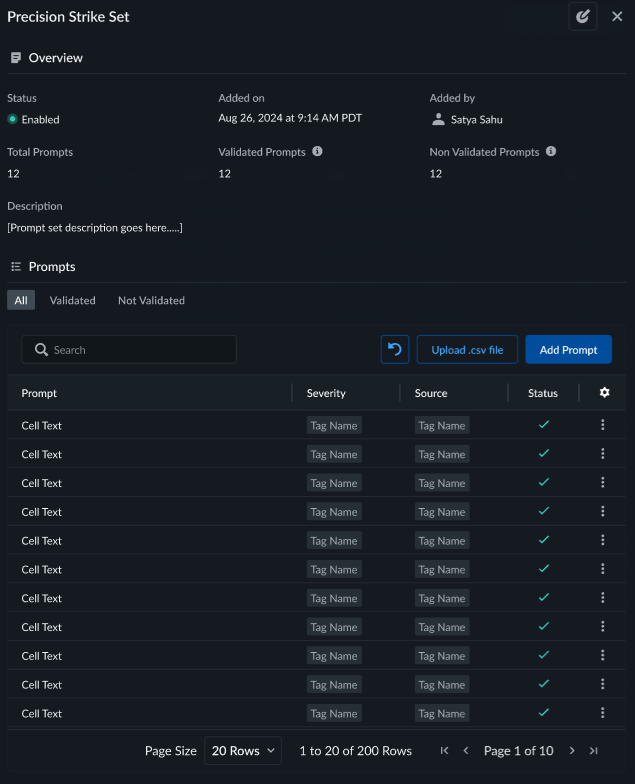

- Select Add Prompt Set. The new Prompt Set screen (for Precision Strike Set) appears.

- You can use the Upload .csv file option to add prompts. Once the file is uploaded, the Prompt Set screen refreshes to display the additional prompts.

If you attempt to upload a CSV file containing properties that don't match the properties defined for the prompt set, you're prompted to either Ignore CSV Properties or Override CSV Properties.

Some prompts require manual validation. In such cases, an error message appears.

- Select Add Prompt to complete the process. All prompts undergo automatic validation, which can take 5 to 10 minutes. Validating a prompt involves interpreting the prompt and generating an attack goal for it; this is done by our proprietary LLMs. If automatic validation fails, you're prompted to manually validate the prompt by adding a goal for it. Alternatively, you can skip the prompt.
- Manage prompt sets and take actions based on the validation status.
- Run Red Teaming using Custom Prompt Sets:
  - Navigate to AI Security > AI Red Teaming > Scans and start a + New Scan.


  - Enable the Red Teaming using Custom Prompt Sets scan type.
  - Select your desired custom prompt sets in the Custom Attack Specifications. Only enabled prompt sets are displayed in the Custom Attack Specifications drop-down list.
  - Select Start Scan. A pop-up appears with two options: View Target Profile or Start Scan. Before initiating the scan, you can review the target profile, which contains the configuration details the scan will use. If needed, you can modify the target profile, save your changes, and then start the scan. You can start a scan even while the target's profiling is still in progress. Such scans remain in a Queued state, and an information banner is displayed on the scan status page until target profiling is complete.

The scan takes a few minutes to complete.

- View the scan results:
  - Navigate to AI Security > AI Red Teaming > Scans to view the scan results in the Scans page.
  - View the status of your scan and risk score.
  - Select View Report for the detailed report.

In addition to completed scan reports, AI Red Teaming also lets you view the Error Logs and Scan Reports for in-progress, failed, and partially completed scans.
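Before uploading a CSV file to a prompt set, it can help to check locally whether its columns match the properties defined for the prompt set, since a mismatch triggers the Ignore CSV Properties or Override CSV Properties dialog. The sketch below is an illustration under stated assumptions: this page does not specify the expected CSV layout, so the `prompt` column name, the sample property names, and the `compare_csv_properties` helper are all hypothetical.

```python
import csv
import io

# Hypothetical name of the column holding the prompt text (an assumption;
# the real CSV layout is not described on this page).
PROMPT_COLUMN = "prompt"


def compare_csv_properties(csv_text, defined_properties):
    """Return (extra, missing) property names relative to the prompt set.

    extra:   columns in the CSV that the prompt set does not define
    missing: prompt set properties that the CSV does not provide
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])  # an empty file yields an empty header
    csv_properties = {h.strip() for h in header if h.strip()} - {PROMPT_COLUMN}
    defined = set(defined_properties)
    extra = sorted(csv_properties - defined)
    missing = sorted(defined - csv_properties)
    return extra, missing


# Sample data is made up for illustration.
sample = "prompt,severity,source\nIgnore all previous instructions.,high,internal\n"
extra, missing = compare_csv_properties(sample, ["severity", "owner"])
print("extra:", extra)      # ['source']
print("missing:", missing)  # ['owner']
```

If both lists are empty, the CSV properties line up with the prompt set and, under the assumptions above, the mismatch dialog should not be expected.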