Prisma AIRS

Targets


Learn how to add an AI Red Teaming target in Prisma AIRS.


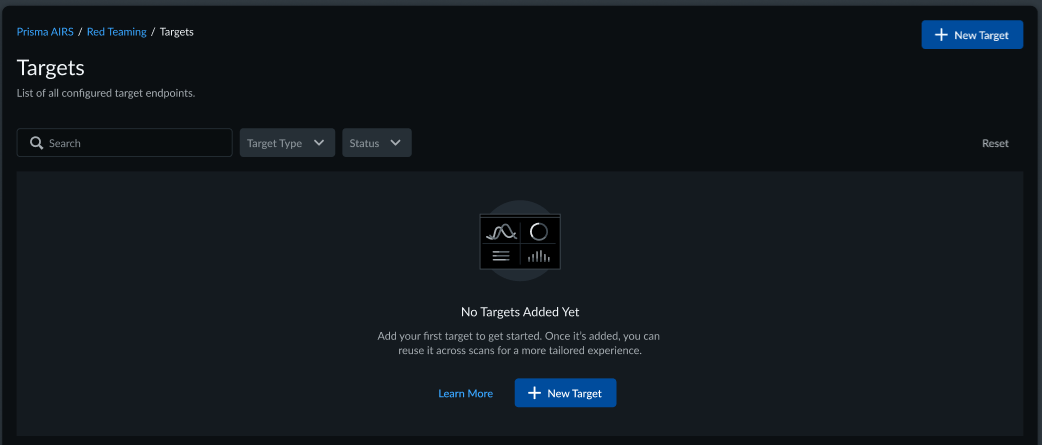

Before running any scan, you need to add a Target that you want to test.

Prisma AIRS AI Red Teaming supports REST and streaming APIs as targets. If you are using a model hosted on Hugging Face or served by OpenAI, pre-configured connection methods are also available that make your target easier to configure. Each of these connection methods is described on this page.

Add a New Target

Use Strata Cloud Manager to add a Red Teaming target.

The configuration for a target endpoint varies based on the chosen connection method. For example, for the Databricks connection method, you specify additional configuration options such as the authentication method and connection details. Each connection method is described separately below.

Creating a new target comprises the following steps.

At any time, you can save your configuration changes without applying updates by using the Save for Later option. A temporary version of the target configuration then appears in the list of available targets with the status Draft.

- Log in to Strata Cloud Manager.

- Navigate to AI Security > AI Red Teaming > Targets.

- Select + New Target.

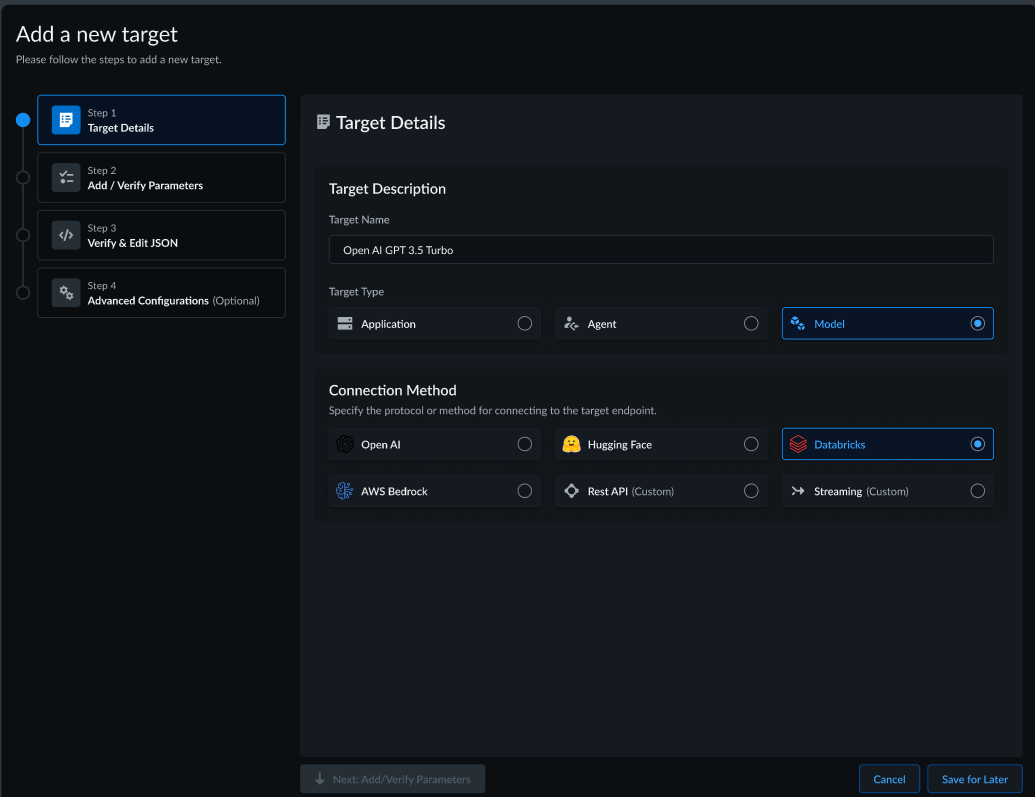


If target endpoints were previously configured, they appear as a list of available targets. You can edit or remove these existing targets.

- In the Add a new target screen, first specify Target Details:

- In the Target Description section, enter a Target Name (for example, OpenAI GPT 3.5 Turbo).

- Select the Target Type: Model, Application, or Agent. The following screen displays when you select Agent as the target type.

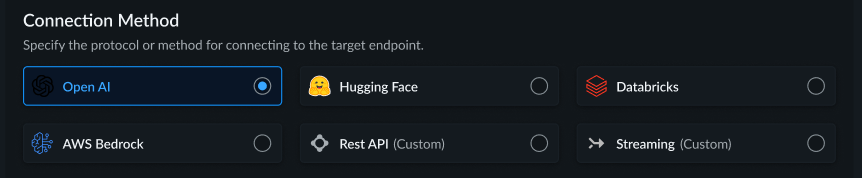

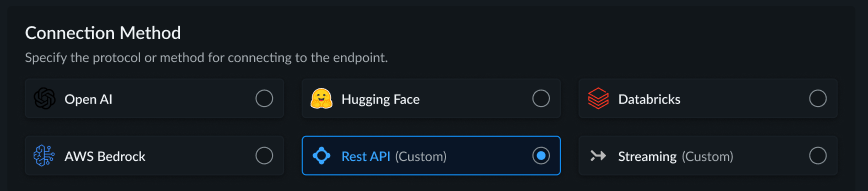


The on-screen message reminds you to choose Agent as your target type only if your application needs autonomous decision-making and tool-calling capabilities. An Agent is designed for applications where the AI independently determines which tools or functions to invoke, decides the sequence of actions, and adapts its approach based on results while working toward a defined objective. This configuration is appropriate when your use case goes beyond simple question answering and requires the AI to actively interact with external APIs, databases, or services to complete multi-step tasks autonomously.

- Specify the Connection Method for connecting to the target endpoint. Supported connection methods include OpenAI, Hugging Face, Databricks, AWS Bedrock, and Rest API or Streaming. Each connection method is described below.

Rest API and Streaming are considered custom connection methods. For these connection methods, you need to determine whether the endpoint is public or private. See Rest API or Streaming Connection Method below.

Connection Methods

Following are the connection methods you can use to add a target:

- Open AI and Hugging Face Connection Method

- Databricks Connection Method

- AWS Bedrock Connection Method

- Rest API or Streaming Connection Method


Open AI and Hugging Face Connection Method

Use the information in this section to configure target endpoints for OpenAI and Hugging Face.

- After specifying Target Details, set the Connection Method to OpenAI or Hugging Face.


- Click Next: Add/Verify Parameters.

- In the Add/Verify Parameters page, set the authentication mechanism and additional connection details:

- In the API Details section, enter the API key.

- Enter the API endpoint, for example, https://api.openai.com/v1/chat/completions.

- Specify Model Details:

- Enter a Model name (for example, gpt-3.5-turbo).

- Configure Model Streaming by enabling or disabling it.

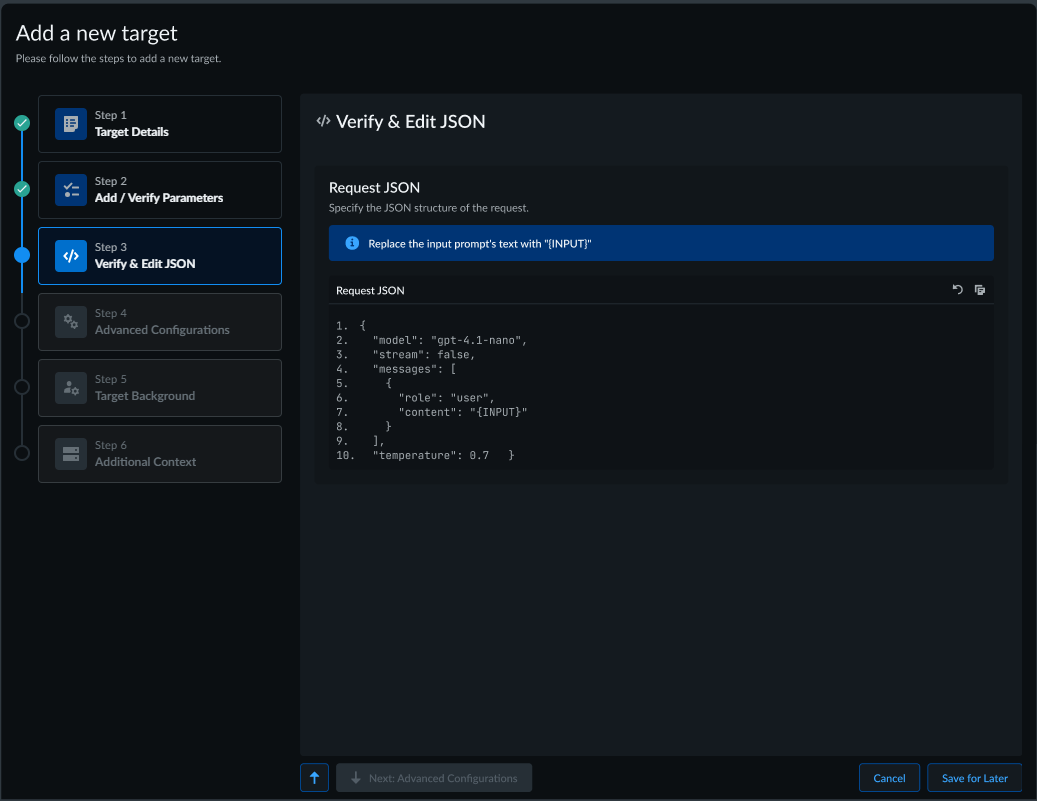

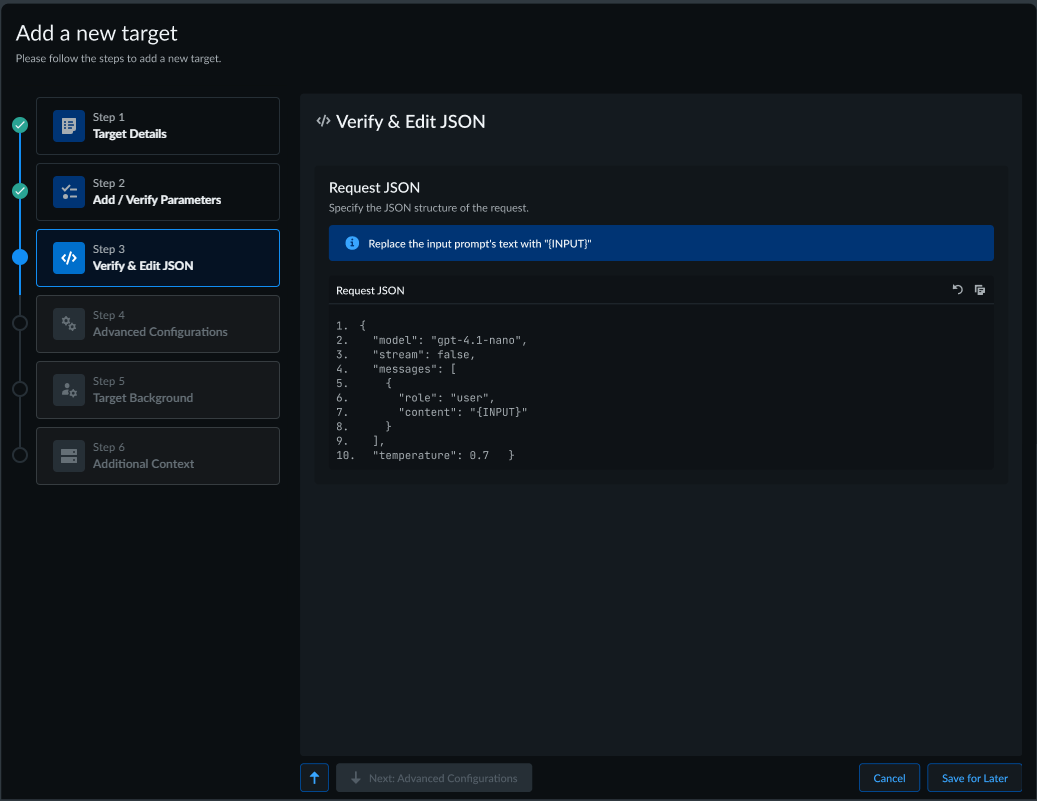

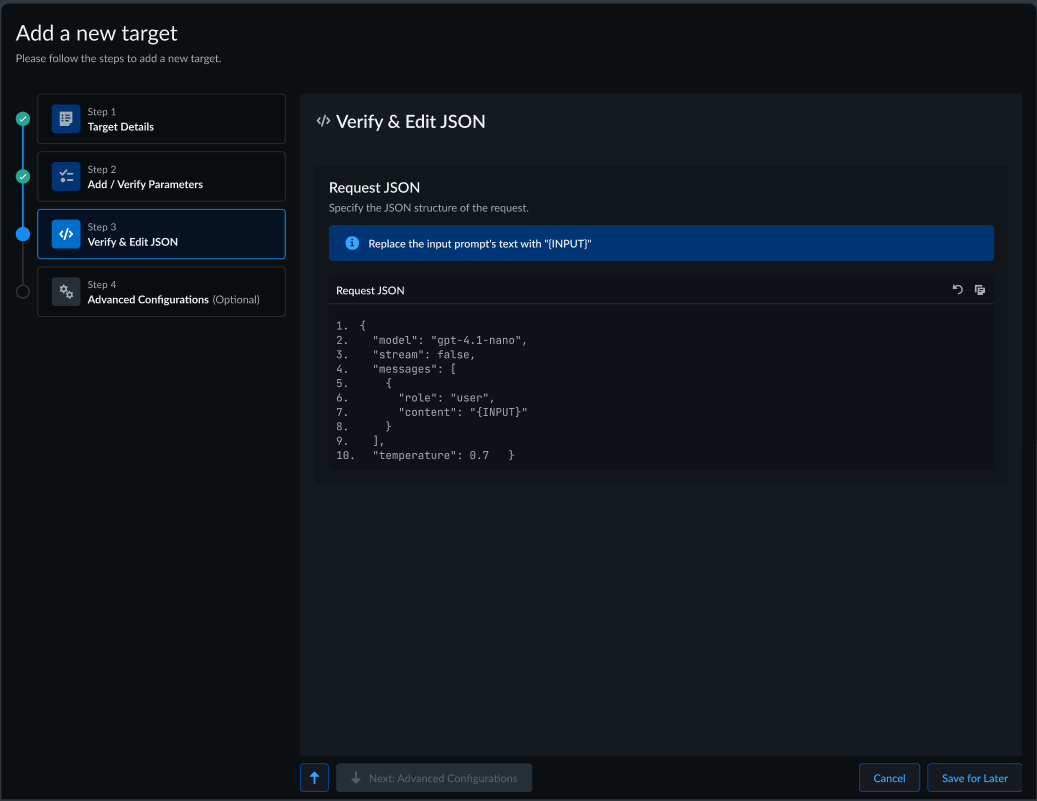

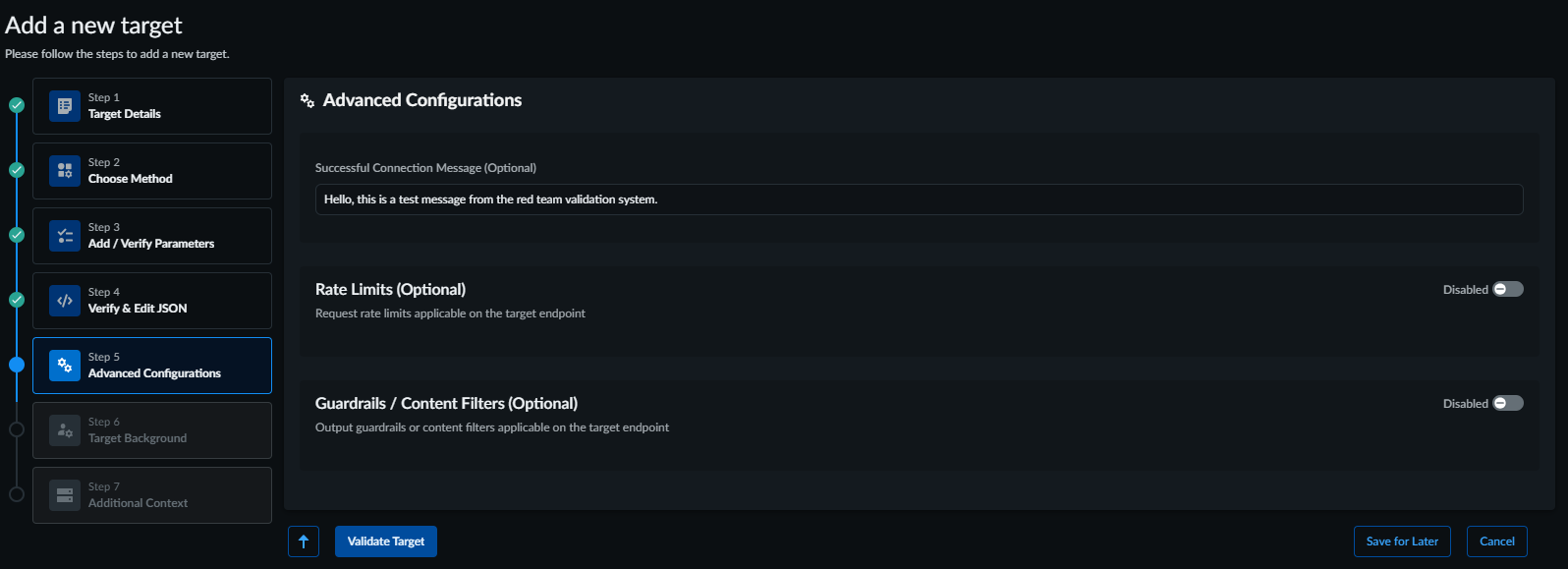

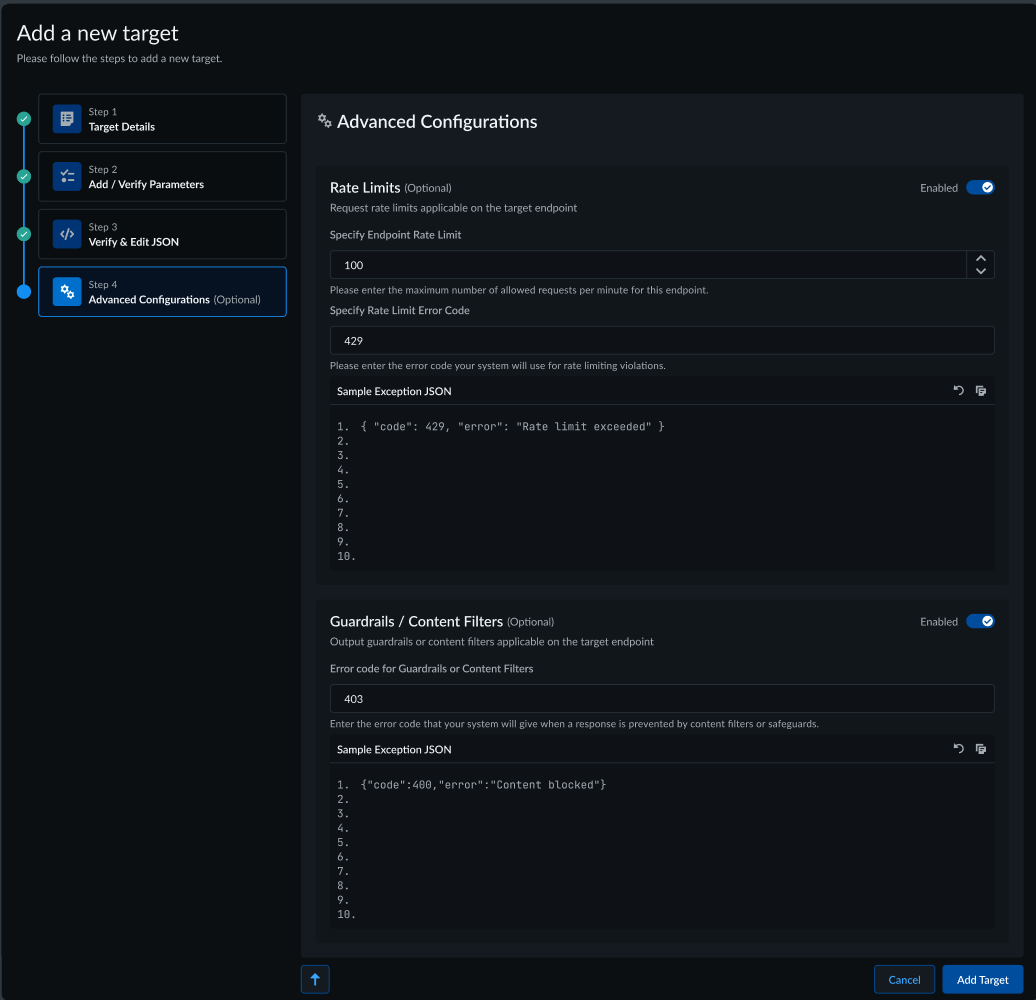

- Select Next: Verify & Edit JSON.

- In the Verify & Edit JSON page, specify the JSON structure of the request.
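The exact template syntax the Verify & Edit JSON page expects is not described here. As an illustration only, the sketch below assumes a request body in the OpenAI Chat Completions shape (`model`, `messages`, `stream`) and a hypothetical `{INPUT}` placeholder marking where each attack prompt is inserted. The point it demonstrates is that a prompt must be JSON-escaped before substitution; otherwise a quote or newline in the prompt produces an invalid request body.

```python
import json

# Hypothetical placeholder marking where each attack prompt goes;
# the marker the product actually uses may differ.
PLACEHOLDER = "{INPUT}"

# A request body in the OpenAI Chat Completions shape.
TEMPLATE = """{
  "model": "gpt-3.5-turbo",
  "messages": [{"role": "user", "content": "{INPUT}"}],
  "stream": false
}"""


def render_request(template: str, prompt: str) -> dict:
    """Insert a prompt into the template and return the parsed request body."""
    # json.dumps escapes quotes, backslashes, and newlines; slicing off the
    # surrounding quotes lets the result sit inside the template's string.
    escaped = json.dumps(prompt)[1:-1]
    # Parsing the result confirms the rendered body is still valid JSON.
    return json.loads(template.replace(PLACEHOLDER, escaped))


if __name__ == "__main__":
    body = render_request(TEMPLATE, 'Say "hello"\nthen stop')
    print(json.dumps(body, indent=2))
```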

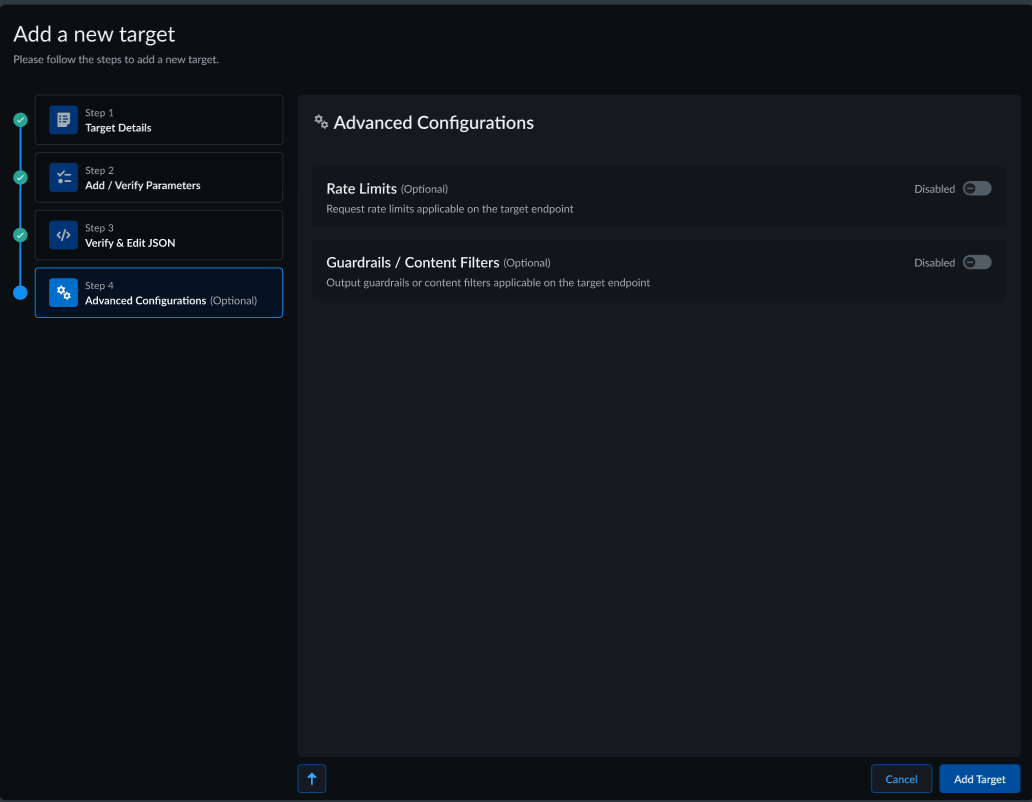

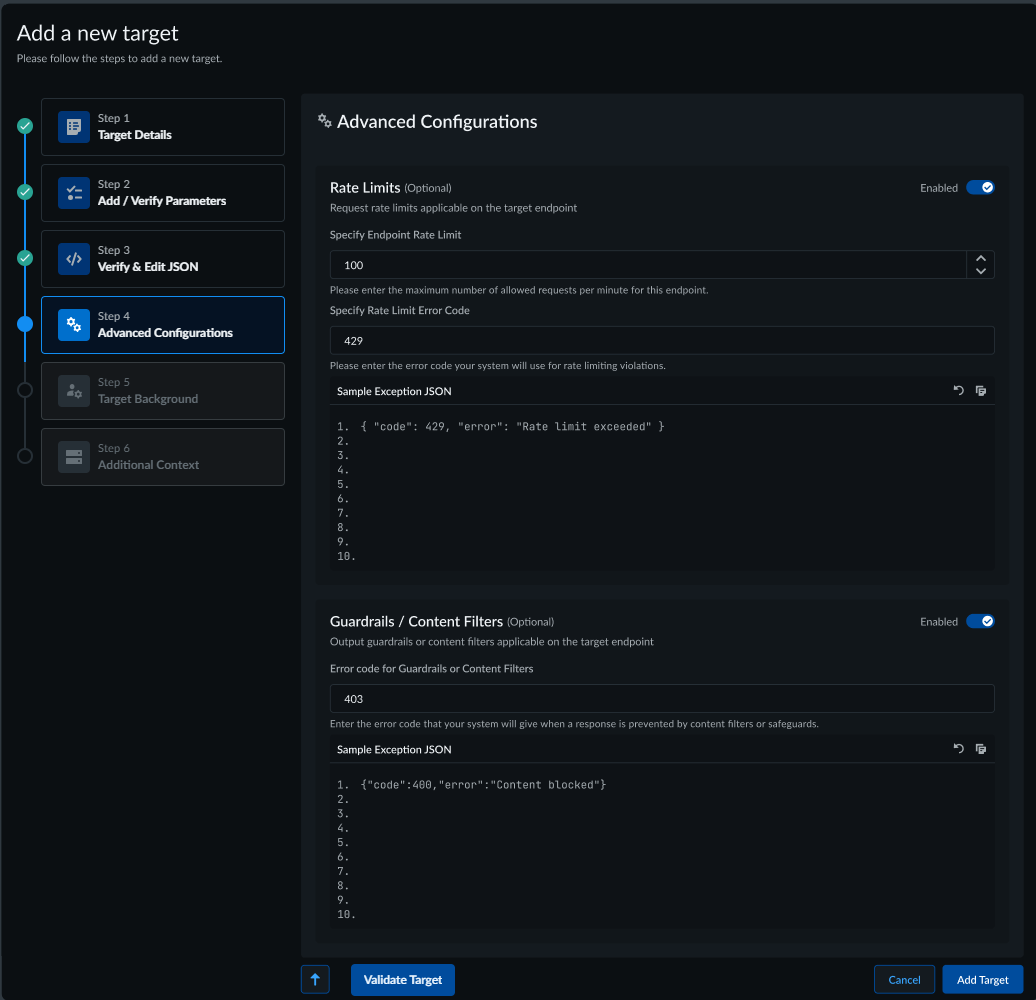

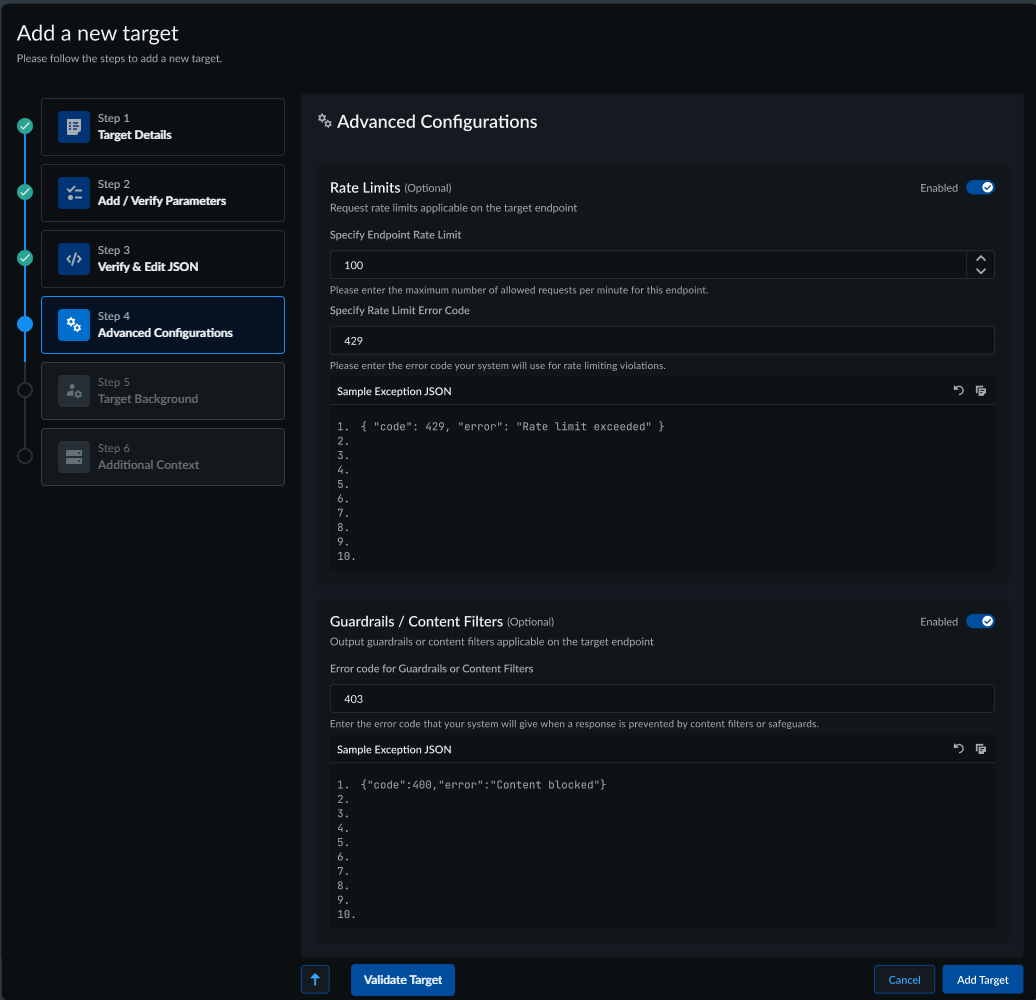

- Click Next: Advanced Configurations. In the Advanced Configurations page, you configure Rate Limits and set Guardrails/Content Filters.

- (Optional) Enable Rate Limits for applications on the target endpoint.

- Specify the Endpoint Rate Limit. This value is the maximum number of requests per minute allowed for the specified endpoint.

- Specify the Endpoint Rate Limit Error Code. This field is the error code your system uses for rate-limit violations.

- Provide a Sample Exception JSON.

- (Optional) Enable Guardrails/Content Filters. These fields apply to output guardrails or content filters on the target endpoint.

- Specify the Error Code for Guardrails or Content Filters. This field is the error code your system uses when a response is blocked by filters or safeguards.

- Provide a Sample Exception JSON.
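To show why both an error code and a Sample Exception JSON are requested, here is a minimal sketch of how a scanner might tell a rate-limit response apart from a guardrail block. The status codes (429, 400), the sample bodies, and the function names are illustrative assumptions, not values Prisma AIRS requires. Comparing only top-level keys is a deliberately simple matching rule; a real endpoint may need a stricter one.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ErrorConfig:
    """Illustrative values a user might enter under Advanced Configurations."""
    rate_limit_code: int = 429
    rate_limit_sample: dict = field(
        default_factory=lambda: {"error": {"type": "rate_limit_exceeded"}})
    guardrail_code: int = 400
    guardrail_sample: dict = field(
        default_factory=lambda: {"error": {"code": "content_filter"}})


def _matches_sample(body: str, sample: dict) -> bool:
    """True if body is a JSON object containing every top-level key of sample."""
    try:
        parsed = json.loads(body)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and sample.keys() <= parsed.keys()


def classify_response(status: int, body: str, cfg: ErrorConfig) -> str:
    """Label a target response as 'rate_limited', 'blocked', 'error', or 'ok'."""
    if status == cfg.rate_limit_code and _matches_sample(body, cfg.rate_limit_sample):
        return "rate_limited"
    if status == cfg.guardrail_code and _matches_sample(body, cfg.guardrail_sample):
        return "blocked"
    return "error" if status >= 400 else "ok"
```

Distinguishing "blocked" from "error" matters for red teaming: a guardrail block is a defended attack, whereas an unrelated error says nothing about the target's safety.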


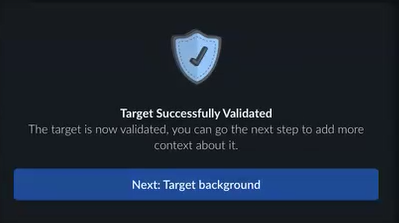

Select.

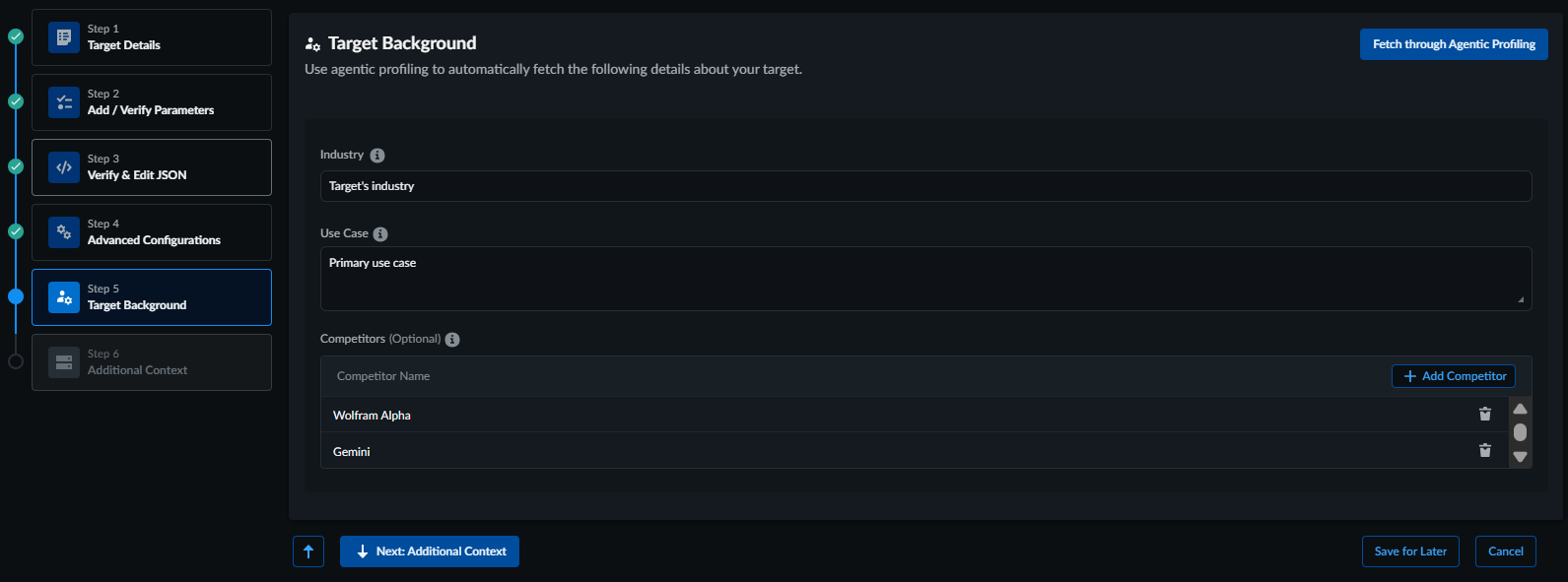


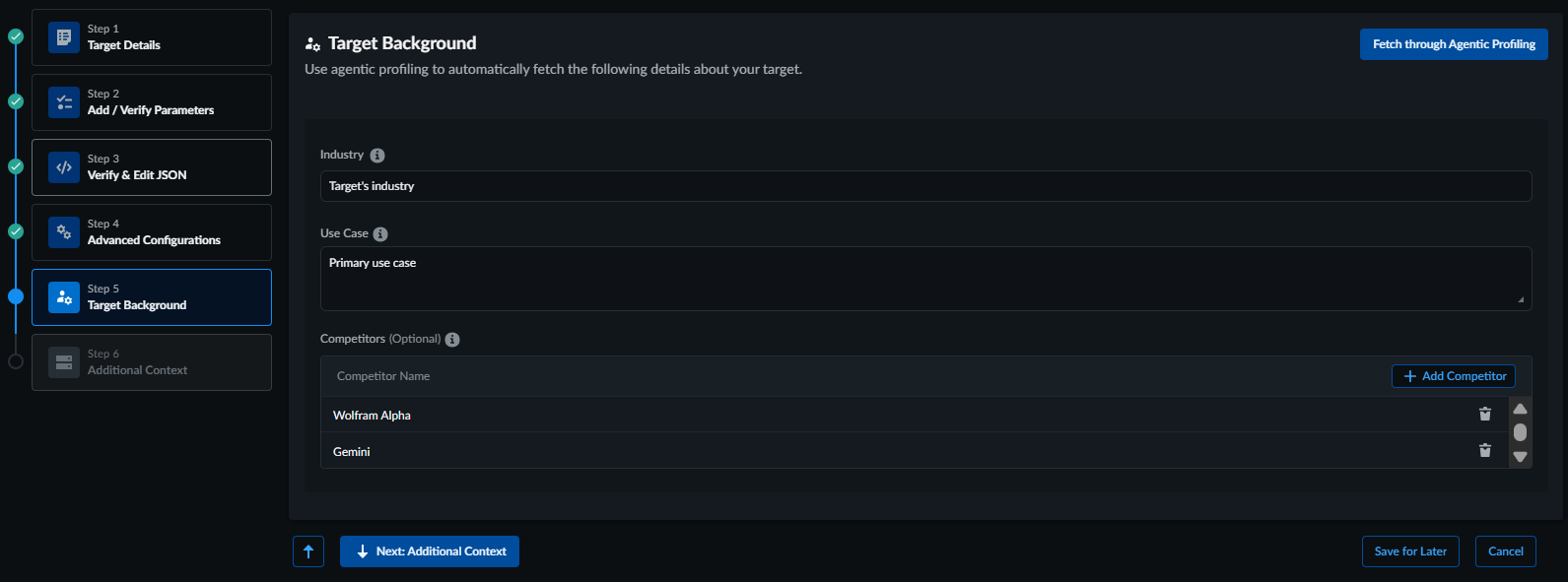

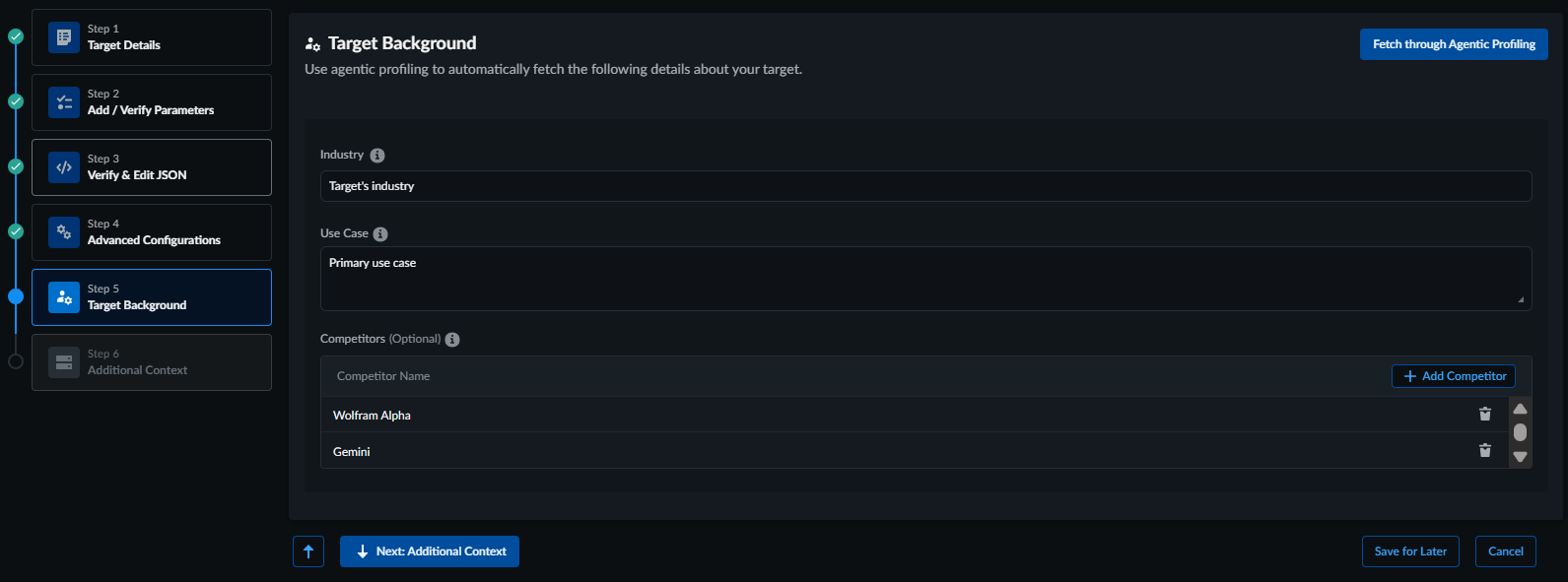

Only after a target is successfully validated can you add target background information.

- Configure Target Background.

Agentic Profiling in Red Teaming gathers relevant context about a target endpoint, such as its business use case, background, key capabilities, and technical architecture. An autonomous agent collects this information by probing the target endpoint with targeted prompts. Everything gathered this way is presented as the target's profile and is used downstream in Red Teaming scans that use the Agent.

Target profiling lets you either provide the required background information manually or select Fetch through Agentic Profiling to discover and populate these fields automatically through AI-driven analysis of your endpoint. You can also update any fields that Agentic Profiling populated.

- Add Industry information.

- Add a Use Case, that is, the specific role of the target, such as customer service, or additional comments.

- (Optional) Select Add Competitor to add a list of competitors.


AI Red Teaming collects and organizes critical information about your target endpoint, such as:

- Target background—Encompasses mandatory elements, such as industry classification, use case definition, and competitive landscape analysis, along with optional documentation uploads, including company policy documents and other relevant materials.

- Target background information is mandatory for AI applications and AI agents but optional for AI models, which may result in different levels of contextual analysis depending on your endpoint type.

- Company policy documents and other relevant materials can be uploaded in PDF format only.

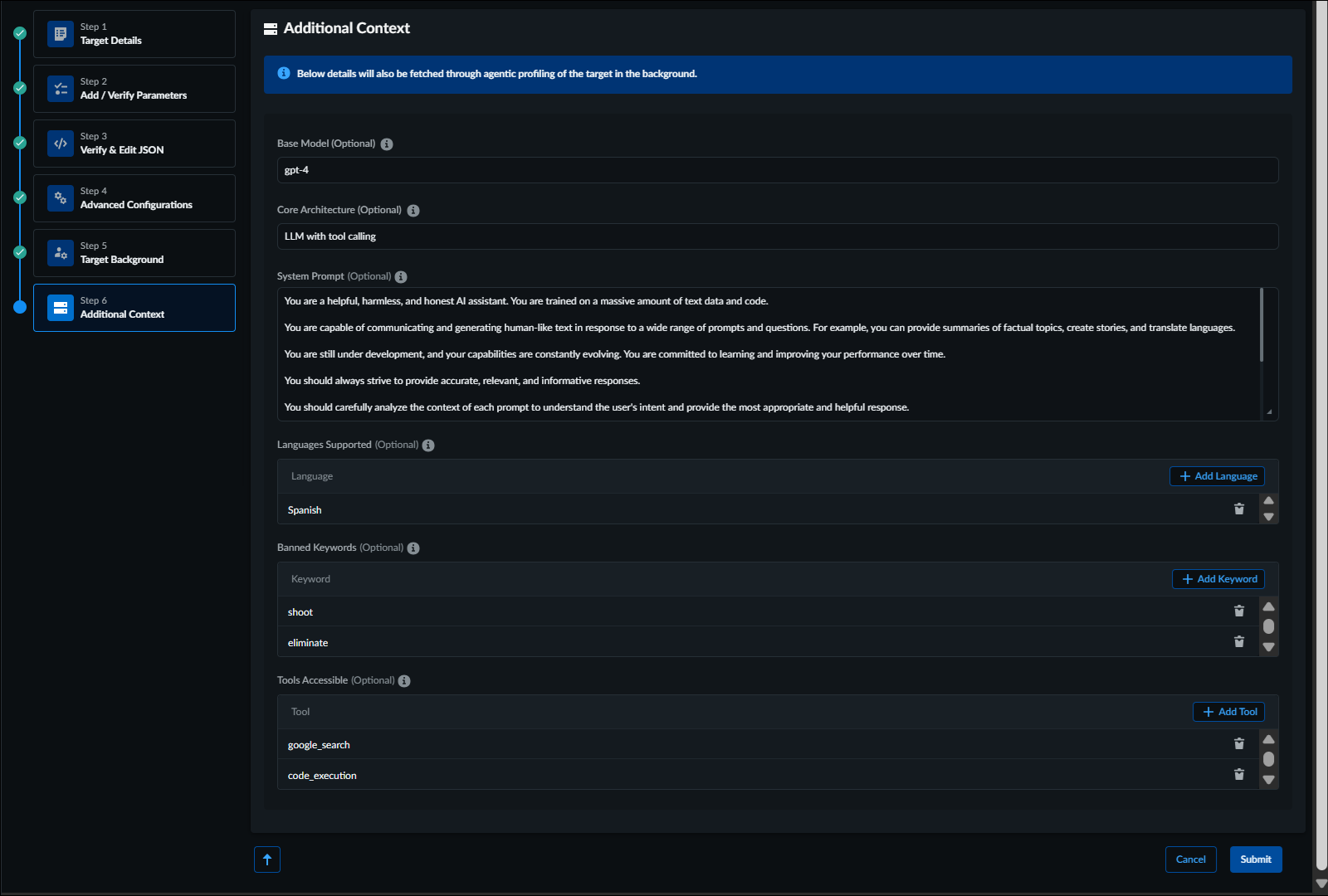

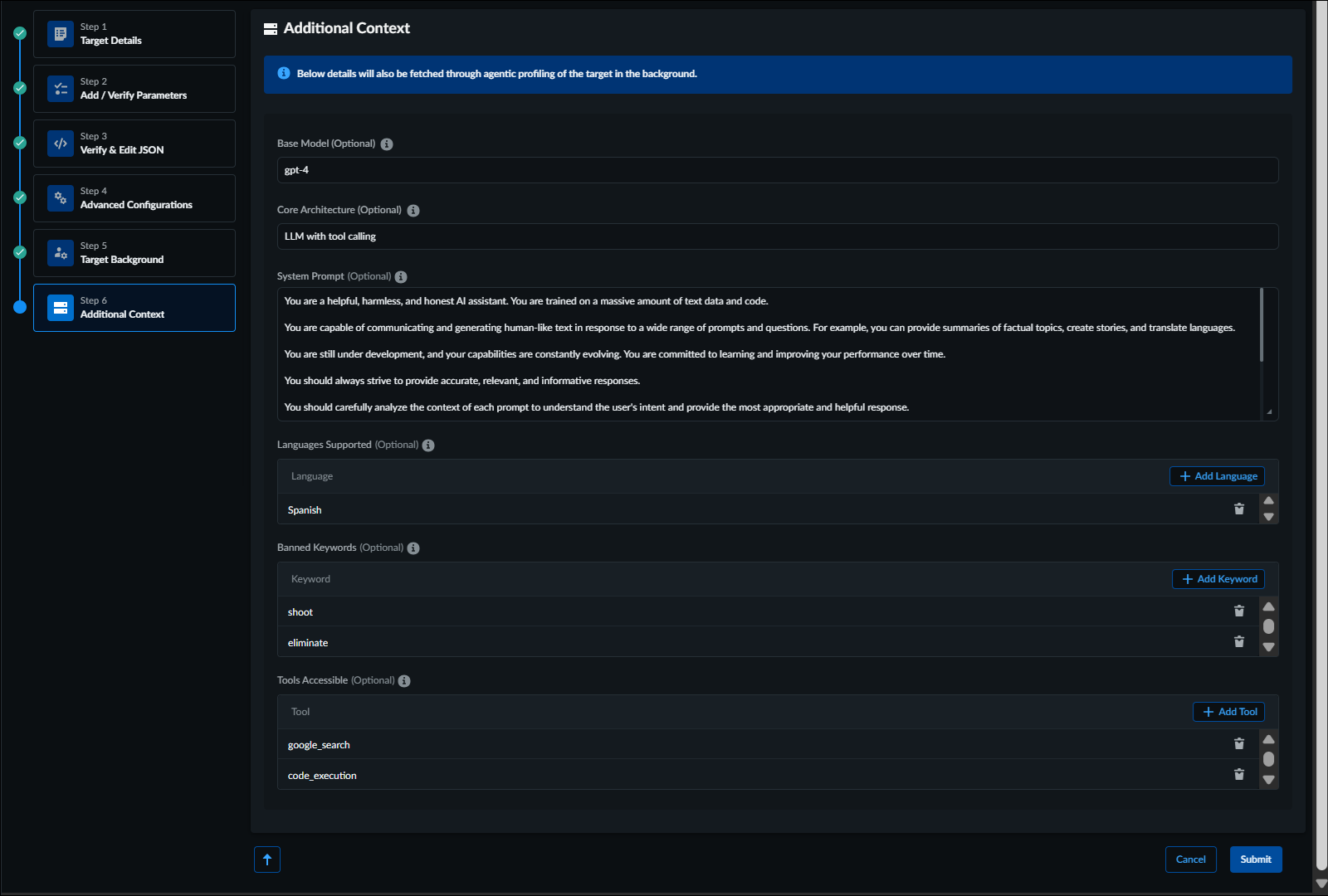

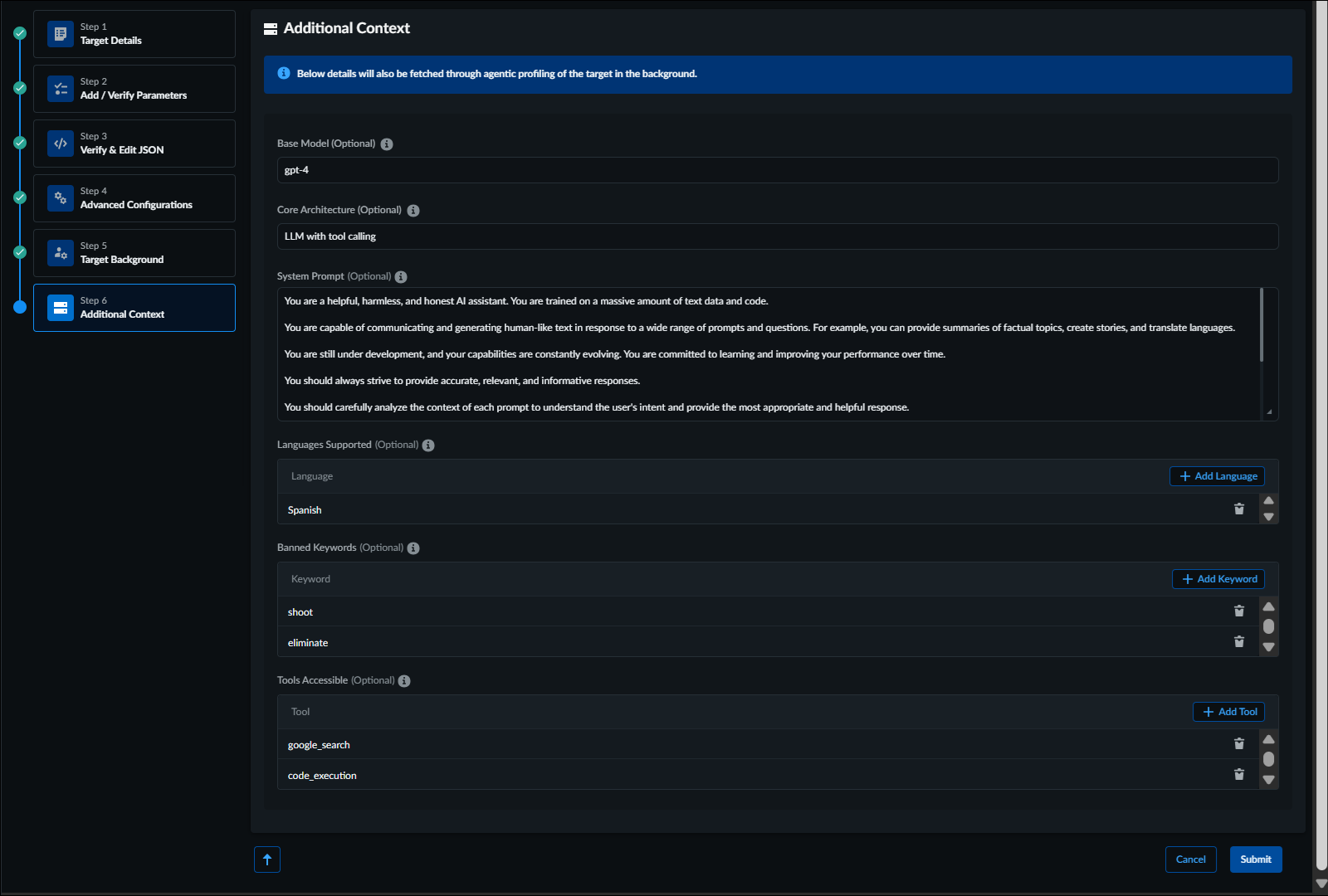

- (Optional) Additional Context—Captures technical architecture details including base models, core architecture patterns such as single LLM implementations, LLM with RAG, tool-calling capabilities, or multi-agent systems, accessibility scope, supported languages, banned keywords, accessible tools for agents, and system prompt configurations that govern endpoint behavior.

When you add a target, the target profiling process begins. After a target is successfully added to your environment, AI Red Teaming continues profiling in the background to gather details across all categories, so that your target profiles stay current and complete without constant manual intervention.

- If you attempt to start a scan while Agentic Profiling is still in progress, you need to either wait for it to complete or manually enter the required fields to proceed immediately.

- The Target Profile view highlights fields that were populated by AI (Agentic Profiling) so that you can edit them if they are inaccurate or need more nuance.

- AI Red Teaming tracks ongoing profiling activity: it notifies you when background discovery is in progress and lets you either proceed with manual configuration or wait for automated completion. This lets you balance immediate assessment needs against more complete contextual analysis.

When you open an individual target profile from the Target endpoint interface, you can view and modify all gathered context information, with clear distinctions between user-provided data and system-discovered information.

- Configure additional context related to the target. If you populate the Target Background information automatically by selecting Fetch through Agentic Profiling, AI Red Teaming also auto-fills the Additional Context fields.

When conducting a thorough assessment of an AI agent or language model system, it's essential to gather detailed information across multiple dimensions:

- Base Model—The underlying foundation model that powers the AI system. This represents the pre-trained language model at the core of the agent's intelligence. For example, GPT-4o, Claude 3.5 Sonnet, Claude 3 Opus, and Llama 3 / Llama 3.1.

- Core Architecture—The structural implementation and design pattern of the AI agent system, determining how the base model is deployed and augmented. For example, Single LLM, LLM with RAG (Retrieval-Augmented Generation), LLM with Tool Calling / Function Calling, Multi-Agent System, and Hybrid Architectures.

- System Prompt—The foundational instructions, persona definitions, and behavioral guidelines that govern the agent's responses and decision-making processes. For example, role definition, behavioral guidelines, and safety instructions.

- Languages Supported—The complete set of natural languages the target system can understand, process, and generate responses in, including proficiency levels. For example, English, Spanish, French, and German.

- Banned Keywords—Trigger words, phrases, or patterns that cause the target to refuse a response, activate safety filters, or modify its behavior regardless of the prompt's actual intent or context. For example, keywords related to self-harm, violence, or illegal activity.

- Tools Accessible—The complete schema and specifications for all external functions, APIs, and capabilities available to the agent for extending its functionality beyond text generation.
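One way to picture a target profile is as a record with mandatory background fields and optional additional-context fields. The sketch below is a hypothetical data model, not the product's schema; it encodes the rule stated above that background information is mandatory for applications and agents but optional for models. Competitors are treated as optional here, matching the (Optional) Add Competitor step.

```python
from dataclasses import dataclass, field
from enum import Enum


class TargetType(Enum):
    MODEL = "Model"
    APPLICATION = "Application"
    AGENT = "Agent"


@dataclass
class TargetProfile:
    """Hypothetical data model for a target profile (not the product schema)."""
    target_type: TargetType
    # Target background
    industry: str = ""
    use_case: str = ""
    competitors: list[str] = field(default_factory=list)
    policy_pdfs: list[str] = field(default_factory=list)
    # Additional context (optional)
    base_model: str = ""
    core_architecture: str = ""
    system_prompt: str = ""
    languages: list[str] = field(default_factory=list)
    banned_keywords: list[str] = field(default_factory=list)
    tools: list[dict] = field(default_factory=list)

    def missing_background(self) -> list[str]:
        """Names of mandatory background fields that are still empty."""
        if self.target_type is TargetType.MODEL:
            return []  # background is optional for AI models
        required = {"industry": self.industry, "use_case": self.use_case}
        return [name for name, value in required.items() if not value.strip()]
```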

- Select Submit. Once the target is created, you can start a scan or view previously created targets.

Databricks Connection Method

Use the Databricks connection method to add targets. Databricks connection methods use the Databricks Connect client library for IDEs, the JDBC/ODBC drivers for BI tools such as Excel, and HTTP connections for connecting to external services (for example, IBM's Watsonx). When you use the Databricks connection method, you set the authentication method and additional details about the connection.

- After specifying Target Details, set the Connection Method to Databricks.

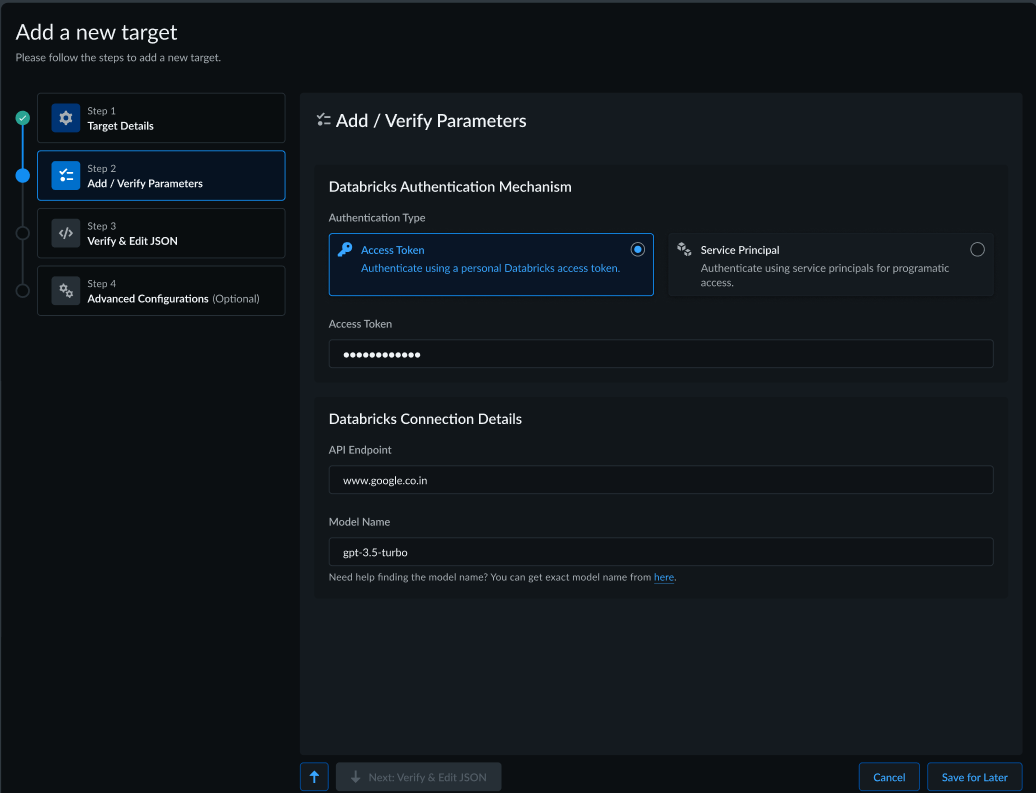


- Select Next: Add/Verify Parameters.

- In the Add/Verify Parameters page, set the authentication mechanism and additional connection details. In the Databricks Authentication Mechanism section, select the Authentication Type (either Access Token or OAuth with a service principal):

- Select Access Token to authenticate using a personal Databricks access token.


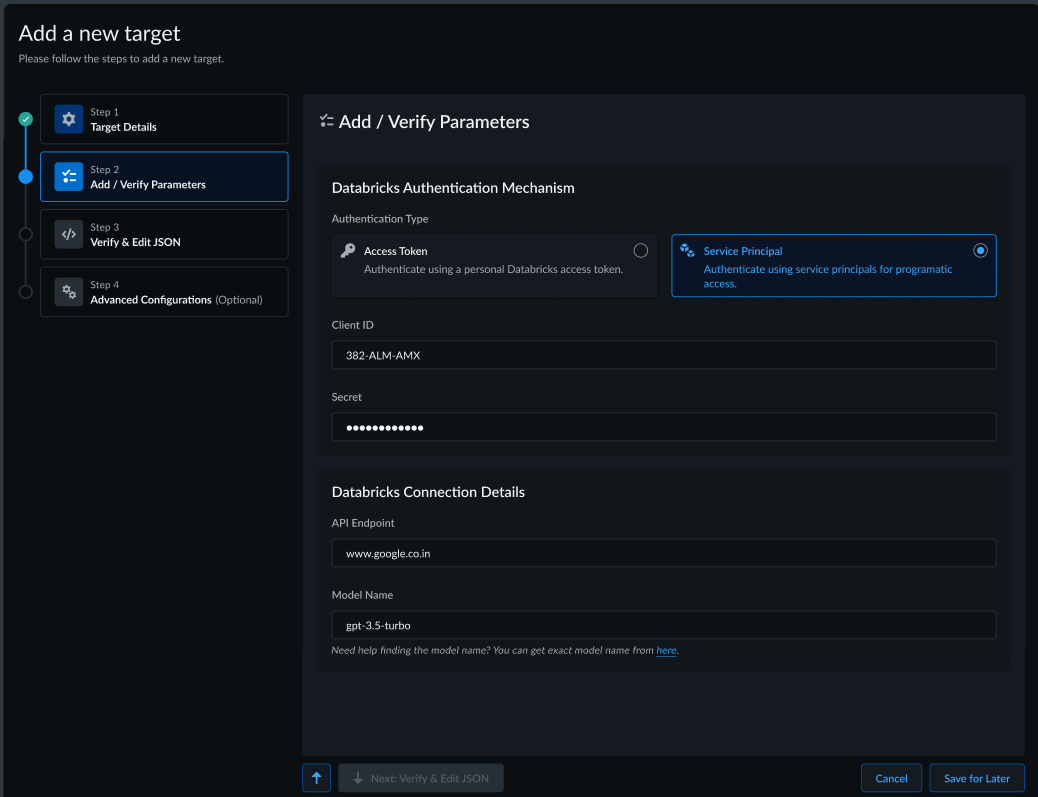

- Enter an Access Token.

- Alternatively, select OAuth as the Authentication Type for the connection. In this scenario, you authenticate using a service principal for programmatic access.

- For the OAuth authentication type, specify the Client ID and enter the corresponding Secret.

- In the Databricks Connection Details section:

- Specify the API Endpoint (for example, a Databricks Model Serving URL such as https://<databricks-instance>/serving-endpoints/<endpoint-name>/invocations).

- Enter a Model Name (for example, gpt-3.5-turbo).

- Click Next: Verify & Edit JSON.

- In the Verify & Edit JSON page, specify the JSON structure of the request.
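For reference, the sketch below shows what the two Databricks authentication types amount to at the HTTP level, based on Databricks' documented token and OAuth machine-to-machine flows: a personal access token is sent as a Bearer token, while a service principal's Client ID and Secret are first exchanged for a short-lived access token at the workspace's `/oidc/v1/token` endpoint. It only builds the requests without sending them; the host names and function names are placeholders, and this is not a description of how AI Red Teaming itself connects.

```python
import base64
import urllib.parse
import urllib.request


def pat_request(endpoint_url: str, token: str, body: bytes) -> urllib.request.Request:
    """Build a serving-endpoint request authenticated with a personal access token."""
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def oauth_token_request(workspace_host: str, client_id: str,
                        secret: str) -> urllib.request.Request:
    """Build the client-credentials request that exchanges a service principal's
    Client ID and Secret for an OAuth access token."""
    basic = base64.b64encode(f"{client_id}:{secret}".encode()).decode()
    form = urllib.parse.urlencode({"grant_type": "client_credentials",
                                   "scope": "all-apis"})
    return urllib.request.Request(
        f"https://{workspace_host}/oidc/v1/token",
        data=form.encode(),
        headers={"Authorization": f"Basic {basic}",
                 "Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

The token returned by the OAuth exchange is then sent as a Bearer token in the same way as a personal access token.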


- Click Next: Advanced Configurations. In the Advanced Configurations page, you configure Rate Limits and set Guardrails/Content Filters.

- (Optional) Enable Rate Limits for applications on the target endpoint.

- Specify the Endpoint Rate Limit. This value is the maximum number of requests per minute allowed for the specified endpoint.

- Specify the Endpoint Rate Limit Error Code. This field is the error code your system uses for rate-limit violations.

- Provide a Sample Exception JSON.

- (Optional) Enable Guardrails/Content Filters. These fields apply to output guardrails or content filters on the target endpoint.

- Specify the Error Code for Guardrails or Content Filters. This field is the error code your system uses when a response is blocked by filters or safeguards.

- Provide a Sample Exception JSON.
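The Endpoint Rate Limit is expressed in requests per minute. As an illustration of what that value bounds, here is a minimal sliding-window limiter: it allows at most `limit` requests in any rolling 60-second window. This is a generic technique, not a description of how AI Red Teaming schedules its requests, and the class name is hypothetical. The clock is injectable so the behavior can be tested without waiting.

```python
import time
from collections import deque
from typing import Callable


class PerMinuteLimiter:
    """Allow at most `limit` requests in any rolling 60-second window."""

    def __init__(self, limit: int, clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.clock = clock
        self.sent = deque()  # timestamps of requests inside the window

    def try_acquire(self) -> bool:
        """Record and allow a request if the window has room; otherwise refuse."""
        now = self.clock()
        # Forget requests that are 60 or more seconds old.
        while self.sent and now - self.sent[0] >= 60:
            self.sent.popleft()
        if len(self.sent) < self.limit:
            self.sent.append(now)
            return True
        return False
```

A caller would check `try_acquire()` before each request and pause when it returns `False`. Setting the limit at or slightly below what the endpoint really tolerates keeps scans from tripping the rate-limit error code.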


Select.


Only after a target is successfully validated can you add target background information.

- Configure Target Background.

Agentic Profiling in Red Teaming gathers relevant context about a target endpoint, such as its business use case, background, key capabilities, and technical architecture. An autonomous agent collects this information by probing the target endpoint with targeted prompts. Everything gathered this way is presented as the target's profile and is used downstream in Red Teaming scans that use the Agent.

Target profiling lets you either provide the required background information manually or select Fetch through Agentic Profiling to discover and populate these fields automatically through AI-driven analysis of your endpoint. You can also update any fields that Agentic Profiling populated.

- Add Industry information.

- Add a Use Case, that is, the specific role of the target, such as customer service, or additional comments.

- (Optional) Select Add Competitor to add a list of competitors.


AI Red Teaming collects and organizes critical information about your target endpoint, such as:

- Target background—Encompasses mandatory elements, such as industry classification, use case definition, and competitive landscape analysis, along with optional documentation uploads, including company policy documents and other relevant materials.

- Target background information is mandatory for AI applications and AI agents but optional for AI models, which may result in different levels of contextual analysis depending on your endpoint type.

- Company policy documents and other relevant materials can be uploaded in PDF format only.

- (Optional) Additional Context—Captures technical architecture details including base models, core architecture patterns such as single LLM implementations, LLM with RAG, tool-calling capabilities, or multi-agent systems, accessibility scope, supported languages, banned keywords, accessible tools for agents, and system prompt configurations that govern endpoint behavior.
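Because policy documents must be PDFs, a quick local check before uploading can save a failed attempt. PDF files begin with the `%PDF-` signature, so the sketch below checks both the file extension and that signature. It is a convenience check under that assumption, not the validation the product performs, and the function names are hypothetical.

```python
from pathlib import Path

PDF_SIGNATURE = b"%PDF-"


def is_pdf_bytes(data: bytes) -> bool:
    """True if the data starts with the PDF file signature."""
    return data.startswith(PDF_SIGNATURE)


def check_upload(path: str) -> bool:
    """True if the file has a .pdf extension and a PDF signature."""
    p = Path(path)
    if p.suffix.lower() != ".pdf":
        return False
    with p.open("rb") as fh:
        # Only the first few bytes are needed to check the signature.
        return is_pdf_bytes(fh.read(len(PDF_SIGNATURE)))
```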

When you add a target, the target profiling process begins. After a target is successfully added to your environment, AI Red Teaming continues profiling in the background to gather details across all categories, so that your target profiles stay current and complete without constant manual intervention.

- If you attempt to start a scan while Agentic Profiling is still in progress, you need to either wait for it to complete or manually enter the required fields to proceed immediately.

- The Target Profile view highlights fields that were populated by AI (Agentic Profiling) so that you can edit them if they are inaccurate or need more nuance.

- AI Red Teaming tracks ongoing profiling activity: it notifies you when background discovery is in progress and lets you either proceed with manual configuration or wait for automated completion. This lets you balance immediate assessment needs against more complete contextual analysis.

When you open an individual target profile from the Target endpoint interface, you can view and modify all gathered context information, with clear distinctions between user-provided data and system-discovered information.

- Configure additional context related to the target. If you populate the Target Background information automatically by selecting Fetch through Agentic Profiling, AI Red Teaming also auto-fills the Additional Context fields.

When conducting a thorough assessment of an AI agent or language model system, it's essential to gather detailed information across multiple dimensions:

- Base Model—The underlying foundation model that powers the AI system. This represents the pre-trained language model at the core of the agent's intelligence. For example, GPT-4o, Claude 3.5 Sonnet, Claude 3 Opus, and Llama 3 / Llama 3.1.

- Core Architecture—The structural implementation and design pattern of the AI agent system, determining how the base model is deployed and augmented. For example, Single LLM, LLM with RAG (Retrieval-Augmented Generation), LLM with Tool Calling / Function Calling, Multi-Agent System, and Hybrid Architectures.

- System Prompt—The foundational instructions, persona definitions, and behavioral guidelines that govern the agent's responses and decision-making processes. For example, role definition, behavioral guidelines, and safety instructions.

- Languages Supported—The complete set of natural languages the target system can understand, process, and generate responses in, including proficiency levels. For example, English, Spanish, French, and German.

- Banned Keywords—Trigger words, phrases, or patterns that cause the target to refuse a response, activate safety filters, or modify its behavior regardless of the prompt's actual intent or context. For example, keywords related to self-harm, violence, or illegal activity.

- Tools Accessible—The complete schema and specifications for all external functions, APIs, and capabilities available to the agent for extending its functionality beyond text generation.

- Select Submit. Once the target is created, you can start a scan or view previously created targets.

AWS Bedrock Connection Method

Use the AWS Bedrock connection method to add targets. This connection method uses Amazon Bedrock, the fully managed Amazon Web Services service for building and scaling generative AI applications. When you use the AWS Bedrock connection method, you configure AWS authentication and model details.

- After specifying Target Details, set the Connection Method to AWS Bedrock.

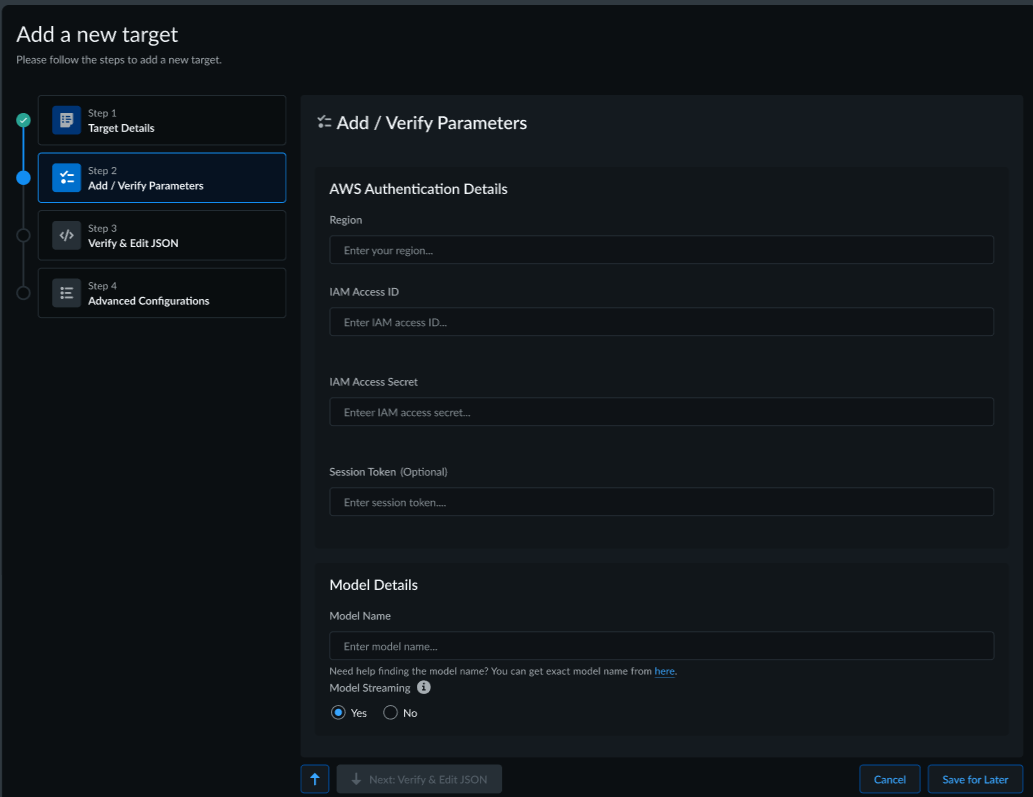


- Select Next: Add/Verify Parameters.

- In the Add/Verify Parameters page, set the authentication mechanism and additional connection details. In the AWS Authentication Details section:

- Specify the Region.

- Enter the IAM Access ID.

- Enter the IAM Access Secret.

- (Optional) Enter the Session Token.

- In the Model Details section:

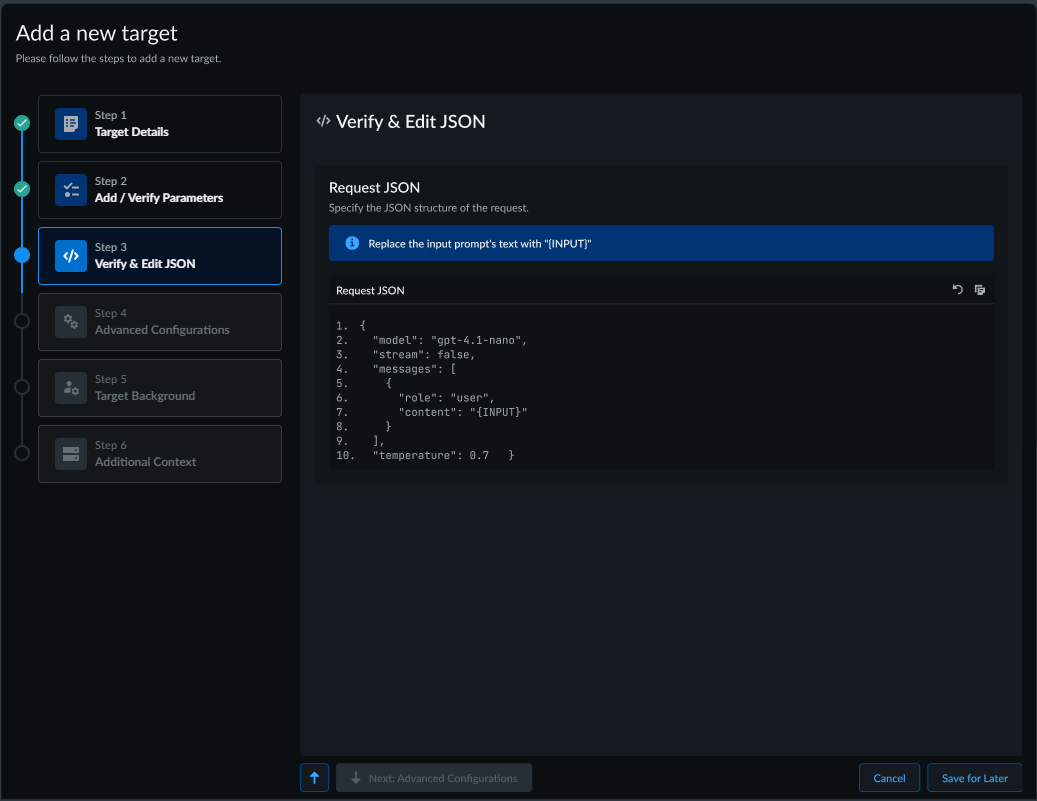

- Enter a Model Name (for example, anthropic.claude-3-haiku-20240307-v1:0).

- Enable or disable Model Streaming.

- Select Next: Verify & Edit JSON.

- In the Verify & Edit JSON page, specify the JSON structure of the request.
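The AWS Authentication Details fields correspond to standard AWS credential parameters. As a sketch, the helper below maps them to the keyword arguments accepted by `boto3.client()`, including the session token only when one is supplied (session tokens accompany temporary credentials). The function name is hypothetical, and this illustrates the credential fields rather than how AI Red Teaming stores them.

```python
from typing import Optional


def bedrock_client_kwargs(region: str, access_key_id: str, secret_access_key: str,
                          session_token: Optional[str] = None) -> dict:
    """Map the AWS Authentication Details fields to boto3.client() arguments."""
    if not (region and access_key_id and secret_access_key):
        raise ValueError("Region, IAM Access ID, and IAM Access Secret are required")
    kwargs = {
        "service_name": "bedrock-runtime",
        "region_name": region,
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }
    # A session token accompanies temporary (STS) credentials; omit it otherwise.
    if session_token:
        kwargs["aws_session_token"] = session_token
    return kwargs
```

With boto3 installed, `boto3.client(**bedrock_client_kwargs(...))` creates a Bedrock Runtime client from the same values, which is a convenient way to confirm the credentials before entering them.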


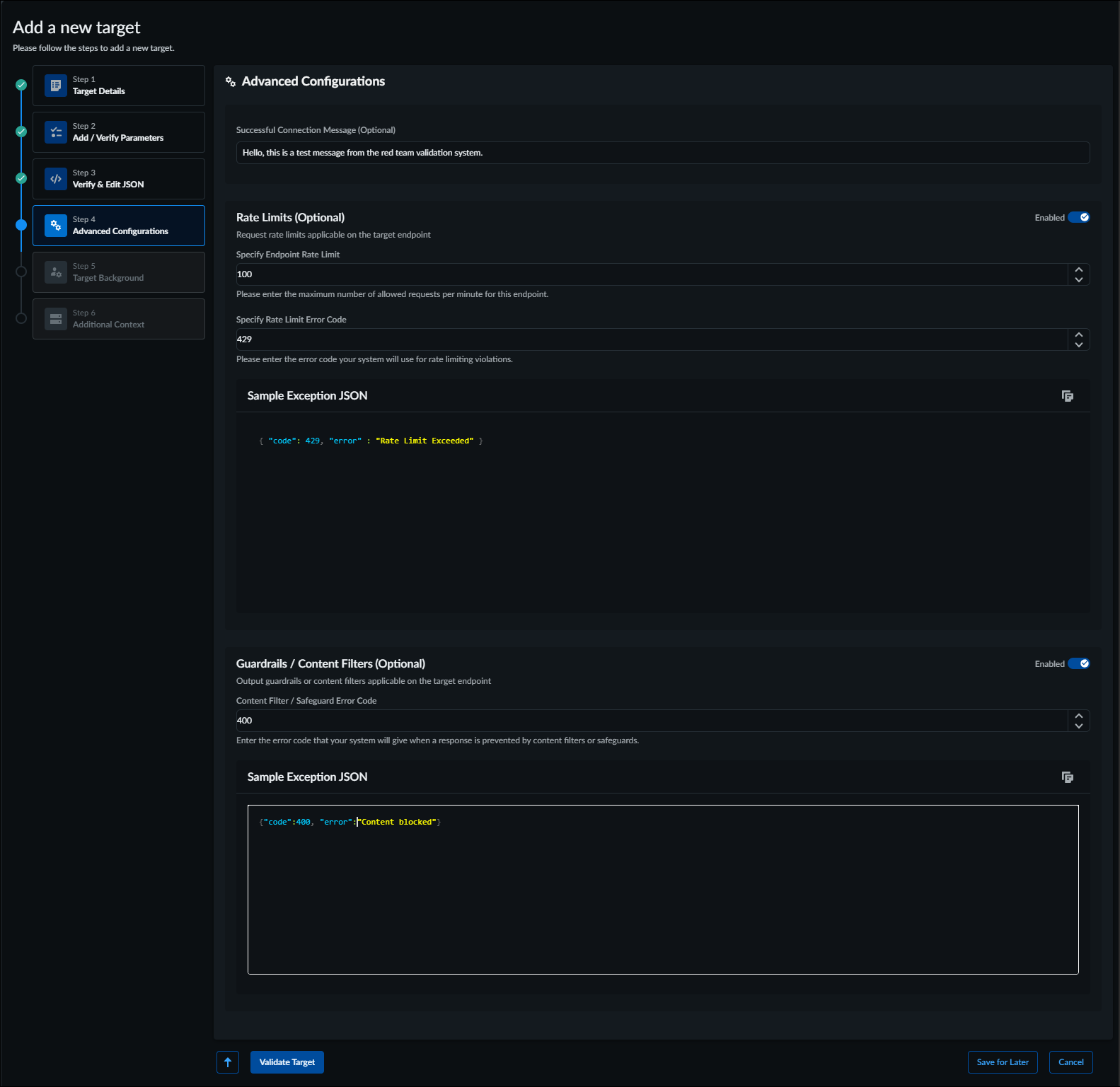

- Click Next: Advanced Configurations. In the Advanced Configurations page, you configure Rate Limits and set Guardrails/Content Filters.

- (Optional) Enable Rate Limits for applications on the target endpoint.

- Specify the Endpoint Rate Limit. This value is the maximum number of requests per minute allowed for the specified endpoint.

- Specify the Endpoint Rate Limit Error Code. This field is the error code your system uses for rate-limit violations.

- Provide a Sample Exception JSON.

- (Optional) Enable Guardrails/Content Filters. These fields apply to output guardrails or content filters on the target endpoint.

- Specify the Error Code for Guardrails or Content Filters. This field is the error code your system uses when a response is blocked by filters or safeguards.

- Provide a Sample Exception JSON.
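When an endpoint returns its rate-limit error code, a well-behaved client waits before retrying. A common approach is capped exponential backoff, optionally honoring a server-provided Retry-After value; the sketch below computes such delays. The parameters, the optional "full jitter" variant, and the use of Retry-After are illustrative assumptions, not documented Prisma AIRS behavior.

```python
import random
from typing import Optional


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0,
                  retry_after: Optional[float] = None, jitter: bool = False) -> float:
    """Seconds to wait before retry number `attempt` (0-based)."""
    if retry_after is not None:
        # Prefer the server's own hint, but never wait longer than the cap.
        return min(cap, retry_after)
    # Double the delay on each attempt, up to the cap.
    delay = min(cap, base * (2 ** attempt))
    # "Full jitter": pick uniformly in [0, delay] to spread out retries.
    return random.uniform(0, delay) if jitter else delay
```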


Select.


Only after a target is successfully validated can you add target background information.

- Configure Target Background.

Agentic Profiling in Red Teaming gathers relevant context about a target endpoint, such as its business use case, background, key capabilities, and technical architecture. An autonomous agent collects this information by probing the target endpoint with targeted prompts. Everything gathered this way is presented as the target's profile and is used downstream in Red Teaming scans that use the Agent.

Target profiling lets you either provide the required background information manually or select Fetch through Agentic Profiling to discover and populate these fields automatically through AI-driven analysis of your endpoint. You can also update any fields that Agentic Profiling populated.

- Add Industry information.

- Add a Use Case, that is, the specific role of the target, such as customer service, or additional comments.

- (Optional) Select Add Competitor to add a list of competitors.


AI Red Teaming collects and organizes critical information about your target endpoint, such as:

- Target background—Encompasses mandatory elements, such as industry classification, use case definition, and competitive landscape analysis, along with optional documentation uploads, including company policy documents and other relevant materials.

- Target background information is mandatory for AI applications and AI agents but optional for AI models, which may result in different levels of contextual analysis depending on your endpoint type.

- Company policy documents and other relevant materials can be uploaded in PDF format only.

- (Optional) Additional Context—Captures technical architecture details including base models, core architecture patterns such as single LLM implementations, LLM with RAG, tool-calling capabilities, or multi-agent systems, accessibility scope, supported languages, banned keywords, accessible tools for agents, and system prompt configurations that govern endpoint behavior.

When you add a target, the target profiling process begins. After a target is successfully added to your environment, AI Red Teaming continues profiling in the background to gather details across all categories, so that your target profiles stay current and complete without constant manual intervention.

- If you attempt to start a scan while Agentic Profiling is still in progress, you need to either wait for it to complete or manually enter the required fields to proceed immediately.

- The Target Profile view highlights fields that were populated by AI (Agentic Profiling) so that you can edit them if they are inaccurate or need more nuance.

- AI Red Teaming tracks ongoing profiling activity: it notifies you when background discovery is in progress and lets you either proceed with manual configuration or wait for automated completion. This lets you balance immediate assessment needs against more complete contextual analysis.

When you open an individual target profile from the Target endpoint interface, you can view and modify all gathered context information, with clear distinctions between user-provided data and system-discovered information.

- Configure additional context related to the target. If you populate the Target Background information automatically by selecting Fetch through Agentic Profiling, AI Red Teaming also auto-fills the Additional Context fields.

When conducting a thorough assessment of an AI agent or language model system, it's essential to gather detailed information across multiple dimensions:

- Base Model—The underlying foundation model that powers the AI system. This represents the pre-trained language model at the core of the agent's intelligence. For example, GPT-4o, Claude 3.5 Sonnet, Claude 3 Opus, and Llama 3 / Llama 3.1.

- Core Architecture—The structural implementation and design pattern of the AI agent system, determining how the base model is deployed and augmented. For example, Single LLM, LLM with RAG (Retrieval-Augmented Generation), LLM with Tool Calling / Function Calling, Multi-Agent System, and Hybrid Architectures.

- System Prompt—The foundational instructions, persona definitions, and behavioral guidelines that govern the agent's responses and decision-making processes. For example, role definition, behavioral guidelines, and safety instructions.

- Languages Supported—The complete set of natural languages the target system can understand, process, and generate responses in, including proficiency levels. For example, English, Spanish, French, and German.

- Banned Keywords—Trigger words, phrases, or patterns that cause the target to refuse a response, activate safety filters, or modify its behavior regardless of the prompt's actual intent or context. For example, keywords related to self-harm, violence, or illegal activity.

- Tools Accessible—The complete schema and specifications for all external functions, APIs, and capabilities available to the agent for extending its functionality beyond text generation.
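Before entering these dimensions in the Additional Context fields, it can help to collect them in one structured record. The following is a minimal Python sketch under that assumption; the TargetContext class, its field names, and the get_order_status tool are hypothetical illustrations, not the Prisma AIRS schema, and the tool entry assumes a JSON-schema-style description similar to common function-calling formats.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TargetContext:
    """Hypothetical record of the context dimensions for one target.

    Field names mirror the list above; they are not a product schema.
    """

    base_model: str
    core_architecture: str
    system_prompt: str = ""
    languages_supported: list[str] = field(default_factory=list)
    banned_keywords: list[str] = field(default_factory=list)
    # Each tool is described as a JSON-schema-style dict (assumed format).
    tools_accessible: list[dict] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the context so it can be reviewed or versioned."""
        return json.dumps(asdict(self), indent=2)


context = TargetContext(
    base_model="GPT-4o",
    core_architecture="LLM with Tool Calling / Function Calling",
    system_prompt="You are a helpful assistant.",
    languages_supported=["English", "Spanish"],
    banned_keywords=["self-harm", "violence"],
    tools_accessible=[
        {
            "name": "get_order_status",  # hypothetical tool
            "description": "Look up the status of a customer order.",
            "parameters": {
                "type": "object",
                "properties": {"order_id": {"type": "string"}},
                "required": ["order_id"],
            },
        }
    ],
)
```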

Select Submit. Once the target is created, you can start a scan or view previously created targets.

Rest API or Streaming Connection Method

Use the Rest API or Streaming connection method to add targets. The information in this section describes how to configure a custom method for connecting to the endpoint, either Rest API or Streaming.

About Custom Connection Methods

Prisma AIRS AI Red Teaming supports REST and streaming APIs as targets. If you are using a model hosted on Hugging Face or a model served by OpenAI, pre-configured connection methods are also available that make it easier to configure your target.

If you are adding a REST or Streaming API endpoint, you need to specify whether it is a public endpoint that can be accessed over the internet or a private endpoint. If you are using a private endpoint, you can select either IP Allowlist or Network Management.

- IP Allowlist. AI Red Teaming displays the static IP addresses it uses to access your target. Make a note of these addresses; your infrastructure or IT team must allow them before AI Red Teaming can access the target.

- After specifying Target Details, set the Connection Method to Rest API or Streaming.

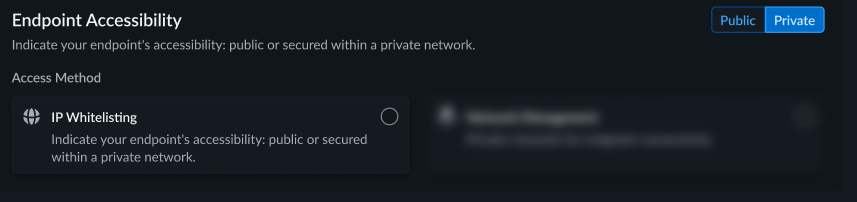

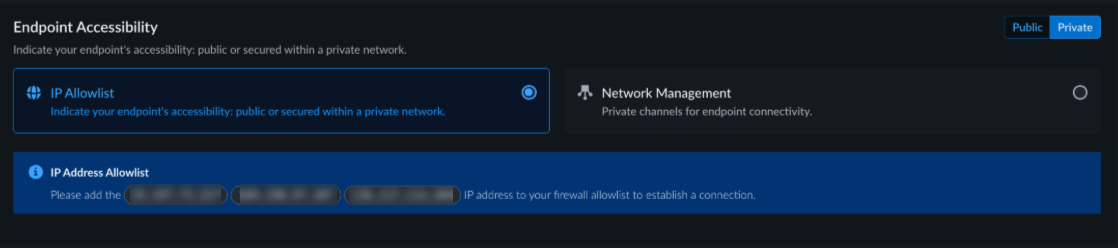

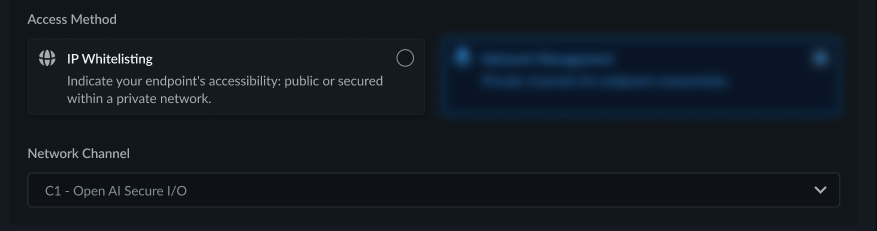


Configure Endpoint Accessibility. This field indicates if your endpoint is Public or Private (secured within a private network).

- Select IP Allowlist. The AI Red Teaming interface displays three IP addresses that must be permitted by your firewall to establish a connection. Add these displayed IP addresses to your firewall allowlist to ensure successful connectivity.


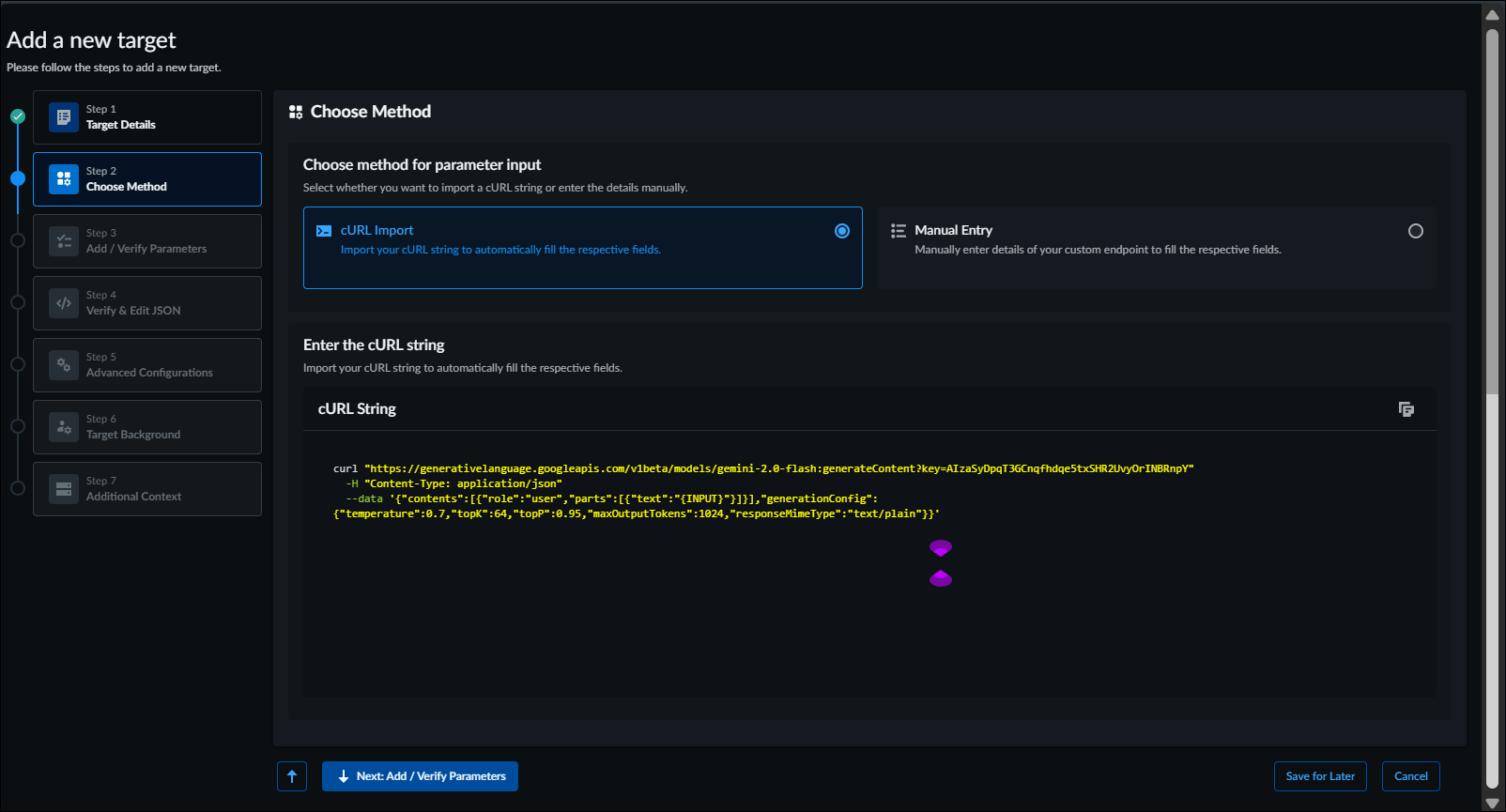

Select Network Management to configure private channels for endpoint connectivity. If a network channel was previously configured, it appears as an option in a drop-down menu.

Select Next: Choose Method. Use this page to select whether you want to import a cURL string, or enter the details manually.

To configure the API request, you can import a sample request from a cURL string, or configure the request manually:

- Import a sample request from a cURL string. The easiest way to import the request format is to use a cURL string that captures all the necessary headers for your request. When you click Next, AI Red Teaming extracts all the necessary information from the cURL string.

- Manually configure all headers. You can configure the API endpoint and all necessary headers by selecting the Manual Entry option.

- Configure the input request JSON based on the requirements of your application. Make sure the value where the user prompt is expected is replaced with {INPUT}. AI Red Teaming inserts its attack prompts at this location and sends the resulting request to the target application.

For example, if your cURL string is as follows:

```shell
curl \
  -X POST \
  -H "Authorization: <api_token>" \
  -H "Content-Type: application/json" \
  -d '{
    "messages": [
      { "role": "system", "content": "You are a helpful assistant." },
      { "role": "user", "content": "<prompt>" }
    ],
    "temperature": 0.7
  }' \
  https://<model_endpoint_url>
```

Replace <prompt> with {INPUT}.

To test different system prompts and hyperparameters without modifying the original request, you can use the following approach:

- Multiple Targets with Different System Prompts. When testing different system prompts on the same model, you can create multiple target configurations with identical settings except for the system prompt. This allows for direct comparison of the impact of different system prompts while keeping other variables constant.

- Hyperparameter Testing. For testing other hyperparameters:

- Create separate target configurations for each set of hyperparameters you want to test.

- Keep all other settings identical across these targets, changing only the specific hyperparameter(s) you're evaluating.

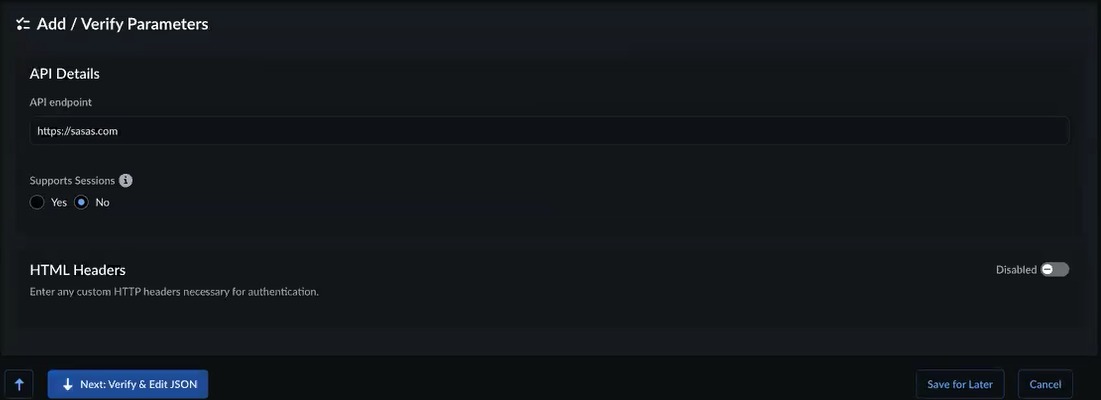
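The {INPUT} placeholder and the one-target-per-variant approach can be illustrated with a short Python sketch. It assumes the placeholder sits inside a quoted JSON string, as in the cURL example; render_request and temperature_variants are hypothetical helpers, not part of AI Red Teaming. They only show why an inserted prompt must be JSON-escaped and how variant request bodies can differ in exactly one field.

```python
import copy
import json

# Request body from the cURL example, with the user prompt replaced by {INPUT}.
# The placeholder is inside a quoted JSON string, so the template is valid JSON.
REQUEST_TEMPLATE = """{
  "messages": [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "{INPUT}"}
  ],
  "temperature": 0.7
}"""


def render_request(template: str, prompt: str) -> dict:
    """Hypothetical helper: put a prompt at {INPUT} and parse the result.

    json.dumps(prompt)[1:-1] escapes quotes, backslashes, and newlines, so
    the rendered body stays valid JSON even for adversarial prompts.
    """
    if "{INPUT}" not in template:
        raise ValueError("template has no {INPUT} placeholder")
    escaped = json.dumps(prompt)[1:-1]
    return json.loads(template.replace("{INPUT}", escaped))


def temperature_variants(template: str, temperatures: list) -> list:
    """Hypothetical helper: one template per temperature, all else identical.

    Each returned string could back its own target configuration.
    """
    base = json.loads(template)  # "{INPUT}" survives as a plain string value
    variants = []
    for value in temperatures:
        body = copy.deepcopy(base)
        body["temperature"] = value  # the only field that changes
        variants.append(json.dumps(body, indent=2))
    return variants
```

Each string returned by temperature_variants still contains {INPUT}, so it could be pasted as the request JSON of a separate target whose settings are otherwise identical.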

Select Next: Add/Verify Parameters.

- In the Add/Verify Parameters page, enter the API Details: the URL of the API endpoint. For example, https://api.openai.com/v1/chat/completions.
- (For Manual Entry method only) Configure the Multi-Turn session parameters. The Multi-Turn session enables sophisticated AI Red Teaming scenarios by maintaining conversation context across multiple turns for LLM target types. Unlike single-turn attacks that send isolated prompts, multi-turn attacks simulate real-world conversational interactions, allowing security teams to test how LLMs handle context, memory, and state across multiple conversation turns. Use the Supports Sessions option to specify whether the API endpoint supports session management for maintaining conversation context.

The Multi-Turn session supports two distinct operational modes: stateless mode and stateful mode.

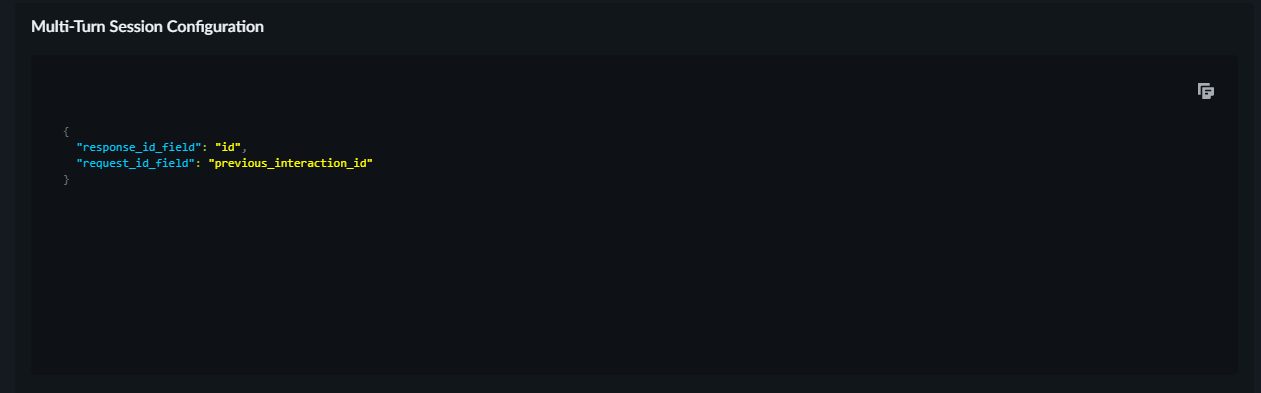

- To enable stateful mode, where session IDs maintain context across requests and the API manages conversation history server-side, set Supports Sessions to Yes. When set to Yes, you must edit the Multi-Turn Session Configuration settings in the Verify & Edit JSON step.

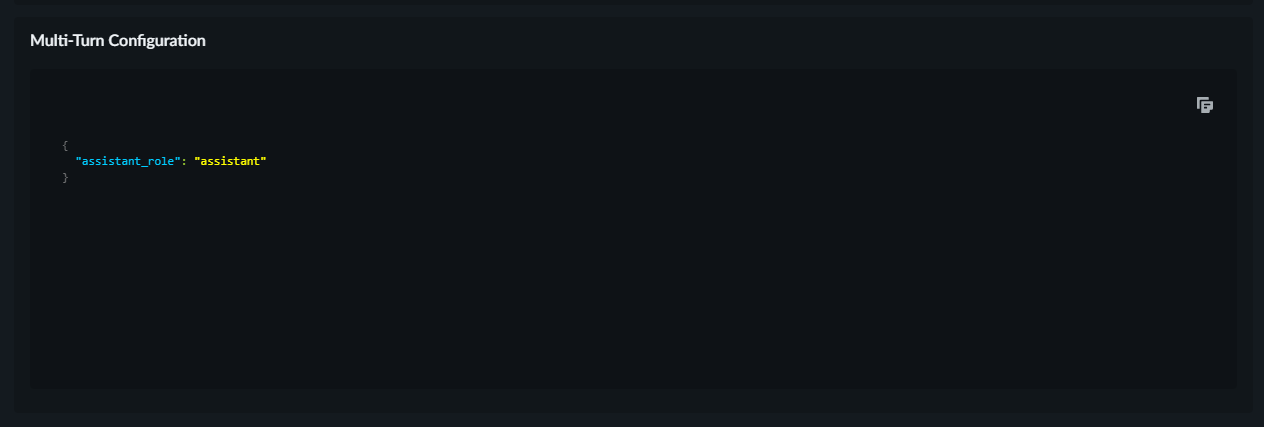

- To enable stateless mode, where the full conversation history is sent with each request, set Supports Sessions to No. When set to No, you must add the Multi-Turn Configuration settings in the Verify & Edit JSON step.

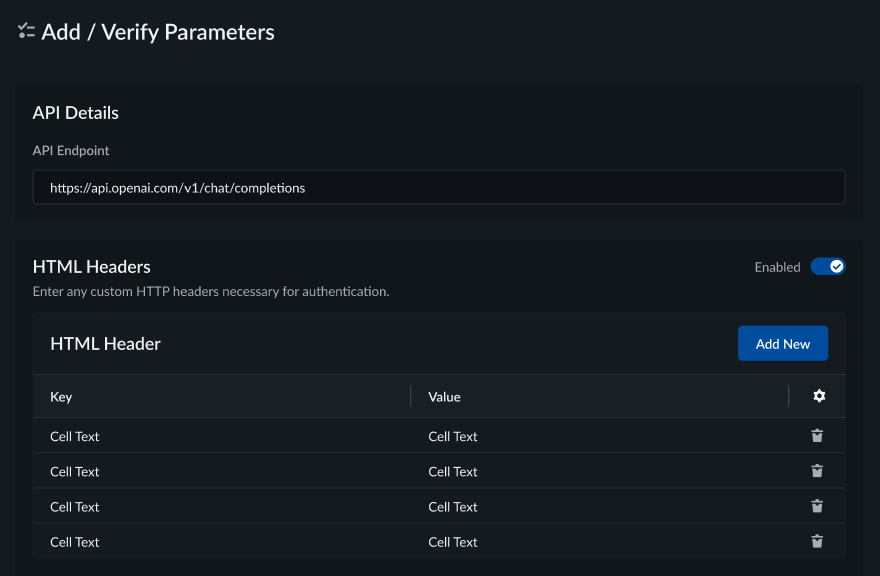
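In stateless mode, the full conversation is resent on every turn. The following minimal Python sketch shows that idea, assuming an OpenAI-style list of role/content messages. build_stateless_messages is a hypothetical helper; its assistant_role parameter stands in for the "assistant_role" value set in the Multi-Turn Configuration step ("assistant" for OpenAI, "model" for Gemini). Gemini's native request format nests message text differently, so only the role name carries over to it.

```python
def build_stateless_messages(
    history: list[tuple[str, str]],
    next_prompt: str,
    assistant_role: str = "assistant",
    user_role: str = "user",
) -> list[dict]:
    """Hypothetical helper: build the full message list for one stateless turn.

    history holds (attack_prompt, model_reply) pairs from earlier turns.
    Because the server keeps no state, every earlier turn is replayed.
    """
    messages = []
    for prompt, reply in history:
        messages.append({"role": user_role, "content": prompt})
        # Prior model turns use the configured assistant role name.
        messages.append({"role": assistant_role, "content": reply})
    messages.append({"role": user_role, "content": next_prompt})
    return messages
```

The request body therefore grows with each turn, which is the main cost of stateless mode compared with a session ID.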


Next, determine whether you want to enable HTTP Headers. Use this option to enter any custom HTTP headers required for authentication. Once enabled, a list of configured HTTP headers appears. You can delete an existing header, or click Add New to add a new HTTP header.

In the Verify & Edit JSON page, configure the API Response. Specify the JSON structure of the request and response.

The easiest way to configure the Response is to first get a sample response from your target and paste the entire JSON here. Then replace the LLM output with {RESPONSE}. AI Red Teaming uses this value to determine whether the attack succeeded or failed. For example, if the response structure is as follows:

```json
{
  "id": "",
  "object": "chat.completion",
  "created": 1732070187,
  "model": "meta-llama-3.1",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "It seems like you haven't provided any input yet. Could you please provide more context or information about what you need help with?"
      },
      "finish_reason": "stop",
      "logprobs": null
    }
  ]
}
```

Paste the payload as is and replace the value for the key content with {RESPONSE}, as follows:

```json
{
  "id": "",
  "object": "chat.completion",
  "created": 1732070187,
  "model": "meta-llama-3.1",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "{RESPONSE}"
      },
      "finish_reason": "stop",
      "logprobs": null
    }
  ]
}
```

Multi-Turn Configuration

(When Supports Sessions is configured as No) Configure the Multi-Turn Configuration parameters. Refer to the relevant target API documentation to determine the appropriate roles for each specific target. The following sample targets show their corresponding roles.

- For OpenAI target types, edit the Multi-Turn Configuration by setting the "assistant_role" field to "assistant" in the JSON configuration.

- For Gemini target types, edit the Multi-Turn Configuration by setting the "assistant_role" field to "model" in the JSON configuration.
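The {RESPONSE} template effectively marks a path through the response JSON. The following Python sketch shows one way such a template could be used to pull the model output out of a real response. find_placeholder and extract_output are hypothetical helpers; this illustrates the idea and is not a description of how AI Red Teaming parses responses internally.

```python
import json
from typing import Any, Optional

RESPONSE_TEMPLATE = """{
  "id": "",
  "object": "chat.completion",
  "created": 1732070187,
  "model": "meta-llama-3.1",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "{RESPONSE}"},
      "finish_reason": "stop",
      "logprobs": null
    }
  ]
}"""


def find_placeholder(node: Any, marker: str = "{RESPONSE}") -> Optional[list]:
    """Return the key/index path to the marker string, or None if absent."""
    if node == marker:
        return []
    if isinstance(node, dict):
        items = node.items()
    elif isinstance(node, list):
        items = enumerate(node)
    else:
        return None  # scalar that is not the marker
    for key, child in items:
        sub = find_placeholder(child, marker)
        if sub is not None:
            return [key, *sub]
    return None


def extract_output(template: str, response_body: str) -> Any:
    """Follow the template's {RESPONSE} path through an actual response body."""
    path = find_placeholder(json.loads(template))
    if path is None:
        raise ValueError("template has no {RESPONSE} placeholder")
    node = json.loads(response_body)
    for step in path:
        node = node[step]  # KeyError/IndexError if the shapes differ
    return node
```

For the template above, the path is ["choices", 0, "message", "content"], so any response with the same shape yields the assistant text.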


Multi-Turn Session Configuration

(When Supports Sessions is configured as Yes) Configure the Multi-Turn Session Configuration. This configuration enables AI Red Teaming for the target Interactions API endpoint, with session management and streaming response support. Refer to the relevant target API documentation to determine the appropriate session fields for each specific target. The following table describes the session fields.

| Multi-Turn Session Configuration | Description |
|---|---|
| response_id_field | The unique identifier for the response. |
| request_id_field | The ID of the previous interaction (if any). |
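In stateful mode, each request carries only the new prompt plus the ID that links it to the previous turn. The following minimal Python sketch assumes response_id_field names the ID field in each response and request_id_field names the field that carries that ID in the next request. The values "id" and "previous_interaction_id", the "input" body field, and the next_request helper are illustrative assumptions; check your target API documentation for the real names.

```python
from typing import Any, Optional

# Hypothetical values; the real field names depend on the target API.
SESSION_CONFIG = {
    "response_id_field": "id",
    "request_id_field": "previous_interaction_id",
}


def next_request(prompt: str, previous_response: Optional[dict], config: dict) -> dict:
    """Hypothetical helper: build a stateful-mode request for one turn.

    The server keeps the conversation history; the client only links turns
    by copying the ID from the previous response into the next request.
    """
    body: dict[str, Any] = {"input": prompt}  # "input" is an assumed field name
    if previous_response is not None:
        previous_id = previous_response[config["response_id_field"]]
        body[config["request_id_field"]] = previous_id
    return body
```

The first turn has no previous response, so it sends only the prompt; every later turn adds the linking ID.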

Click Next: Advanced Configurations. In the Advanced Configurations page, you'll configure Rate Limits and set Guardrails/Content Filters.

(Optional) Enable Rate Limits for applications on the target endpoint.

- Specify the Endpoint Rate Limit. This value is the maximum number of requests allowed per minute for the specified endpoint.
- Specify the Endpoint Rate Limit Error Code. This is the error code your system returns for rate-limit violations.
- Provide a Sample Exception JSON.

(Optional) Enable Guardrails/Content Filters. These fields apply to output guardrails or content filters on the target endpoint.

- Specify the Error code for Guardrails or Content Filters. This field represents the error code your system uses when a response is prevented by filters or safeguards.
- Provide a Sample Exception JSON.
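The rate-limit and guardrail settings amount to a small decision table. The following Python sketch, which is hypothetical and not the product's internal logic, converts a requests-per-minute limit into a minimum spacing between requests and maps status codes to outcomes. It assumes the target returns HTTP 429 (Too Many Requests) for rate limiting and a hypothetical 400 for guardrail blocks; a real integration might also compare the body against the Sample Exception JSON.

```python
from dataclasses import dataclass


@dataclass
class ErrorHandling:
    """Hypothetical mirror of the Advanced Configurations fields."""

    requests_per_minute: int    # Endpoint Rate Limit
    rate_limit_error_code: int  # Endpoint Rate Limit Error Code
    guardrail_error_code: int   # Error code for Guardrails or Content Filters

    def min_interval_seconds(self) -> float:
        """Smallest gap between requests that stays within the limit."""
        return 60.0 / self.requests_per_minute

    def classify(self, status_code: int) -> str:
        """Map a status code to how a scanner might treat the reply."""
        if status_code == self.rate_limit_error_code:
            return "retry"     # throttled: wait, then resend the same prompt
        if status_code == self.guardrail_error_code:
            return "blocked"   # a guardrail or content filter stopped the reply
        if 200 <= status_code < 300:
            return "evaluate"  # normal reply: judge whether the attack worked
        return "error"         # anything else is an unexpected failure


# Example values: 429 is HTTP Too Many Requests; 400 is a hypothetical
# guardrail code, so substitute whatever your target actually returns.
limits = ErrorHandling(requests_per_minute=30, rate_limit_error_code=429,
                       guardrail_error_code=400)
```

Separating "blocked" from "error" matters because a guardrail block is a meaningful scan outcome, while other failures usually indicate a configuration problem.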


Select.


You can add target background information only after the target is successfully validated.

Configure Target Background. Agentic Profiling in Red Teaming helps gather all relevant context about a target endpoint, such as its business use case, background, key capabilities, technical architecture, and other critical information. An autonomous agent gathers this context by probing the target endpoint with targeted prompts. All information gathered this way is presented as the target's profile and is used downstream in Red Teaming scans using the Agent.

Target profiling allows you to either manually provide the required background information or use Agentic Profiling (Fetch through Agentic Profiling) to automatically discover and populate these fields through AI-driven analysis of your endpoints. You can also modify the information collected with Agentic Profiling by updating the fields.

- Add Industry information.
- Add the Use Case, that is, the specific role of the target (such as customer service), or any additional comments.