Prisma AIRS

AI Model Security


Here are the new features available for Prisma AIRS AI Model Security.

Model Security Adds Support for Two New Model Sources


January 2026

Supported for:


AI Model Security now supports JFrog Artifactory and GitLab Model Registry as model sources, adding to existing support for Local Storage, Hugging Face, Amazon S3, Google Cloud Storage (GCS), and Azure Blob Storage.

You can now scan models stored in two new source types:

- Artifactory—Models stored in JFrog Artifactory ML Model, Hugging Face, or generic artifact repositories.

- GitLab Model Registry—Models stored in the GitLab Model Registry.

Organizations can now establish consistent security standards across models regardless of where development teams store them. Security Groups can enforce the same comprehensive validation (deserialization threats, neural backdoors, license compliance, insecure formats) on models in Artifactory and GitLab that you already apply to other sources.

This expansion reduces operational risk from unvalidated models by eliminating blind spots in your AI security posture. Teams no longer need to move models between repositories to apply security rules or generate compliance audit trails.

Configure Artifactory and GitLab sources through the same Security Group workflows used for other model repositories.
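Because Security Groups are chosen by where a model comes from, the workflow can be pictured as a lookup from source type to group. The Python sketch below is a hypothetical illustration only: `detect_source`, the URL patterns it matches (for example `/artifactory/` paths and GitLab `/-/ml/models` paths), and the group names are assumptions made for this example, not how AI Model Security actually classifies sources or names its default groups.

```python
from urllib.parse import urlparse

# Hypothetical group names; the product's default groups may be named differently.
SOURCE_TO_GROUP = {
    "HUGGING_FACE": "external-strict",
    "LOCAL": "internal-default",
    "S3": "internal-default",
    "GCS": "internal-default",
    "AZURE_BLOB": "internal-default",
    "ARTIFACTORY": "internal-default",
    "GITLAB": "internal-default",
}


def detect_source(uri: str) -> str:
    """Guess a model's source type from its URI (illustrative patterns only)."""
    parsed = urlparse(uri)
    host = parsed.netloc.lower()
    if parsed.scheme == "s3":
        return "S3"
    if parsed.scheme == "gs":
        return "GCS"
    if parsed.scheme in ("http", "https"):
        if host.endswith(".blob.core.windows.net"):
            return "AZURE_BLOB"
        if host == "huggingface.co":
            return "HUGGING_FACE"
        if parsed.path.startswith("/artifactory/"):
            return "ARTIFACTORY"
        if "/-/ml/models" in parsed.path:
            return "GITLAB"
    if parsed.scheme in ("", "file"):
        return "LOCAL"
    raise ValueError(f"unrecognized model source: {uri}")


def security_group_for(uri: str) -> str:
    """Return the (hypothetical) Security Group that governs a model at this URI."""
    return SOURCE_TO_GROUP[detect_source(uri)]
```

In this sketch, Artifactory and GitLab simply map into the same group as the other internal sources, so they inherit the same rules without any per-source duplication.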

Custom Labels for AI Model Security Scans


January 2026

Supported for:


Custom Labels for AI Model Security Scans let you attach custom key-value metadata to your model scans, providing essential organizational context for enterprise-scale security operations. Without labels, scan results exist in isolation, lacking the operational context your security teams need to triage, assign, and remediate findings effectively. You can categorize scan results according to your organization's specific requirements, whether you need to distinguish production from development environments, assign ownership to specific teams, track compliance framework requirements, or integrate with your existing CI/CD workflows.

Key Benefits:

- Flexible Labeling Options—Attach custom labels to scan results either at scan time through the API and SDK or retroactively through the user interface, accommodating both automated and manual workflows.

- Simple Schema-Free Format—Uses straightforward string key-value pairs that adapt to diverse organizational structures without enforcing rigid schemas (for example, "environment:production", "team:ml-platform", "compliance:SOC2").

- Rich Contextual Categorization—Apply multiple custom labels to each scan result, creating comprehensive categorization that reflects your operational reality.

- Powerful Filtering Capabilities—Quickly isolate scan results by any combination of labels instead of manually tracking which scans belong to which systems or teams.

- Compliance and Audit Support—Generate targeted audit reports for specific regulatory frameworks, enabling compliance teams to focus on relevant security findings.

- Team-Specific Focus—Allow security teams to prioritize production environment issues while development teams correlate scan results with their deployment pipelines.

- Seamless Integration—Comprehensive API support enables automated custom labeling in CI/CD systems, while intuitive user interface controls support manual management.

- Enterprise Scalability—Essential for scaling Model Security across enterprise environments where hundreds or thousands of scans must be efficiently categorized, filtered, and acted upon by distributed teams with varying responsibilities and access requirements.
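The labeling and filtering workflow described above can be sketched with plain Python data structures. This is a hypothetical illustration, not the AI Model Security SDK: `ScanRecord`, `parse_label`, `add_labels`, and `filter_scans` are invented names, and the sketch assumes that each label key holds a single value and that a filter matches only when every requested key-value pair matches.

```python
from dataclasses import dataclass, field


@dataclass
class ScanRecord:
    """Hypothetical local record of one scan result; not an SDK type."""
    scan_id: str
    outcome: str  # e.g. "PASS" or "FAIL"
    labels: dict[str, str] = field(default_factory=dict)


def parse_label(text: str) -> tuple[str, str]:
    """Split a "key:value" label string at its first colon."""
    key, sep, value = text.partition(":")
    if not sep or not key or not value:
        raise ValueError(f"expected 'key:value', got {text!r}")
    return key, value


def add_labels(record: ScanRecord, *labels: str) -> None:
    """Attach labels to an existing result; a newer value for a key replaces the older one."""
    for label in labels:
        key, value = parse_label(label)
        record.labels[key] = value


def filter_scans(records: list[ScanRecord], criteria: dict[str, str]) -> list[ScanRecord]:
    """Keep only records whose labels match every key-value pair in criteria."""
    return [
        record for record in records
        if all(record.labels.get(key) == value for key, value in criteria.items())
    ]
```

For example, after labeling a few records, `filter_scans(scans, {"environment": "production", "team": "ml-platform"})` returns only the production scans owned by that team.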

Locally Scan AI Models Directly from Cloud Storage


January 2026

Supported for:


The AI Model Security client SDK can now scan machine learning models stored on multiple cloud storage platforms without requiring manual downloads. You can run security scans directly on models hosted in Amazon S3, Azure Blob Storage, Google Cloud Storage, JFrog Artifactory repositories, and GitLab Model Registry using your existing authentication credentials and access controls.

Use this feature when your organization stores trained models in repositories that require authenticated access. It eliminates the need to manually download large model files or to rely on external scanning services that may not have access to your secured storage environments. This approach is particularly valuable when you work with proprietary models or models containing sensitive data, or when you operate under strict data governance policies that prohibit transferring model artifacts outside your controlled infrastructure.

The native storage integration streamlines your security workflow by automatically handling credential resolution, temporary file management, and cleanup while maintaining the same local scanning capabilities you rely on for file-based model analysis. You benefit from reduced operational overhead and faster scan execution because the SDK can optimize download and scanning operations without intermediate storage steps. This capability enables seamless integration into CI/CD pipelines, automated security workflows, and compliance processes where model artifacts must remain within your organization's security perimeter throughout the scanning lifecycle.
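The temporary file management and cleanup steps described above follow a common stage, scan, and clean up pattern. The Python sketch below illustrates only that pattern and does not call the AI Model Security SDK. The `fetch` callable is a hypothetical stand-in for whatever storage client resolves your credentials (for example, an S3 client), and `flag_pickle_formats` is a hypothetical placeholder for the real local scan that merely flags file extensions commonly associated with pickle-based serialization.

```python
import shutil
import tempfile
from contextlib import contextmanager
from pathlib import Path
from typing import Callable, Iterator

# Hypothetical: a callable that copies the model at `uri` into the directory `dest`,
# resolving credentials however your storage client normally does.
Fetcher = Callable[[str, Path], None]

# Extensions often backed by pickle serialization (illustrative, not exhaustive).
PICKLE_BASED = {".pkl", ".pickle", ".pt", ".pth", ".bin", ".joblib"}


@contextmanager
def staged_model(uri: str, fetch: Fetcher) -> Iterator[Path]:
    """Copy a remote model into a private temp directory and always remove it afterwards."""
    workdir = Path(tempfile.mkdtemp(prefix="model-scan-"))
    try:
        fetch(uri, workdir)
        yield workdir
    finally:
        # Runs on success and on error, so no model files are left behind.
        shutil.rmtree(workdir, ignore_errors=True)


def flag_pickle_formats(root: Path) -> dict[str, bool]:
    """Placeholder scan: map each file's relative path to whether its format is pickle-based."""
    return {
        path.relative_to(root).as_posix(): path.suffix.lower() in PICKLE_BASED
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }
```

Using a context manager keeps cleanup in a `finally` block, so the staged copy is deleted even when the fetch or the scan raises an exception.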

Customize Security Groups and View Enhanced Scan Results


December 2025

Supported for:


The AI Model Security web interface now includes the following features, which provide deeper insight into model violations and greater flexibility in customizing your security configuration:

- Customize Security Groups—In addition to the default groups created for all new users, you can now create custom model security groups directly in Strata Cloud Manager. You can also edit the names and descriptions of existing security groups and delete unused security groups (except the default groups).

- File Explorer—For each scan, you can now view every file scanned by AI Model Security in its original file structure, along with detailed file-level violation information for each file.

- Enhanced JSON View—You can now view the raw JSON API responses for scans, violations, and rule evaluations. The JSON view also provides detailed instructions for retrieving this data from your local machine.
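The File Explorer presents scanned files in their original structure with file-level violations, and a similar view can be rebuilt offline from exported JSON. The sketch below assumes a hypothetical response shape (a `files` list whose entries carry `path` and `violations`); the actual field names shown in the JSON view may differ, so treat `SAMPLE_RESPONSE`, `build_tree`, and `render` as illustrative only.

```python
import json

# Hypothetical response shape, assumed for illustration; the real field names may differ.
SAMPLE_RESPONSE = """
{
  "files": [
    {"path": "model/config.json", "violations": []},
    {"path": "model/weights/pytorch_model.bin",
     "violations": [{"rule": "example-deserialization-rule"}]},
    {"path": "model/tokenizer.json", "violations": []}
  ]
}
"""


def build_tree(files: list[dict]) -> dict:
    """Nest flat file paths into directories; each leaf holds that file's violation count."""
    root: dict = {}
    for entry in files:
        *dirs, name = entry["path"].split("/")
        node = root
        for directory in dirs:
            node = node.setdefault(directory, {})
        node[name] = len(entry.get("violations", []))
    return root


def render(node: dict, depth: int = 0) -> list[str]:
    """Render the tree as indented lines, annotating files that have violations."""
    lines = []
    for name in sorted(node):
        child = node[name]
        pad = "  " * depth
        if isinstance(child, dict):
            lines.append(f"{pad}{name}/")
            lines.extend(render(child, depth + 1))
        else:
            note = f"  [{child} violation(s)]" if child else ""
            lines.append(f"{pad}{name}{note}")
    return lines


print("\n".join(render(build_tree(json.loads(SAMPLE_RESPONSE)["files"]))))
```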

Secure AI Models with AI Model Security


October 2025

Supported for:

|

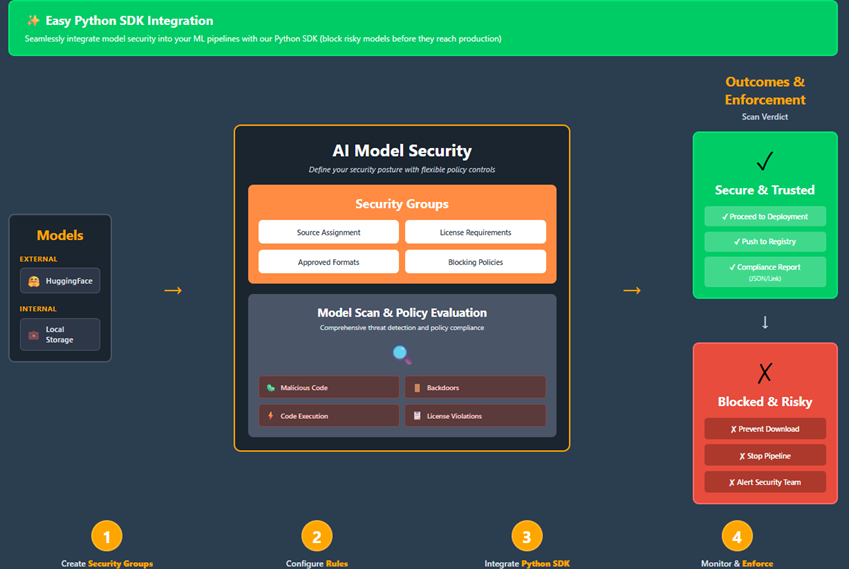

Models serve as the foundation of AI/ML workloads and power critical systems across organizations today. Prisma AIRS now features AI Model Security, a comprehensive solution that ensures only secure, vulnerability-free models are used while maintaining your desired security posture.

AI/ML models pose significant security risks because they can execute arbitrary code during loading or inference, a critical vulnerability that existing security tools fail to adequately detect. Compromised models have been exploited in high-impact attacks, including cloud infrastructure takeovers, sensitive data theft, and ransomware deployments. Your valuable training datasets and the inference data processed by these models make them prime targets for cybercriminals seeking to infiltrate AI-powered systems.

What you can do with AI Model Security:

- Model Security Groups—Create Security Groups that apply different managed rules based on where your models come from. Set stricter policies for external sources such as Hugging Face while tailoring controls for internal sources such as Local or Object Storage.

- Model Scanning—Scan any model version against your Security Group rules. Get clear pass/fail results with supporting evidence for every finding, so you can confidently decide whether a model is safe to deploy.

- Prevent Security Risks Before Deployment: Identify vulnerabilities, malicious code, and security threats in AI models before they reach production environments.

- Enforce Consistent Security Standards: Apply organization-wide security policies across all model sources, ensuring every model meets your requirements regardless of origin.

- Accelerate Secure AI Adoption: Reduce manual security review time with automated scanning, enabling teams to deploy models faster without compromising security.

- Maintain Compliance and Governance: Demonstrate security due diligence with detailed scan evidence and audit trails for regulated industries and internal compliance requirements.
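The pass/fail outcome with supporting evidence described under Model Scanning can be sketched as follows. This is a hypothetical Python illustration, not the product's data model: it assumes a scan fails when any rule in the model's Security Group fails and that each failed rule carries its own evidence. `RuleEvaluation`, `ScanVerdict`, `summarize`, and the rule names in the usage note are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleEvaluation:
    """Hypothetical result of applying one Security Group rule to one model version."""
    rule: str
    passed: bool
    evidence: tuple[str, ...] = ()


@dataclass(frozen=True)
class ScanVerdict:
    outcome: str  # "PASS" or "FAIL"
    findings: dict[str, tuple[str, ...]]  # failed rule name -> its supporting evidence


def summarize(evaluations: list[RuleEvaluation]) -> ScanVerdict:
    """Assume a model passes only if every rule passes; failed rules keep their evidence."""
    findings = {e.rule: e.evidence for e in evaluations if not e.passed}
    return ScanVerdict("FAIL" if findings else "PASS", findings)
```

For instance, a scan in which a placeholder `example-format-rule` fails with the evidence `"weights/model.pkl uses pickle"` yields a `FAIL` verdict whose findings point directly at that file, which is the information a reviewer needs to decide whether the model is safe to deploy.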