Prisma AIRS

AI Red Teaming


Here are the new Prisma AIRS AI Red Teaming features.

Multi-turn Attack Support

February 2026

Multi-turn attack support lets you run sophisticated AI Red Teaming scenarios that maintain conversation context across multiple interactions with your target language models. This extends testing beyond basic single-turn prompts to simulate realistic attack patterns in which adversaries gradually manipulate model behavior through sequential prompts, mimicking how actual users interact with AI systems in production environments.

When you configure multi-turn attacks, AI Red Teaming automatically manages conversation state. It supports both stateless APIs that expect the prior conversation in each request and stateful backends that maintain session context internally.

- For stateless configurations, you specify the assistant role name used by your target API, and the system automatically builds and transmits the complete conversation history with each subsequent request.

- For stateful configurations, you define how session identifiers are extracted from responses and injected into follow-up requests, allowing the system to maintain context without resending entire conversation histories.
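The two modes above can be sketched in a few lines of Python. This is a minimal illustration, not the product's implementation: the class names, the `messages` and `message` request keys, and the `session_id` field name are all assumptions chosen for the example, and a real target API may use different shapes.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StatelessConversation:
    """Hypothetical helper: resends the full message history on every turn."""
    assistant_role: str = "assistant"  # role name the target API uses for replies
    history: list = field(default_factory=list)

    def build_request(self, user_prompt: str) -> dict:
        # Append the new user turn, then send the entire history so far.
        self.history.append({"role": "user", "content": user_prompt})
        return {"messages": list(self.history)}

    def record_response(self, reply_text: str) -> None:
        # Store the reply under the configured assistant role name.
        self.history.append({"role": self.assistant_role, "content": reply_text})


@dataclass
class StatefulConversation:
    """Hypothetical helper: sends only the new turn plus a session identifier."""
    session_field: str = "session_id"  # assumed name of the session field
    session_id: Optional[str] = None

    def build_request(self, user_prompt: str) -> dict:
        request = {"message": user_prompt}
        if self.session_id is not None:
            # Inject the identifier extracted from an earlier response.
            request[self.session_field] = self.session_id
        return request

    def record_response(self, response: dict) -> None:
        # Extract the session identifier, keeping the old one if it is absent.
        self.session_id = response.get(self.session_field, self.session_id)
```

In the stateless case the request grows with every turn, while in the stateful case each request stays small and depends on the backend to remember earlier turns.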

AI Red Teaming supports all major LLM providers, including OpenAI, Gemini, Bedrock, and Hugging Face, and accommodates custom endpoints through flexible configuration options that auto-detect common message formats.

You can use multi-turn attacks to test vulnerabilities that emerge only through extended conversations with AI agents. This is particularly useful when evaluating chatbots and virtual assistants that maintain memory across interactions, where you need to verify whether context from earlier turns can be exploited to bypass safety controls in later exchanges. If you are assessing custom API endpoints or self-hosted LLM backends, multi-turn testing reveals how these systems handle conversation state management and whether attackers could poison agent memory or create context confusion. Organizations migrating from one LLM provider to another can use multi-turn campaigns to confirm that their new infrastructure maintains the same security posture across extended interactions.

Agentic Target Profiling

February 2026

Target Profiling enhances your AI security assessments by gathering

comprehensive contextual information about your AI endpoints, enabling more accurate

and relevant vulnerability discoveries. With Target Profiling, you can leverage both

user-provided information and dynamic Agentic Profiling to get detailed profiles of

your AI models, applications, and agents.

Target Profiling collects critical information about your AI systems, including industry context, use cases, competitive landscape, and technical foundations such as base models, architecture patterns, and accessibility requirements. AI Red Teaming's Agentic Profiling capability connects to your target endpoint and interrogates it to discover the target's business and technical context. This automated approach saves you time while ensuring comprehensive coverage of the contextual factors that influence security risks.

The feature provides you with a centralized view where you can visualize all gathered

context. You can distinguish between user-provided information and agent-discovered

data, giving you full transparency into how your target profiles are

constructed.
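One way to picture the distinction between user-provided and agent-discovered data is a profile whose fields each carry a provenance tag. The sketch below is purely illustrative: the `Source`, `ProfileField`, and `split_by_source` names are hypothetical and do not reflect the product's data model.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    USER_PROVIDED = "user-provided"
    AGENT_DISCOVERED = "agent-discovered"


@dataclass(frozen=True)
class ProfileField:
    """A single piece of target context, tagged with where it came from."""
    name: str
    value: str
    source: Source


def split_by_source(fields):
    """Group profile fields by provenance so each group can be shown separately."""
    grouped = {source: [] for source in Source}
    for profile_field in fields:
        grouped[profile_field.source].append(profile_field)
    return grouped


# Made-up example values, for illustration only.
profile = [
    ProfileField("industry", "retail", Source.USER_PROVIDED),
    ProfileField("use case", "customer support chatbot", Source.USER_PROVIDED),
    ProfileField("architecture pattern", "retrieval-augmented", Source.AGENT_DISCOVERED),
]
grouped = split_by_source(profile)
```

Keeping the source on each field, rather than in two separate lists, makes it straightforward to render one combined view while still showing how each value was obtained.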

Enhanced AI Red Teaming for AI Agents and Multi-Agent Systems

February 2026

You can now use the enhanced capabilities of Prisma AIRS AI Red Teaming to comprehensively assess the security posture of your autonomous AI agents and multi-agent systems. As your organization deploys agentic systems that extend beyond traditional AI applications to include tool calling, instruction execution, and system interactions, you face an expanded and more complex attack surface that requires specialized security assessment approaches. This advanced AI Red Teaming solution addresses the unique vulnerabilities inherent in generative AI agents by employing agent-led testing methodologies that craft targeted goals and attacks specifically designed to exploit agentic system weaknesses.

When you configure your AI Red Teaming assessments, the system automatically tailors its approach to your target endpoint type. This helps you uncover critical vulnerabilities such as tool misuse, where malicious actors use deceptive prompts to manipulate your AI agents into abusing their integrated tools while operating within authorized permissions. The solution also identifies intent breaking and goal manipulation vulnerabilities, where attackers redirect your agent's objectives and reasoning to perform unintended tasks.

This targeted approach ensures you can confidently deploy AI agents in

production environments while maintaining robust security controls against the

evolving threat landscape targeting autonomous AI systems.

Secure AI Red Teaming with Network Channels

January 2026

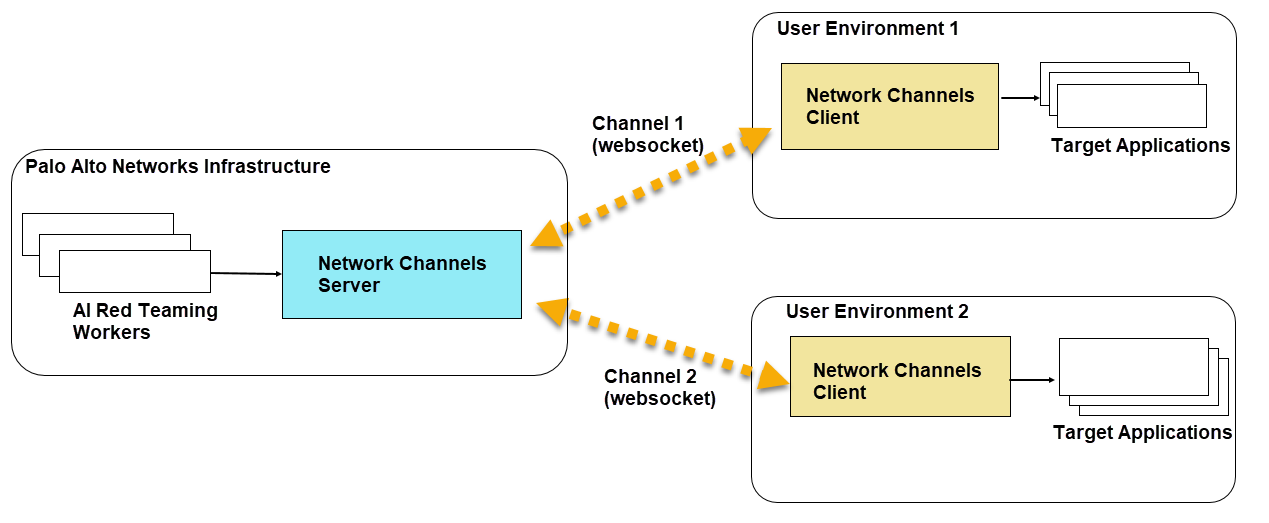

Network Channels is a secure connection solution that enables AI Red Teaming to

safely access and analyze your internal endpoints without requiring firewall rules

for specific IP addresses or opening inbound ports. This enterprise-grade solution

puts you in complete control of the connection, allowing you to initiate and

terminate access while maintaining your security perimeter.

Network Channels enables you to conduct secure, continuous AI Red Teaming assessments against APIs and models hosted within your private infrastructure. It eliminates the need to expose inbound ports or modify firewall configurations, in keeping with Zero Trust principles.

A channel is a unique communication pathway that clients use to establish connections. Each channel has a unique connection URL with authentication credentials. You must create and validate a channel before you use it to add a target. You can create multiple channels for different environments, and each channel can handle multiple targets that are accessible to it.
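The relationship between channels and targets can be modeled as a small data structure. This is a sketch under stated assumptions: the `Channel` class, its field names, and the placeholder URL are hypothetical, and `validate()` is only a stand-in for the real validation step, which is not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class Channel:
    """Hypothetical model of one channel and the targets reachable through it."""
    name: str
    connection_url: str  # unique per channel; credentials are omitted in this sketch
    validated: bool = False
    targets: list = field(default_factory=list)

    def validate(self) -> None:
        # Stand-in for the real validation step.
        self.validated = True

    def add_target(self, target_name: str) -> None:
        # A channel must be created and validated before a target can use it.
        if not self.validated:
            raise RuntimeError("channel must be validated before adding a target")
        self.targets.append(target_name)


# One channel per environment, each serving several targets (made-up names).
staging = Channel(name="staging", connection_url="wss://channel.example.invalid/staging")
staging.validate()
staging.add_target("support-chatbot")
staging.add_target("search-agent")
```

Enforcing the validation check inside `add_target` mirrors the ordering requirement described above: create the channel, validate it, then attach targets.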

The solution uses a lightweight Network Channels client deployed within your environment. This client establishes a persistent, secure outbound WebSocket connection to the Palo Alto Networks environment, so you can test internal systems without the risks of allowing specific IP addresses through your firewall or permitting inbound access.

Additionally, Strata Cloud Manager provides a Docker pull secret that you can use to pull the Docker image and Helm chart for the Network Channels client.

This combined solution is ideal for:

- Restricted Environments: Conducting assessments for enterprise users with air-gapped systems or strict compliance requirements.

- Continuous Monitoring: Maintaining reliable, persistent connectivity for real-time AI security updates.

- Automated Workflows: Deploying network broker clients across distributed infrastructure using existing container orchestration (Kubernetes/Helm) without manual intervention.

Key benefits:

- Enhanced Security: No need to expose internal endpoints or modify firewall rules.

- Complete Control: Initiate and terminate connections on demand.

- Easy Setup: Simple client installation process.

- Flexible Management: Create and manage multiple secure channels for different environments.

- Reusability: Use the same connection for multiple targets.

- Enterprise Ready: Designed for organizations with strict security requirements.

Remediation Recommendations for AI Red Teaming Risk Assessment

January 2026

The Recommendations feature enables you to seamlessly transition from

identifying AI system vulnerabilities through Red Teaming assessments to

implementing targeted security controls that address your specific risks. This

feature closes the critical gap between AI risk assessment and mitigation by

transforming vulnerability findings into actionable remediation plans. The

remediation recommendations can be found in all Attack Library and Agent Scan Reports.

When you conduct AI Red Teaming evaluations on your AI models,

applications, or agents, this integrated solution automatically analyzes the

discovered security, safety, brand reputation, and compliance risks to generate

contextual remediation recommendations that directly address your specific

vulnerabilities.

The generated contextual remediation recommendations include two distinct

components:

- Runtime Security Policy configuration: Rather than configuring runtime security policies through trial and error, you receive intelligent guidance that maps each identified risk category to appropriate guardrail configurations, such as enabling prompt injection protection for security vulnerabilities or toxic content moderation for safety concerns.

- Other recommended measures: The system identifies the vulnerabilities that were successfully exploited and provides the corresponding remediation measures, prioritized by effectiveness and implementation feasibility. This removes the need for manual evaluation and lets you focus resources on high-impact fixes.
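The two components above can be illustrated with a small sketch. Only the two category-to-guardrail pairings come from the text; the key strings, the `Measure` class, its 1 to 5 scores, and the sort order are assumptions made for the example, since the actual ranking logic is not described.

```python
from dataclasses import dataclass

# The two pairings named in the text; the exact strings are illustrative.
GUARDRAIL_FOR_CATEGORY = {
    "security": "prompt injection protection",
    "safety": "toxic content moderation",
}


@dataclass(frozen=True)
class Measure:
    """Hypothetical remediation measure with made-up scoring fields."""
    description: str
    effectiveness: int  # assumed 1-5 scale, higher is more effective
    feasibility: int    # assumed 1-5 scale, higher is easier to implement


def recommend_guardrails(risk_categories):
    """Return guardrails for the categories found, skipping unmapped ones."""
    return [
        GUARDRAIL_FOR_CATEGORY[category]
        for category in risk_categories
        if category in GUARDRAIL_FOR_CATEGORY
    ]


def prioritize(measures):
    """Order measures by effectiveness, then feasibility, highest first."""
    return sorted(
        measures,
        key=lambda m: (m.effectiveness, m.feasibility),
        reverse=True,
    )
```

For example, `recommend_guardrails(["safety", "compliance"])` returns only the safety guardrail, because this sketch has no mapping for compliance.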

For organizations deploying AI systems in production environments, this

capability ensures that your runtime security configurations and remediation

measures are informed by actual risk insights rather than generic best practices,

resulting in more effective protection against the specific threats your AI systems

face.

The remediation recommendations appear directly in your AI Red Teaming scan

reports, providing actionable guidance. You can then manually create and attach the

recommended security profiles to your desired workloads, transforming AI risk

management from a reactive process into a proactive workflow that connects

vulnerability discovery with targeted protection.

AI Red Teaming Executive Reports

January 2026

When you conduct AI Red Teaming assessments, you often need to share results with executive stakeholders such as CISOs and CIOs, who require high-level insights rather than granular technical details. The exportable executive PDF report feature addresses this need by transforming your AI Red Teaming assessment data into a consumable format. You can now generate PDF reports that consolidate the essential information from the web interface, organized to highlight critical takeaways and strategic insights in a format that is easy to share with executives. While security practitioners can use the CSV and JSON export formats to access detailed findings for remediation, the PDF format is aimed at CXOs who want a high-level risk assessment of an AI system.

Use this feature to communicate security posture and risk assessments to executive leadership who may not have the time or technical background to parse detailed CSV exports or navigate complex web interfaces. The PDF format lets you distribute reports through email or include them in executive briefings and board presentations. The report contains an AI summary, a key risk breakdown, high-level overview charts, and the metrics that matter most to decision-makers.

You can use this feature when preparing for executive reviews, board meetings, or any scenario where you need to demonstrate the effectiveness of your security controls and communicate risk exposure to non-technical stakeholders. The structured format, with an AI summary, overview sections, and detailed attack tables, ensures that both strategic insights and supporting technical evidence are readily accessible, enabling informed decision-making at the executive level.

AI Summary for AI Red Teaming Scans

January 2026

When you complete an AI Red Teaming scan, you receive an AI Summary (in the

scan report) that synthesizes key risks and their implications. This executive

summary eliminates the need for manual interpretation of technical data, allowing

you to quickly understand which attack categories or techniques pose the greatest

threats to your systems and what the potential business impact might be.

The AI Summary contains the scan

configuration, key risks, and implications.

This capability is particularly valuable when you need to communicate AI risk assessment results across different organizational levels or when preparing briefings for leadership meetings. Rather than struggling to translate technical vulnerability reports into business language, you can rely on the report generated by AI Red Teaming to articulate security, safety, compliance, brand, and business risks in terms that resonate with executive audiences. The summary also helps teams prioritize the remediation measures they can adopt for a safer deployment of AI systems in production.

Enhanced AI Red Teaming with Brand Reputation Risk Detection

January 2026

You can now assess and protect your AI systems against brand reputation risks using the enhanced AI Red Teaming capabilities in Prisma AIRS. This feature addresses a critical gap in AI security by identifying vulnerabilities that could damage your organization's reputation when AI systems interact with users in production environments. Beyond the existing Security, Safety, and Compliance risk categories, you can now scan for threats including Competitor Endorsements, Brand Tarnishing Content, Discriminating Claims, and Political Endorsements.

The enhanced agent assessment capabilities automatically generate goals focused on brand reputation risk scenarios that could expose your organization to public relations challenges or regulatory scrutiny. You benefit from specialized evaluation methods designed to detect subtle forms of reputational risk, including false claims and inappropriate endorsements that traditional security scanning might miss. This approach lets you proactively identify and address potential brand vulnerabilities before deploying AI systems to production environments, protecting both your technical infrastructure and your corporate reputation.

Error Logs and Partial Scan Reports

December 2025

When you conduct AI Red Teaming scans using Prisma AIRS, you may encounter

situations where scans fail completely or complete only partially due to target

system issues or connectivity problems. The Error Logs and Partial Scan Reports feature provides you

with comprehensive visibility into scan failures and enables you to generate

actionable reports even when your scans don't complete successfully. You can access

detailed error logs directly within the scan interface, both during active scans on

the progress page and after completion in the scan logs section, allowing you to

quickly identify whether issues stem from your target AI system or the Prisma AIRS

platform itself.

This feature particularly benefits you when conducting Red Teaming

assessments against enterprise AI systems that may have intermittent availability or

response issues. When your scan completes the full simulation but doesn’t receive

valid responses for all attacks, AI Red Teaming marks it as partially complete

rather than failed. You can then choose to generate a comprehensive report based on

the available test results, giving you valuable security insights even from

incomplete assessments. AI Red Teaming transparently informs you about credit

consumption before report generation and clearly marks any generated reports as

partial scans, indicating the percentage of attacks that received responses.
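The partial-scan labeling described above amounts to a simple calculation. The function below is a sketch under stated assumptions: the name `classify_scan`, the status strings, and the rounding are choices made for illustration, and it only covers scans that ran the full simulation (scans that fail outright are not modeled).

```python
def classify_scan(total_attacks: int, valid_responses: int) -> dict:
    """Label a finished scan and report the share of attacks that got a valid response."""
    if total_attacks <= 0:
        raise ValueError("total_attacks must be positive")
    if not 0 <= valid_responses <= total_attacks:
        raise ValueError("valid_responses must be between 0 and total_attacks")

    # Percentage of attacks that received a valid response, to one decimal place.
    percent = round(100 * valid_responses / total_attacks, 1)

    # A scan that ran fully but is missing some responses is partial, not failed.
    status = "complete" if valid_responses == total_attacks else "partial"
    return {"status": status, "response_percent": percent}


# Made-up numbers: 150 of 200 attacks received a valid response.
result = classify_scan(total_attacks=200, valid_responses=150)
```

Here `result` is `{"status": "partial", "response_percent": 75.0}`, which corresponds to a report marked as a partial scan with 75% of attacks answered.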

By leveraging this capability, you can maximize the value of your Red

Teaming efforts, troubleshoot scanning issues more effectively, and maintain

continuous security assessment workflows even when facing target system limitations

or temporary connectivity challenges during your AI security evaluations.

Automated AI Red Teaming

October 2025

Palo Alto Networks' Prisma AIRS AI Red Teaming is an automated solution designed to scan any AI system, including LLMs and LLM-powered applications, for safety and security vulnerabilities.

The tool performs a Scan against a specified Target (model,

application, or agent) by sending carefully crafted attack prompts to

simulate real-world threats. The findings are compiled into a comprehensive Scan

Report that includes an overall Risk Score (ranging from 0 to 100),

indicating the system's susceptibility to attacks.

Prisma AIRS offers three distinct scanning modes for thorough assessment:

- Attack Library Scan: Uses a curated, proprietary library of predefined attack scenarios, categorized by Security (e.g., Prompt Injection, Jailbreak), Safety (e.g., Bias, Cybercrime), and Compliance (e.g., OWASP LLM Top 10).

- Agent Scan: Uses a dynamic LLM attacker to generate and adapt attacks in real time, enabling full-spectrum Black-box, Grey-box, and White-box testing.

- Custom Attack Scan: Allows users to upload and execute their own custom prompt sets alongside the built-in library.

A key feature of the service is its single-tenant deployment model, which

ensures complete isolation of compute resources and data for enhanced security and

privacy.