Prisma AIRS

Create Model Groups in Panorama


Create a model group to apply customized protections to your AI models. Configure each model group with specific protection settings for your AI models, applications, and data.


This section helps you create model groups in Panorama. You can customize the settings of each group to apply specific AI application protection, AI model protection, and AI data protection to the AI models in it. Add a model group to an existing AI security profile or to a new one.

To create a new model group or edit an existing one with customized protections, follow these steps:

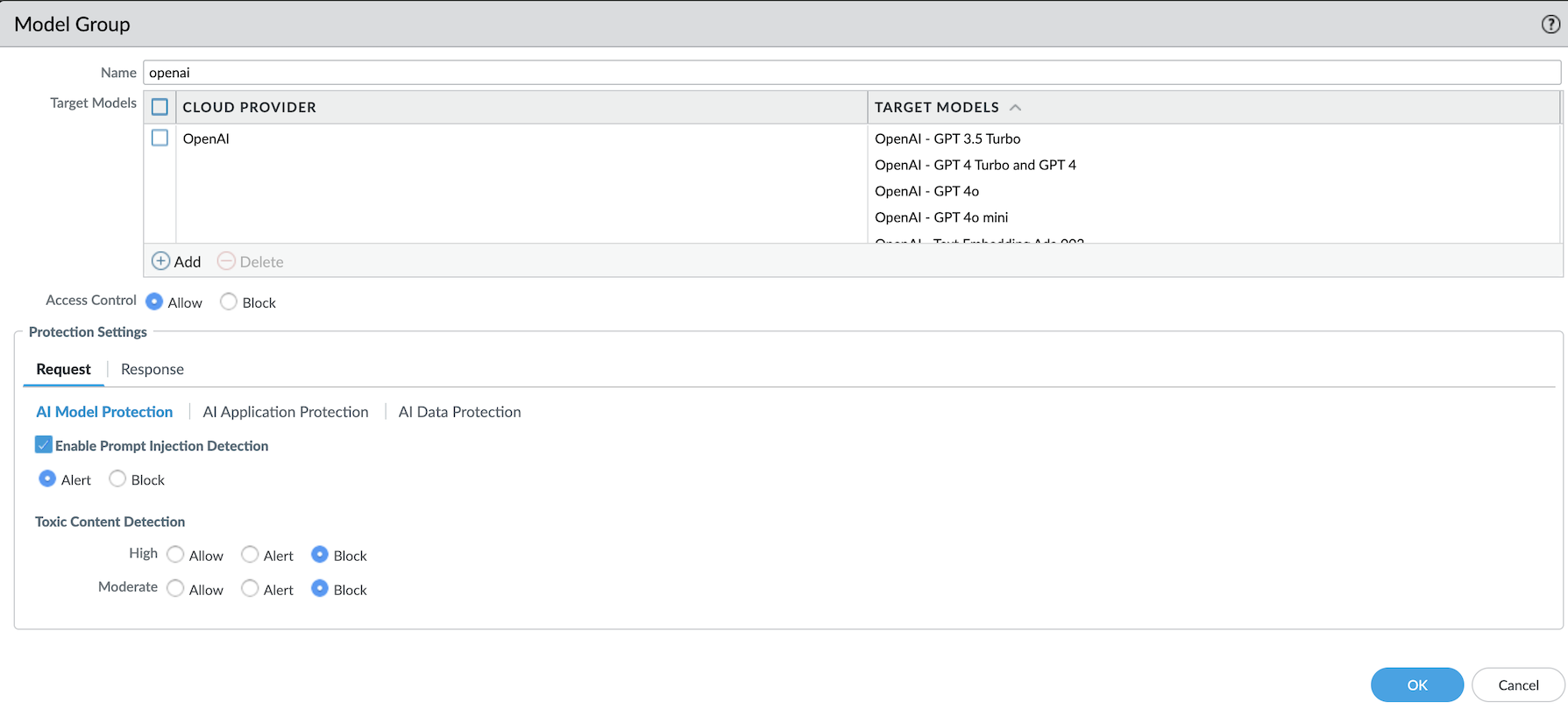

- Log in to Panorama.

- Navigate to Objects > Security Profiles > AI Security.

- Select the default model group or click Add to create a new one.

  The security profile has a default model group defining the behavior of models not assigned to any specific group. If a supported AI model isn't part of a designated group, the default model group’s protection settings will apply.

- Enter a Name for the model group.

- In the Target Models field, select the AI models supported by the cloud provider.

  Click Add, select a cloud provider from the dropdown list, and then select the available target models from the supported list. (See the AI Models on Public Clouds Support Table list for reference.)

- In Access Control, set the action to Allow or Block for the model group.

  - When you set the access control to Allow, you can configure the protection settings for request and response traffic.

  - When you set the access control to Block for a model group, the protection settings are also disabled. This means any traffic to this model will be blocked for this profile.

- Configure the following Protection Settings for the Request and Response traffic:

AI Model Protection

- Prompt Injection: Enable prompt injection detection and set the action to Alert to log the prompt injection, or to Block to stop the prompt when a prompt injection is detected. The feature supports the following languages: English, Spanish, Russian, German, French, Japanese, Portuguese, Italian, and Simplified Chinese.

- Toxic Content: Enable toxic content detection in LLM requests or responses. This feature protects your LLMs against generating or responding to inappropriate content. The feature supports the following languages: English, Spanish, Russian, German, French, Japanese, Portuguese, Italian, and Simplified Chinese. The actions include Allow, Alert, or Block, with the following severity levels:

- Moderate: Detects content that some users may consider toxic, but which may be more ambiguous. The default value is Allow.

- High: Content with a high likelihood of most users considering it toxic. The default value is Allow.

The system will warn you if you attempt to configure a more severe action for moderately toxic content than for highly toxic content. When toxic content is detected, an AI Security log is generated with the following details:

- Incident Type: "Model Protection"

- Incident Subtype: "Toxic Content"

- Incident Subtype Details: Specific toxicity category (e.g., Hate, Sexual, Violence & Self Harm, Profanity)

- Severity: Medium for "High" confidence matches, Low for "Moderate" confidence matches
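The ordering check and the log severity mapping described above can be sketched in Python. This is an illustrative model only, not Panorama's implementation or API: the names `ACTION_RANK`, `ToxicContentSettings`, `has_ordering_warning`, and `log_severity` are hypothetical, and the ranking Allow < Alert < Block is an assumption made for the example.

```python
from dataclasses import dataclass

# Assumed severity ordering of actions (hypothetical, for illustration only).
ACTION_RANK = {"Allow": 0, "Alert": 1, "Block": 2}


@dataclass
class ToxicContentSettings:
    # Both severity levels default to Allow, as stated in the text above.
    moderate: str = "Allow"
    high: str = "Allow"


def has_ordering_warning(settings: ToxicContentSettings) -> bool:
    """Return True when the Moderate action is more severe than the High action."""
    return ACTION_RANK[settings.moderate] > ACTION_RANK[settings.high]


def log_severity(confidence: str) -> str:
    """Map the match confidence to the severity recorded in the AI Security log."""
    return {"High": "Medium", "Moderate": "Low"}[confidence]
```

For example, `has_ordering_warning(ToxicContentSettings(moderate="Block", high="Alert"))` evaluates to `True`, which corresponds to the case where the system warns you.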


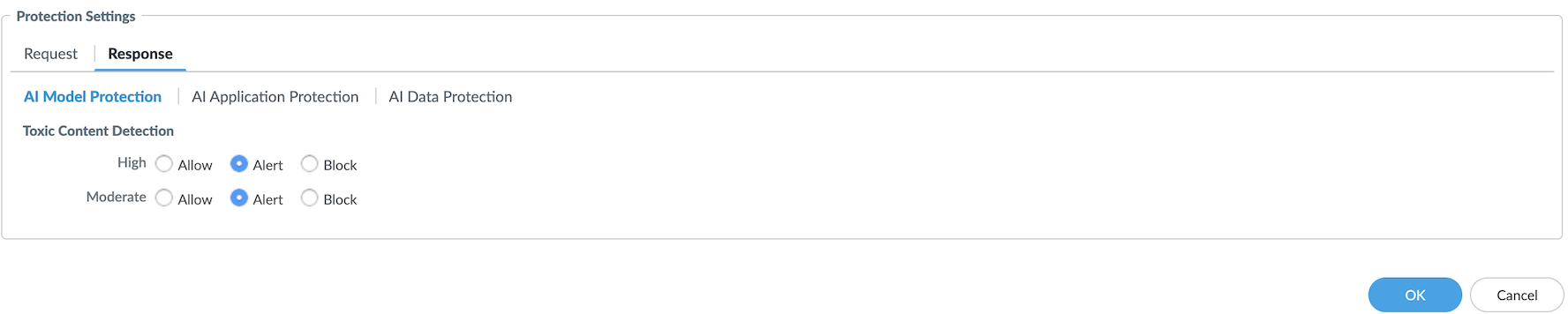


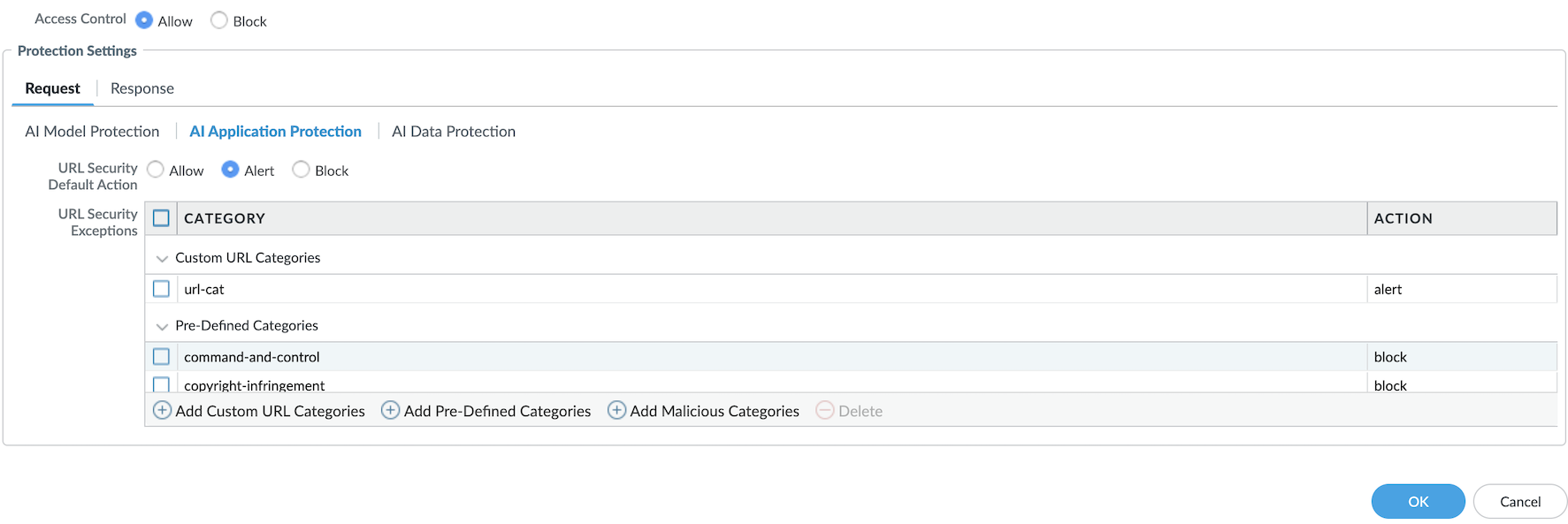

AI Application Protection

- URL Security Default Action: Set the default URL security action to Allow, Alert, or Block. This action applies to URLs detected in the content of the model input.

- URL Security Exceptions: Override the default behavior by specifying actions for individual URL categories. For example, configure the default action as Allow but block malicious URL categories, or set the default action to Block with exceptions for trusted categories. You can add custom URL categories, predefined categories, and malicious categories. When you click Add Malicious Categories, you can add multiple malicious categories to the URL Security Exceptions table. Adding more malicious categories doesn’t affect existing custom configurations.
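The relationship between the default action and the exceptions can be pictured as a lookup with a fallback. The sketch below is a hypothetical illustration, not Panorama code: `resolve_url_action` and the category names in `exceptions` are made up for the example.

```python
from typing import Dict


def resolve_url_action(category: str, default_action: str, exceptions: Dict[str, str]) -> str:
    """Return the exception action for a URL category, else the default action."""
    return exceptions.get(category, default_action)


# Default action Allow, with two illustrative malicious categories blocked.
exceptions = {"malware": "Block", "phishing": "Block"}

print(resolve_url_action("malware", "Allow", exceptions))  # exception applies
print(resolve_url_action("news", "Allow", exceptions))     # falls back to default
```

The same function covers the inverse setup: pass `"Block"` as the default action and list trusted categories with the action `"Allow"`.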


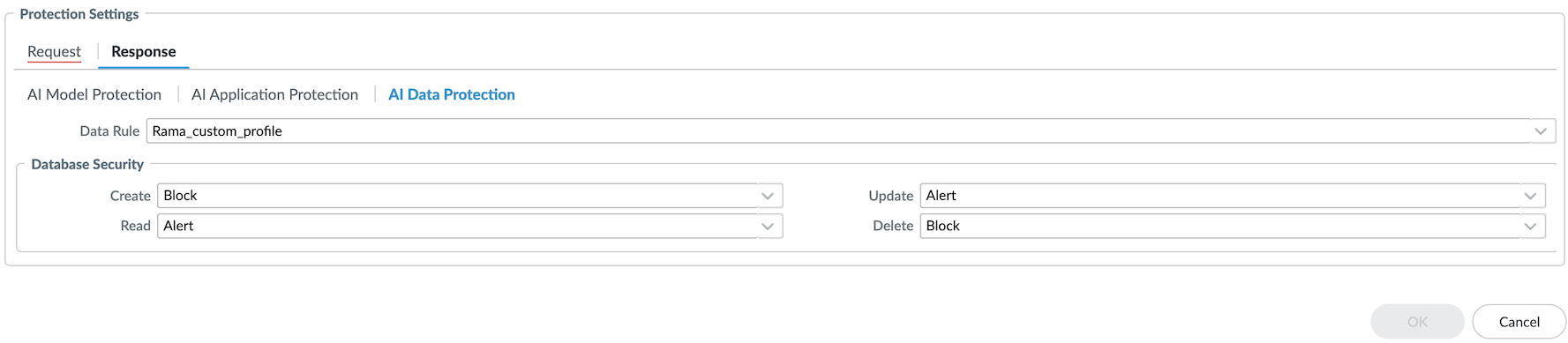

AI Data Protection

- Data Rule: Select the predefined or custom DLP rule to detect sensitive data in model input, or click New to create a new DLP rule.

- Database Security: Regulate the types of database queries generated by GenAI models to ensure appropriate access control. Set an action (Allow, Alert, or Block) for each database operation (Create, Read, Update, and Delete) to prevent unauthorized actions.
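As a rough mental model of the Database Security setting, each generated query is classified as one of the four operations and the action configured for that operation is applied. The sketch below is hypothetical: the keyword mapping in `SQL_TO_OPERATION`, the sample values in `db_actions`, and the function `action_for_query` are assumptions for illustration and do not describe how the product inspects queries.

```python
from typing import Dict

# Hypothetical per-operation actions for a model group (illustration only).
db_actions: Dict[str, str] = {
    "Create": "Block",
    "Read": "Allow",
    "Update": "Alert",
    "Delete": "Block",
}

# Assumed mapping from a SQL statement's leading keyword to an operation.
SQL_TO_OPERATION = {
    "CREATE": "Create",
    "INSERT": "Create",
    "SELECT": "Read",
    "UPDATE": "Update",
    "DELETE": "Delete",
}


def action_for_query(query: str, actions: Dict[str, str]) -> str:
    """Look up the configured action for the operation a query performs."""
    parts = query.split()
    if not parts:
        raise ValueError("Empty query")
    keyword = parts[0].upper()
    operation = SQL_TO_OPERATION.get(keyword)
    if operation is None:
        raise ValueError(f"Unrecognized statement: {keyword}")
    return actions[operation]
```

With these sample values, a `SELECT` statement resolves to Allow, while a `DELETE` statement resolves to Block.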

- Click OK to create a new model group or save the existing model group configurations.

Next, add the model group to an AI security profile.
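To summarize how a model group is selected for a given model, the sketch below models the default-group fallback and the effect of setting Access Control to Block, as described in the steps above. It is an illustration under stated assumptions, not a Panorama API: `ModelGroup`, `effective_settings`, and the model names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelGroup:
    name: str
    target_models: List[str] = field(default_factory=list)
    access_control: str = "Allow"  # "Allow" or "Block"
    protections: Dict[str, str] = field(default_factory=dict)


def effective_settings(
    model: str, groups: List[ModelGroup], default: ModelGroup
) -> Optional[Dict[str, str]]:
    """Return the protection settings that apply to a model, or None if blocked."""
    # A model not assigned to any specific group falls back to the default group.
    group = next((g for g in groups if model in g.target_models), default)
    # With Access Control set to Block, protection settings are disabled
    # and traffic to the model is blocked.
    if group.access_control == "Block":
        return None
    return group.protections


default_group = ModelGroup("default", protections={"prompt_injection": "Alert"})
groups = [
    ModelGroup("strict", ["model-a"], "Allow", {"prompt_injection": "Block"}),
    ModelGroup("denied", ["model-b"], "Block"),
]
```

Here `model-a` receives the settings of the `strict` group, `model-b` is blocked outright, and any other supported model receives the default group's settings.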