CN-Series

Prepare the Cluster

You will need to configure the following:

- Nodegroup and Nodes

- Node Labels

- Service Account

- Interfaces

Nodegroup and Nodes

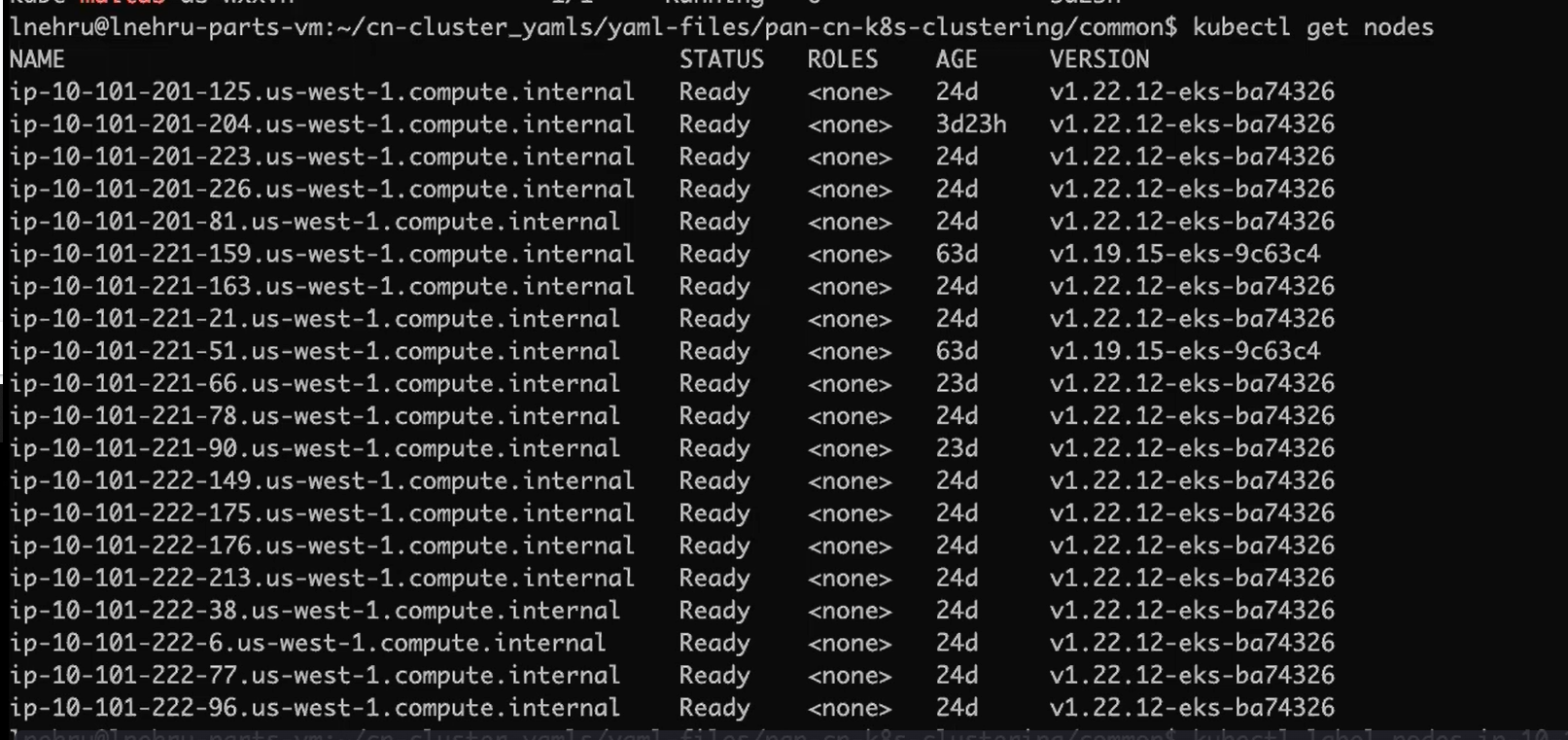

You need a minimum of eight nodes to handle the topology and accommodate all the pods in the solution. Palo Alto Networks recommends four nodegroups with a minimum of two nodes each. Ensure that the MP nodegroup does not overlap with the other three nodegroups.

If you want to use DPDK, you need an AMI with DPDK drivers configured on it. For more information, see Set up DPDK on AWS EKS.

After the EKS cluster is running, use the CloudFormation template with Multus to bring up the nodegroups and EC2 instances with nodetypes.

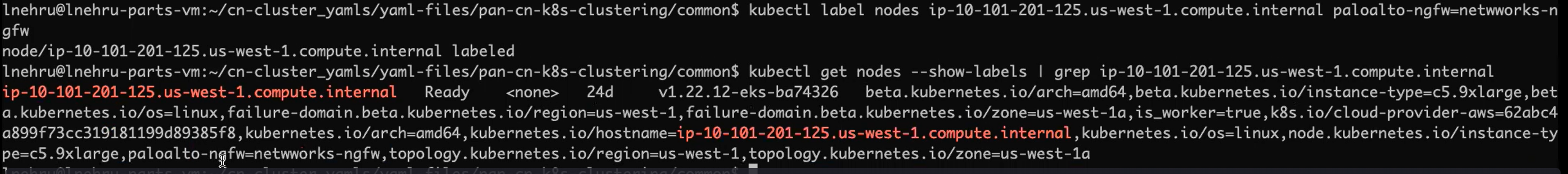

Node Labels

Use the following commands to label all the nodes:

kubectl label node (MP_node_name) Panw-mp=Panw-mp

kubectl label node (DB_node_name) Panw-db=Panw-db

kubectl label node (GW_node_name) Panw-gw=Panw-gw

kubectl label node (NGFW_node_name) Panw-ngfw=Panw-ngfw

The following are examples of node labels:

CN-NGFW - paloalto-ngfw: networks-ngfw

CN-MGMT - paloalto-mgmt: networks-mgmt

CN-GW - paloalto-gw: networks-gw

CN-DB - paloalto-db: networks-db

Provide a key-value pair for each node type. Palo Alto Networks recommends the defaults shown in the examples, in which each key begins with paloalto and each value begins with networks. If you change the node labels, you must make the corresponding changes in the configuration.
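The labeling commands above can be scripted when a nodegroup has several nodes. The following is a minimal sketch, not part of the CN-Series tooling: the helper name label_cmd and the node names in the usage comments are hypothetical, and it assumes the Panw-(role) labels used in the commands above. It only prints each command so that you can review it before running it.

```shell
#!/bin/sh
# Print the kubectl command that labels one node for a given role.
# Roles follow the labeling commands above: mp, db, gw, ngfw.
label_cmd() {
    role="$1"
    node="$2"
    case "$role" in
        mp|db|gw|ngfw)
            printf 'kubectl label node %s Panw-%s=Panw-%s\n' "$node" "$role" "$role"
            ;;
        *)
            echo "unknown role: $role" >&2
            return 1
            ;;
    esac
}

# Usage (hypothetical node names): review the output, then pipe it to sh.
#   label_cmd mp node-a
#   label_cmd gw node-b | sh
# Afterwards, list the nodes that carry a given label:
#   kubectl get nodes -l Panw-gw=Panw-gw
```

Printing the command instead of running it keeps the sketch safe to try on a workstation that has no cluster access.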

After labeling the nodes, download the YAML files needed to bring up the cluster.

Service Account

The extended permissions for deployments are provided by a service account YAML. Your Kubernetes cluster must be ready before you create the service accounts.

- Run the service account YAML, plugin-deploy-serviceaccount.yaml.

The service account enables the permissions that Panorama requires to authenticate to the cluster for retrieving Kubernetes labels and resource information. This service account is named pan-plugin-user by default.

- Navigate to yaml-files/clustering folder/common and deploy the following:

  kubectl apply -f plugin-deploy-serviceaccount.yaml

  kubectl apply -f pan-mgmt-serviceaccount.yaml

  kubectl -n kube-system get secrets | grep pan-plugin-user-token

  Create a credential file, for example cred.json, that includes the secrets, and save this file. You need to upload this file to Panorama to set up the Kubernetes plugin for monitoring the clusters.

- View the secrets associated with this service account and write them to the credential file:

  kubectl -n kube-system get secrets (secrets-from-above-command) -o json >> cred.json

- Upload cred.json to the Kubernetes plugin and verify the validation status.

After the first validation post commit on Panorama, the plugin will continue to invoke the validation logic at regular intervals and update the validation status on the UI.
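The secret lookup and export steps above can be combined into one pass. This is a minimal sketch under stated assumptions: the helper name token_secret_name is hypothetical, and it assumes that the secret name is the first column of the kubectl get secrets output and contains pan-plugin-user-token, as in the grep command above.

```shell
#!/bin/sh
# Read `kubectl get secrets` output on stdin and print the name (first
# column) of the first secret whose name contains pan-plugin-user-token.
token_secret_name() {
    awk '$1 ~ /pan-plugin-user-token/ { print $1; exit }'
}

# Usage against a live cluster (not run here):
#   secret=$(kubectl -n kube-system get secrets | token_secret_name)
#   kubectl -n kube-system get secrets "$secret" -o json > cred.json
```

The usage comment redirects with > rather than >> so that a rerun replaces cred.json instead of appending a second JSON document to it; this is a choice made for the sketch, not something the procedure requires.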


Interfaces

You need to create the ENIs required for CN-DB, CN-NGFW, and CN-GW. Identify the PCI Bus IDs of these interfaces; you will use them to create the network attachment definitions that interconnect the pods.

- SSH into the node using the key and user you created while creating the cluster.

  ssh ec2-user@(node_ip) -i private_(key)

- Install the ethtool package.

  sudo yum install ethtool

  sudo yum update -y && sudo yum install ethtool -y

- Identify the interface name.

  ifconfig

- Identify the PCI Bus ID of the interface to deploy the network connectivity on the pods.

  ethtool -i (i/f)

Here eth0 is the node management interface, eth1 is the CI interface, eth2 is the TI interface, eth3 is external interface 1, and eth4 is external interface 2. On the node labeled for CN-MGMT, you will find only the eth0 interface for management. For CN-DB you will have eth1; for CN-NGFW you will have eth1 and eth2; and for CN-GW you will have eth1, eth2, and as many external interfaces as you created in your environment.

net-attach-1 - 0000:00:08.0

net-attach-2 - 0000:00:09.0

net-attach-def-ci-db - 0000:00:06.0

net-attach-def-ci-gw - 0000:00:06.0

net-attach-def-ci-ngfw - 0000:00:06.0

net-attach-def-ti-gw - 0000:00:07.0

net-attach-def-ti-ngfw - 0000:00:07.0

All the pods of a deployment must be on different nodes because they use the same network attachment definitions, so each pod needs access to the same PCI bus ID. For example, if the net-attach uses PCI ID 6 for the C/U pod CI link, then each C/U pod must be placed on a node that has a PCI ID 6 interface from the same subnet.

- Modify the PCI Bus ID on the Network Attachment Definition YAML.

  { "cniVersion": "0.3.1", "type": "host-device", "pciBusID": "0000:00:07.0" }

Here the first link eth1 is used as CI, eth2 is used as TI, and eth3 onwards is used for external links.

- Apply the prerequisite YAML files.

  kubectl apply -f pan-mgmt-serviceaccount.yaml

  kubectl apply -f net-attach-def-1.yaml

  kubectl apply -f net-attach-def-2.yaml

  kubectl apply -f net-attach-def-ci-db.yaml

  kubectl apply -f net-attach-def-ci-gw.yaml

  kubectl apply -f net-attach-def-ci-ngfw.yaml

  kubectl apply -f net-attach-def-ti-gw.yaml

  kubectl apply -f net-attach-def-ti-ngfw.yaml
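The PCI Bus ID lookup and the network attachment configuration described above can be tied together in a small script. This is a sketch only: the helper names bus_info and host_device_config are hypothetical, and it assumes that ethtool -i prints a bus-info: line for the interface, as it does for PCI network devices. The JSON fields are the ones shown in the Network Attachment Definition example above.

```shell
#!/bin/sh
# Read `ethtool -i <interface>` output on stdin and print the PCI bus ID
# taken from the "bus-info:" line.
bus_info() {
    awk -F': ' '$1 == "bus-info" { print $2 }'
}

# Print the host-device CNI config for the PCI bus ID given as $1.
host_device_config() {
    printf '{ "cniVersion": "0.3.1", "type": "host-device", "pciBusID": "%s" }\n' "$1"
}

# Usage on a node (not run here); eth2 is the TI interface in this topology:
#   id=$(ethtool -i eth2 | bus_info)
#   host_device_config "$id"
```

Generating the config from the value that ethtool reports avoids copying the bus ID by hand into each network attachment definition.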

In OpenShift, also run kubectl apply -f ctrcfg-pidslimit.yaml. For more information on pidlimit, see Configuration Tasks.

If static PVs are used, create the static PV mount volumes on nodes labeled for CN-MGMT pods.

/mnt/pan-local1, /mnt/pan-local2, /mnt/pan-local3, /mnt/pan-local4, /mnt/pan-local5, /mnt/pan-local6
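Creating the six mount directories listed above can be scripted. A minimal sketch, assuming the directories only need to exist on the node: the helper name make_pv_dirs and its optional base-directory argument are hypothetical conveniences, and the sketch does not create the PersistentVolume objects themselves.

```shell
#!/bin/sh
# Create pan-local1 .. pan-local6 under a base directory (default: /mnt).
make_pv_dirs() {
    base="${1:-/mnt}"
    for i in 1 2 3 4 5 6; do
        mkdir -p "$base/pan-local$i" || return 1
    done
}

# Usage on each node labeled for CN-MGMT pods (writing to /mnt needs root):
#   make_pv_dirs
```

The base-directory argument exists only so that the function can be tried against a scratch directory before it is run on a node.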