Prisma AIRS

AI Runtime API


See all the new features available for the Prisma AIRS AI Runtime API.

Token-based Licensing for Prisma AIRS AI Runtime API

February 2026

Prisma AIRS API now supports token-based licensing, shifting from an API call-based consumption model to a more flexible and scalable monthly token consumption model. This aligns your environment with current AI industry standards, providing granular, content-driven usage measurement priced per billion monthly tokens, where a token is four characters. You can manage allocations in the Customer Support Portal (CSP) and monitor consumption in Strata Cloud Manager (SCM). For more information, see Create a Deployment Profile for Prisma AIRS AI Runtime API.
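As a rough illustration of the four-characters-per-token rule, the following sketch estimates token consumption for a batch of scanned content. The function names are hypothetical, and rounding partial tokens up is an assumption rather than documented billing behavior.

```python
import math

CHARS_PER_TOKEN = 4  # from the text: one token is four characters


def estimate_tokens(text: str) -> int:
    """Estimate the tokens consumed by one piece of scanned content.

    Rounding partial tokens up is an assumption, not documented behavior.
    """
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_monthly_tokens(payloads: list[str]) -> int:
    """Sum the per-payload estimates for all content scanned in a month."""
    return sum(estimate_tokens(payload) for payload in payloads)


sample = ["What is our refund policy?", "Refunds are issued within 30 days."]
print(estimate_monthly_tokens(sample))
```

An estimate like this may help when sizing an allocation in CSP, but the consumption reported in SCM remains the authoritative figure.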

AI Agent Discovery

November 2025

Prisma AIRS supports AI Agent Discovery to track enterprise AI agents you create using simple, no-code/low-code tools provided by cloud providers. Think of this process as creating an inventory of, and a security guard for, your AI bots (or agents); these agents are built on cloud platforms like AWS Bedrock and Azure AI Foundry/OpenAI.

AI Agent Discovery addresses two main objectives:

- Determine what AI agents exist. This part of the process, referred to as configuration discovery, involves finding the blueprint of each agent, including its name, description, the brain (Foundation Model) it uses, what it knows (Knowledge Bases), and what it can do (Tools).

- Determine how the AI agents are used. This part of the process, referred to as runtime interactions, involves watching the agent while it's working to see if it talks to another agent, uses a tool, or asks its brain (model) a question. This is primarily supported for AWS agents using their activity logs.
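The two objectives above can be pictured as two kinds of records: a configuration blueprint and a runtime interaction. The sketch below models them with dataclasses; every class and field name is hypothetical and chosen only to mirror the terms in the list, not the product's actual schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class InteractionKind(Enum):
    """The runtime interactions named in the text."""
    AGENT_TO_AGENT = "agent_to_agent"
    TOOL_USE = "tool_use"
    MODEL_CALL = "model_call"


@dataclass
class AgentConfig:
    """Configuration discovery: the blueprint of one agent."""
    name: str
    description: str
    foundation_model: str
    knowledge_bases: list[str] = field(default_factory=list)
    tools: list[str] = field(default_factory=list)


@dataclass
class RuntimeInteraction:
    """Runtime interactions: one observed action by an agent."""
    agent_name: str
    kind: InteractionKind
    target: str


agent = AgentConfig(
    name="support-bot",
    description="Answers billing questions",
    foundation_model="example-model",
    knowledge_bases=["billing-faq"],
    tools=["lookup_invoice"],
)
event = RuntimeInteraction(agent.name, InteractionKind.TOOL_USE, "lookup_invoice")
print(agent, event, sep="\n")
```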

AI Agent Discovery supports SaaS and enterprise AI agents. With this

functionality you can discover agents from an onboarded cloud account and secure

them using the AI Runtime API Intercept workflow.

In this release, only AWS Bedrock agents and Azure AI Foundry Service agents (and Azure OpenAI Assistants) are supported.

AI Sessions and Applications Views

October 2025

The new AI Applications and AI Sessions features provide security

practitioners with enhanced visibility into AI-enabled application security. This

enables real-time insights into AI applications and their conversational sessions,

allowing for proactive detection and prevention of security profile violations.

These features address the limitation of traditional security views by grouping

related API calls into coherent sessions, offering a comprehensive understanding of

AI application behavior.

Key capabilities include:

- AI Sessions: View grouped, sequential API calls representing full conversations or interactions.

- AI Applications: Access an inventory of all protected AI applications, showing violation trends and associated security profiles.
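To make the idea of grouping related API calls into sessions concrete, here is a minimal sketch. The `session_id` and `timestamp` keys are hypothetical; the text does not specify how calls are correlated.

```python
from collections import defaultdict


def group_into_sessions(calls: list[dict]) -> dict[str, list[dict]]:
    """Group API calls by session and order each group chronologically."""
    sessions = defaultdict(list)
    for call in calls:
        sessions[call["session_id"]].append(call)
    for session_calls in sessions.values():
        session_calls.sort(key=lambda call: call["timestamp"])
    return dict(sessions)


calls = [
    {"session_id": "s1", "timestamp": 2, "prompt": "And the refund window?"},
    {"session_id": "s2", "timestamp": 1, "prompt": "Reset my password"},
    {"session_id": "s1", "timestamp": 1, "prompt": "What is your return policy?"},
]
sessions = group_into_sessions(calls)
for session_id, session_calls in sessions.items():
    print(session_id, [call["prompt"] for call in session_calls])
```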

For more information, see Use the Prisma AIRS AI Sessions and API

Application Views.

Command Prompt Injection

October 2025

You can detect command injection attacks in AI applications using the Malicious Code Detection capabilities in Prisma AIRS. This feature identifies command injection scripts within prompts, responses, and MCP content that may attempt to execute malicious commands in your environment. When you enable Malicious Code Detection in your AI security profile, the system extracts and analyzes code blocks from content, including scripts encoded with Base64, ROT13, hexadecimal, or compressed formats.
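To show what encoded scripts can look like, the sketch below encodes a harmless command in each of the formats named above and then decodes it again. It only illustrates the encodings and is not the detection logic Prisma AIRS uses; gzip stands in for "compressed formats" as an assumption.

```python
import base64
import codecs
import gzip

command = "echo demo > /tmp/demo.txt"  # harmless stand-in for an injected command

# The same command hidden behind each encoding.
encoded = {
    "base64": base64.b64encode(command.encode()).decode(),
    "rot13": codecs.encode(command, "rot_13"),
    "hex": command.encode().hex(),
    "gzip": gzip.compress(command.encode()),  # assumed compression format
}


def decode(kind: str, payload) -> str:
    """Recover the original text from one encoded payload."""
    if kind == "base64":
        return base64.b64decode(payload).decode()
    if kind == "rot13":
        return codecs.decode(payload, "rot_13")
    if kind == "hex":
        return bytes.fromhex(payload).decode()
    if kind == "gzip":
        return gzip.decompress(payload).decode()
    raise ValueError(f"unsupported encoding: {kind}")


for kind, payload in encoded.items():
    print(kind, "->", decode(kind, payload))
```

A scanner that inspected only the raw text would miss every one of these variants, which is why decoding before analysis matters.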

You should enable this protection if you are using agent tools, MCP servers, or code

generation applications where input could contain embedded commands. The detection

service extracts code snippets from content using machine learning models, then

analyzes those snippets through ATP services to identify command injection patterns.

You can configure the feature alongside existing malicious code detection for known

malware scripts.

You will need this feature if you are processing user-generated content, integrating

with external AI services, or generating executable code, as it prevents attackers

from injecting commands that could execute in your development environment or

production systems. The detection capability is available in both synchronous and

asynchronous scan APIs, allowing you to integrate protection into existing AI

application workflows.

MCP Threat Detection

September 2025

Prisma AIRS protects your AI agents from supply chain attacks by adding support for

Model Context Protocol (MCP) tools. This feature adds security scanning capabilities

to the MCP ecosystem, specifically targeting two critical threats:

- Context poisoning via tool definition, tool input (request) and tool output (response) manipulation. This prevents malicious actors from tampering with MCP tool definitions that could trick AI agents into performing harmful actions like leaking sensitive data or executing dangerous commands.

- Exposed credentials and identity leakage. This detects and blocks sensitive data (tokens, credentials, API keys) from being exposed through MCP tool interactions.

This functionality provides a number of benefits:

- Zero-touch security. No new UI or profile configuration required.

- Comprehensive threat detection. Leverages existing detection services (DLP, prompt injection, toxic content, etc.).

- Real-time protection. Works with both synchronous and asynchronous scanning APIs.

- Supply chain security. Validates tool descriptions, inputs and outputs as part of MCP communication.

It ensures that as AI agents become more powerful and autonomous through MCP tools,

they cannot be weaponized against your organization through compromised or malicious

tools in the MCP ecosystem.

This feature represents a broader initiative to secure AI agents that use MCP for tool integration, ensuring that MCP-based AI systems remain secure against manipulation and data exposure attacks. For more information, see Detect MCP Threats.

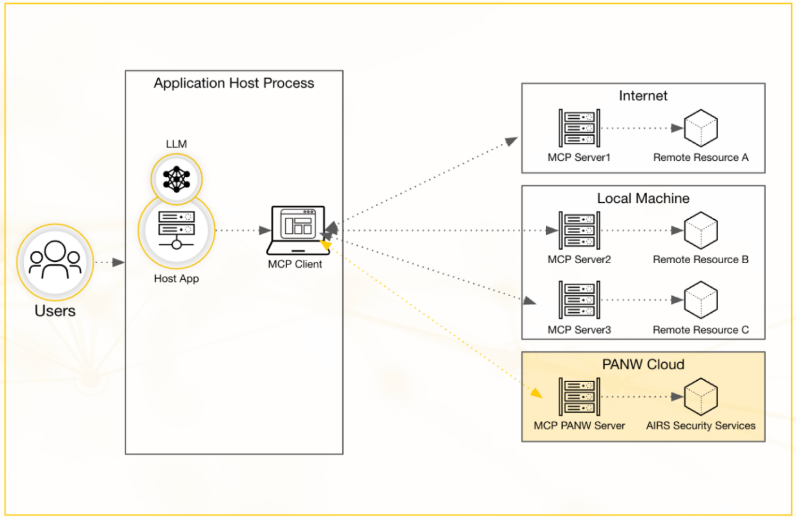

Securing AI Agents with a Standalone MCP Server

September 2025

The Prisma AIRS Model Context Protocol (MCP) server is a standalone, easy-to-deploy remote server that addresses significant integration challenges in securing agentic AI applications. You can use this service to protect your AI applications without the complex infrastructure dependencies or extensive code changes that traditional security solutions require.

The Prisma AIRS MCP server in the Palo Alto Networks cloud environment serves as a centralized security gateway for AI agent interactions. The server validates all tool invocations through the MCP and provides real-time threat detection on tool inputs, outputs, and tool descriptions or schemas. The Prisma AIRS MCP server empowers you within the MCP ecosystem by delivering security-focused building blocks for AI and copilot workflows. Its universal, easy-to-integrate interface works with any MCP client, enabling AI agents to translate plain-language user requests into secure, powerful workflows.

When you implement the MCP server as a tool, you only need to specify the protocol

type, URL, and API key in your configuration file to automatically scan all external

MCP server calls for vulnerabilities. This minimal setup enables you to detect

various threats including prompt injection, MCP context poisoning, and exposed

credentials without disrupting your development workflow. The service is valuable

for low-code or no-code platforms where inserting security between the AI agent and

its tool calls would otherwise be challenging. You can protect your AI applications

with features such as AI Application Protection, AI Model Protection, and AI Data

Protection, each designed to safeguard specific aspects of your AI workflows.
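The three settings mentioned above (protocol type, URL, and API key) could be assembled into a client configuration along the following lines. This is a sketch under stated assumptions: the key names, the `servers` wrapper, and all values are hypothetical placeholders, so consult your MCP client's documentation for the real file format.

```python
import json

# Hypothetical key names; the text only says that a protocol type,
# a URL, and an API key are required.
REQUIRED_FIELDS = ("type", "url", "api_key")


def build_mcp_server_entry(protocol_type: str, url: str, api_key: str) -> dict:
    """Return one MCP server entry, rejecting empty values."""
    entry = {"type": protocol_type, "url": url, "api_key": api_key}
    missing = [name for name in REQUIRED_FIELDS if not entry[name]]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    return entry


config = {
    "servers": {
        "prisma-airs": build_mcp_server_entry(
            protocol_type="http",               # placeholder value
            url="https://example.invalid/mcp",  # placeholder, not a real endpoint
            api_key="<your-api-key>",           # load from a secret store in practice
        )
    }
}
print(json.dumps(config, indent=2))
```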

The Prisma AIRS MCP server integrates with your existing API infrastructure, enabling

you to view comprehensive scan logs through familiar interfaces. This integration

ensures you maintain visibility into security events while simplifying your security

operations.

Multiple Applications per Deployment Profile

September 2025

Prisma AIRS API allows you to associate multiple applications with a single deployment

profile, removing the need to create separate deployment profiles for

each application.

With this feature, you can associate up to 20 applications with a single deployment

profile, significantly simplifying management while maintaining consistent security

policies. When creating a new application, you can either select an existing

activated deployment profile or activate a new one before establishing the

association. This flexibility helps you organize your applications based on shared

security requirements or business functions.

All applications associated with a single deployment profile share the daily API call quota tied to that deployment profile. When setting this value in CSP, consider how many applications (the maximum is 20) you plan to associate with this deployment profile.

You can easily modify which deployment profile an application uses through the

application edit view, allowing you to adapt as your security needs evolve. The

application detail and list views clearly display which deployment profile each

application is linked to, providing transparency and helping you maintain proper

governance. This capability is fully supported through both Strata Cloud Manager and

API endpoints, enabling you to programmatically manage multiple applications per

deployment profile for automation and integration scenarios.

Unified AI Security Logging

August 2025

API scan events, including blocked threats, now integrate with the Strata Logging Service, providing a unified log viewer interface for both

API-based and network-based AI security events. The Log Viewer now includes a new log type,

Prisma AIRS AI Runtime Security API, which displays the scan API logs.

This integration allows Security Operations Center (SOC) teams to be alerted to critical threats. The integration also enables a powerful query builder to search and analyze scan data and supports out-of-the-box queries for analyzing threats. Log forwarding is now supported for Prisma AIRS AI Runtime: API intercept. This ensures comprehensive visibility and streamlines security operations across multiple supported regions.

Enhance AI Security with India Region Support

August 2025

You can now deploy API detection services in the India region, ensuring compliance and improving performance. When creating a deployment profile, you can select India as your preferred region. This choice determines the underlying region for data processing and storage.

When you create a deployment profile for the API intercept and associate it with a TSG, you can select your preferred region: United States, Europe (Germany), or India. A separate, region-specific API endpoint is provided for India. This deployment includes all Prisma AIRS AI Runtime: API intercept services and routes detection requests to the nearest APAC-based region for each respective service, reducing latency and data transfer costs.

Malicious Code Extraction from Plain Text

July 2025

Malicious code embedded directly in plain-text fields of API prompts or

responses is detected across both synchronous and asynchronous scan services. Even

if the code isn’t in a traditional file format, it is identified and analyzed. For

testing purposes, send malicious code in plain text within the

API “prompt” or “response” fields to confirm detection.

As AI applications become more integrated, the risk of malicious code

injection through user input or model responses increases. This feature helps

safeguard your AI models and applications by providing a layer of defense against

such threats, even when the code is embedded in formats other than traditional

files.

Strengthen Threat Analysis with User IP Data

July 2025

You can include the end user's IP address in both synchronous and asynchronous scan requests to enhance threat correlation and incident response. A new `user_ip` field in the scan request metadata schema carries the originating IP address of the end user. Knowing the source IP address of the end user involved in a scan gives security analysis crucial context, making it easier to correlate threats and streamline incident response.
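A scan request body that carries the end user's IP address might be built as follows. Only the `user_ip` metadata field and the "prompt" content field come from the text; the other field names (`ai_profile`, `profile_name`, `contents`) and the helper itself are assumptions to verify against the API reference.

```python
import ipaddress
import json


def build_scan_request(prompt: str, user_ip: str, profile_name: str) -> dict:
    """Build a scan request body that includes the end user's IP address."""
    # Raises ValueError if user_ip is not a valid IPv4 or IPv6 address.
    ip = ipaddress.ip_address(user_ip)
    return {
        "ai_profile": {"profile_name": profile_name},  # assumed field names
        "metadata": {"user_ip": str(ip)},              # field named in the text
        "contents": [{"prompt": prompt}],              # assumed wrapper name
    }


payload = build_scan_request(
    prompt="Summarize this quarter's sales figures.",
    user_ip="203.0.113.7",               # documentation-range example address
    profile_name="example-profile",      # hypothetical profile name
)
print(json.dumps(payload, indent=2))
```

Validating the address before sending keeps malformed values out of the logs that analysts later rely on for correlation.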

Enhance Python Application Security with Prisma AIRS SDK

May 2025

The Prisma AIRS API Python SDK integrates advanced AI security scanning into Python applications. It supports Python versions 3.9 through 3.13, offering synchronous and asynchronous scanning, robust error handling, and configurable retry strategies.

This SDK allows developers to "shift left" security, embedding real-time

AI-powered threat detection and prevention directly into their Python applications.

By providing a streamlined interface for scanning prompts and responses for

malicious content, data leaks, and other threats, it helps secure your AI models,

data, and applications from the ground up.

API Detection Services for the European Region

May 2025

You can now use Strata Cloud Manager to manage API detection services

hosted in the EU (Germany) region. When creating a deployment profile, you select your

preferred region, and all subsequent scan requests are routed to the corresponding

regional API endpoint. This allows for localized hosting and processing of your AI

security operations.

By enabling regional deployment of AI security services, you can comply with data residency requirements and reduce latency by processing security scans closer to your European users and infrastructure.

Automatic Sensitive Data Masking in API Payloads

May 2025

Automatic detection and masking of sensitive data patterns is now available in the scan API output, which scans the prompts and responses of Large Language Models (LLMs). This feature replaces sensitive information such as Social Security Numbers and bank account details with "X" characters while maintaining the original text length. API scan logs indicate masked content with the new "Content Masked" column.
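The length-preserving masking described above can be illustrated with a small sketch. The regular expression covers only one US Social Security Number format, and masking the hyphens along with the digits is an assumption; the actual detection patterns are not described in the text.

```python
import re

# Illustrative pattern for one SSN format (NNN-NN-NNNN) only.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_sensitive(text: str) -> str:
    """Replace each match with 'X' characters of the same length."""
    return SSN_PATTERN.sub(lambda match: "X" * len(match.group()), text)


original = "SSN 123-45-6789 on file"
masked = mask_sensitive(original)
print(masked)
```

Because each match is replaced character for character, offsets into the masked text still line up with the original.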

As LLMs become more prevalent, the risk of inadvertently exposing sensitive

data increases. This automatic masking capability enhances data privacy and

maintains compliance with data protection regulations. Proactively obscuring

sensitive information reduces the risk of data leakage, strengthens the security

posture of AI applications, and builds greater trust in the use of AI models by

ensuring sensitive details are never fully exposed in logs or intermediary

steps.

Protect AI Agent Workflows on Low-Code or No-Code Platforms

May 2025

You can protect and monitor AI agents against

unauthorized actions and system manipulation. This feature extends security to AI

agents developed on low-code/no-code platforms, like Microsoft Copilot Studio, AWS

Bedrock, GCP Vertex AI, and VoiceFlow, as well as custom workflows.

As AI agents become more prevalent, they introduce new attack surfaces.

This protection is crucial for ensuring the integrity and secure operation of your

AI agents, regardless of how the agents were developed.

Prevent Inaccuracies in LLM Outputs with Contextual Grounding

May 2025

You can now enable Contextual Grounding detection for your LLM responses, which detects responses containing information that is absent from, or contradicts, the provided context. This feature works by comparing the LLM's generated output against a defined input context. If the response includes information that wasn't supplied in the context or directly contradicts it, the detection flags these inconsistencies, helping to identify potential hallucinations or factual inaccuracies.

Ensuring that LLM responses are grounded in the provided context is

critical for applications where factual accuracy and reliability are paramount.

Define AI Content Boundaries with Custom Topic Guardrails

May 2025

You can enable the Custom Topic Guardrails detection service to identify a topic violation in a given prompt or response. This feature allows you to define specific topics that must be allowed or blocked within the prompts and responses processed by your LLMs. The system then monitors content for violations of these defined boundaries, ensuring that interactions with your LLMs stay within acceptable or designated subject matter.

Custom Topic Guardrails provide granular control over the content your AI

models handle, offering crucial protection against various risks. For example, you

can prevent misuse, maintain brand integrity, ensure compliance, and enhance the

focus of the LLM's outputs.

Detect Malicious Code in LLM Outputs

March 2025

Code snippets generated by Large Language Models (LLMs) can be scanned for potential security threats with the Malicious Code Detection feature. This feature is crucial for preventing supply chain attacks, enhancing application security, maintaining code integrity, and mitigating AI risks.

The system supports scanning for malicious code in multiple languages,

including JavaScript, Python, VBScript, PowerShell, Batch, Shell, and Perl.

To activate this protection, you need to enable it within the API Security

Profile. When configured, this feature can block the execution of potentially

malicious code or be set to allow, depending on your security needs. This capability

is vital for organizations that are increasingly leveraging generative AI for

development, as it helps to secure against the risks of LLM poisoning, where

adversaries intentionally introduce malicious data into training datasets to

manipulate model outputs.

Detect Toxic Content in LLM Requests and Responses

March 2025

To protect AI applications from generating or responding to inappropriate

content, a new capability adds toxic content detection to LLM requests and

responses. This advanced detection is designed to

counteract sophisticated prompt injection techniques used by malicious actors to

bypass standard LLM guardrails. The feature identifies and mitigates content that

contains hateful, sexual, violent, or profane themes.

This capability is vital for maintaining the ethical integrity and safety of AI applications. It helps protect brand reputation, ensures user safety, mitigates misuse, and promotes responsible AI. By analyzing both user inputs and model outputs, the system acts as a filter to intercept requests and responses that violate predefined safety policies.

The system can either block the request entirely or rewrite the output to

remove the toxic language. In addition to detecting toxic content, it also helps

prevent bias and misinformation, which are common risks associated with LLMs. By

implementing this security layer, you can ensure that your AI agents and

applications operate securely and responsibly, safeguarding against both intentional

and unintentional generation of harmful content.

Centralized Management of AI Firewalls

February 2025

You can now manage and monitor your AI firewalls with

Panorama. This integration allows you to leverage a central

platform for defining and observing AI security policies and logs.

This capability extends to securing VM workloads and Kubernetes clusters,

allowing for a unified approach to security across your diverse environments.

Centralized management provides a number of key benefits, including unified

visibility, streamlined operations, consistent policy enforcement, and accelerated

incident response.

Customize API Security with Centralized Management

January 2025

You can manage Applications, API Keys, and Security

Profiles from a centralized dashboard within Strata Cloud

Manager. This allows you to create and manage multiple API keys, define and manage

applications, and create and manage AI API security profiles and their revisions.

This centralized approach enables you to tailor security policy rules precisely to

the unique needs of different applications and API integrations.

Automate AI Application Security with Programmatic APIs

November 2024

Prisma AIRS API intercept is a threat

detection service that enables you to discover and secure applications by

programmatically scanning prompts and models for threats. You can implement a

Security-as-Code approach using our REST APIs to protect your AI models,

applications, and AI data.

These REST APIs seamlessly integrate AI security scanning into your

application development and deployment workflows. This methodology enables automated

and continuous protection for your AI models, applications, and the data they

process, making security an intrinsic part of your development lifecycle.

Extend Prisma AIRS AI Network Security Across AWS and Azure

October 2024

You can now discover your Azure and AWS cloud assets by onboarding your accounts in Strata Cloud

Manager for central management. You can deploy and secure these environments with

Prisma AIRS AI Runtime: Network intercept.

This expanded support enables unified multi-cloud protection, enhanced

visibility, streamlined deployment, and reduced risk.

Extend AI Network Security to Google Cloud Platform

September 2024

You can now discover your GCP cloud assets by onboarding your GCP account in Strata Cloud Manager, a centralized management platform that provides visibility into your AI workload deployments. You can then deploy and secure your GCP environment with network intercept.

This expanded support for GCP provides dedicated protection, enhanced

visibility, streamlined deployment, and reduced risk.