CN-Series Troubleshooting

On your OpenShift environment, deploy the CN-Series firewalls.


| Term | Definitions |
|---|---|
| MP | CN-MGMT |
| DP | CN-NGFW |

Connect to MP or DP

Run the following command to find your MP or DP pod name:

kubectl get pods -n=<namespace>

Run the following commands to connect to the MP or DP pods. To open the MP CLI as admin:

kubectl -n kube-system exec -it <mp-pod-name> -- su admin

To open a root shell on the MP or DP:

kubectl -n kube-system exec -it <mp/dp-pod-name> -- bash
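If you script these checks, a small helper can pick the MP, DP, and CNI pod names out of the "kubectl get pods" table. This is a minimal Python sketch, not a Palo Alto Networks tool. The name prefixes (pan-mgmt-sts, pan-ngfw-ds, pan-ngfw-dep, pan-cni) are taken from pod names in the examples on this page and are assumptions for your deployment; the coredns row in the sample is illustrative.

```python
# Hypothetical helper: sort pod names from "kubectl get pods -n kube-system"
# output into MP, DP, and CNI groups by name prefix. The prefixes come from
# pod names shown on this page; adjust them for your deployment.
POD_PREFIXES = {
    "MP": ("pan-mgmt-sts",),
    "DP": ("pan-ngfw-ds", "pan-ngfw-dep"),
    "CNI": ("pan-cni",),
}


def classify_pods(kubectl_output: str) -> dict[str, list[str]]:
    """Return {"MP": [...], "DP": [...], "CNI": [...]} from a kubectl pod table."""
    groups: dict[str, list[str]] = {role: [] for role in POD_PREFIXES}
    rows = kubectl_output.strip().splitlines()[1:]  # skip the NAME/READY/... header
    for row in rows:
        fields = row.split()
        if not fields:
            continue
        name = fields[0]
        for role, prefixes in POD_PREFIXES.items():
            if name.startswith(prefixes):  # str.startswith accepts a tuple
                groups[role].append(name)
                break
    return groups


sample = """NAME                       READY   STATUS    RESTARTS   AGE
pan-mgmt-sts-0             1/1     Running   0          42m
pan-ngfw-ds-4lhhc          1/1     Running   0          40m
pan-cni-csqt4              1/1     Running   0          41m
coredns-558bd4d5db-x7k2p   1/1     Running   0          2d
"""
print(classify_pods(sample))
```

Pods that match none of the prefixes, such as coredns here, are simply ignored.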

Pods fail to pull image with error "x509: certificate signed by unknown authority" (mostly seen with native/on-prem Kubernetes clusters)

On all worker nodes, update /etc/docker/daemon.json with the image repository you are pulling from. Create the daemon.json file if it doesn't exist. For example:

root@ctnr-debug-worker-2:~# cat /etc/docker/daemon.json
{ "insecure-registries" : ["docker-panos-ctnr.af.paloaltonetworks.local", "panos-cntr-engtools.af.paloaltonetworks.local", "docker-public.af.paloaltonetworks.local", "panos-cntr-engtools-releng.af.paloaltonetworks.local", "docker-qa-pavm.af.paloaltonetworks.local"] }
root@ctnr-debug-worker-2:~#

Then restart Docker with the command "systemctl restart docker.service".
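Editing daemon.json by hand on every worker node is error-prone. This is a minimal Python sketch that merges registry names into an existing (or missing) daemon.json without dropping other settings or duplicating entries. It assumes the nodes use Docker as the container runtime, as in the steps above. The registry names in the usage lines are taken from the example.

```python
import json


def add_insecure_registries(daemon_json_text: str, registries: list[str]) -> str:
    """Merge registries into the "insecure-registries" list of a daemon.json document.

    Empty or whitespace-only input is treated as a missing file, so a new
    config is created. Other keys and existing registry entries are preserved,
    and a registry that is already listed is not added again.
    """
    config = json.loads(daemon_json_text) if daemon_json_text.strip() else {}
    current = config.setdefault("insecure-registries", [])
    for registry in registries:
        if registry not in current:
            current.append(registry)
    return json.dumps(config, indent=2) + "\n"


existing = '{"insecure-registries": ["docker-public.af.paloaltonetworks.local"]}'
print(add_insecure_registries(existing, [
    "docker-public.af.paloaltonetworks.local",
    "docker-panos-ctnr.af.paloaltonetworks.local",
]))
```

Write the result back to /etc/docker/daemon.json as root, then restart Docker as described above.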

MP stays in Pending state

Verify the following:

-

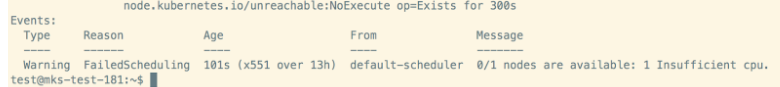

Required resources(node/memory/cpu) are available for MP. We can verify this by checking the command output forkubectl -n kube-system describe <mp-pod-name>

![]()

-

A PersistentVolume (PV) is a piece of storage in the cluster that has been provisioned by server/storage/cluster administrator or dynamically provisioned using Storage Classes. It is a resource in the cluster just like node. A PersistentVolumeClaim (PVC) is a request for storage by a user which can be obtained from PV.PVCs are bound to the PV (kubectl -n kube-system get pvc). If not, delete old pvcs running the following command:“kubectl -n kube-system delete pvc -l appname=pan-mgmt-sts-<whatever>”Check if required directories (pan-local1, pan-local2, pan-local3, pan-local4, pan-local5, pan-local6 under /mnt) are created on at least 2 worker nodes for on-prem setups.The pan-local1, pan-local2, pan-local3, pan-local4, pan-local5, pan-local6 under /mnt are not required for dynamic volume provisioning. Missing EBS CSI driver on AWS EKS is one of the reasons that the MP stays in pending state. You must ensure that EBS CSI driver is enabled in the cluster, check the role and identify the provider for the cluster. For more information, see Managing the Amazon EBS CSI driver as an Amazon EKS add-on.If error is “pan-mgmt-sts-0": pod has unbound immediate PersistentVolumeClaims” exec “kubectl get pvcs -o wide” and “kubectl get pv -o wide”. This should show which pvcs failed to bind.Solution would be to delete older PVCs using command kubectl -n kube-system delete pvc/<pvc-name> or to clean up. Delete all PVCs, PVs. Deploy new PVs and deploy MP.If "k8 describe pod <mp-pod>" errors out with below, make sure PVs are created. 
If not, deploy pan-cn-pv-local.yaml (with node names where the directories are configured)"VolumeBinding" filter plugin for pod ""pan-mgmt-sts-0"": pod has unboundate PersistentVolumeClaimsWarning FailedScheduling <unknown> default-scheduler running "VolumeBinding" filter plugin for pod ""pan-mgmt-sts-0"": pod has unboundate PersistentVolumeClaimslnehru@lnehru-parts-vm:~/cnv1/Kubernetes/pan-cn-k8s-daemonset/eks$ k8l pan-mgmt-sts-012-22-2021 11:34:36.961697 PST INFO: Management container starting running PanOS version 10.1.3-c4712-22-2021 11:34:41.335521 PST ERROR: Failed to start pansw: 2Issue could be that “pan-cn-mgmt-configmap.yaml” does not have mandatory values.
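The PVC binding check can be scripted. This is a minimal Python sketch that reads the default table printed by "kubectl -n kube-system get pvc" and lists claims whose STATUS is anything other than Bound. It assumes NAME is the first column and STATUS the second. The PVC names in the sample are made up.

```python
def unbound_pvcs(kubectl_output: str) -> list[tuple[str, str]]:
    """Return (name, status) for each PVC whose STATUS column is not "Bound".

    Assumes the default table from "kubectl -n kube-system get pvc", where NAME
    is the first column and STATUS is the second.
    """
    problems = []
    for row in kubectl_output.strip().splitlines()[1:]:  # skip header row
        fields = row.split()
        if len(fields) >= 2 and fields[1] != "Bound":
            problems.append((fields[0], fields[1]))
    return problems


# Made-up PVC names, for illustration only.
sample = """NAME    STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS    AGE
pvc-a   Pending                                      local-storage   5m
pvc-b   Bound     pv-b     20Gi       RWO            local-storage   5m
"""
for name, status in unbound_pvcs(sample):
    print(f"{name} is {status}; check its PV with: kubectl get pv -o wide")
```

Any claim this reports is a candidate for the delete-and-redeploy steps above.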

MP keeps crashing

Log in to the MP as root and navigate to /var/cores to see which process is crashing. If the MP Kubernetes logs show the following error:

Device registration request failed : Failed to send request to CSP server

verify the following:

- "pan-cn-mgmt-secret.yaml" has the correct values for these two fields:

  CN-SERIES-AUTO-REGISTRATION-PIN-ID: "<PIN Id>"
  CN-SERIES-AUTO-REGISTRATION-PIN-VALUE: "<PIN-Value>"

- If the above values are correct, make sure the PIN ID and PIN value have not expired on the CSP.
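A quick pre-deployment check can catch an empty or unedited PIN field. This is a minimal Python sketch that assumes the secret stores these fields as plain `KEY: "value"` lines, as shown above. It is a line matcher, not a YAML parser, and it cannot tell whether a PIN has expired on the CSP.

```python
import re

REQUIRED_KEYS = (
    "CN-SERIES-AUTO-REGISTRATION-PIN-ID",
    "CN-SERIES-AUTO-REGISTRATION-PIN-VALUE",
)
# Matches simple upper-case `KEY: "value"` or `KEY: value` lines; not a full YAML parser.
LINE_RE = re.compile(r'^\s*([A-Z0-9-]+)\s*:\s*"?([^"]*)"?\s*$')


def check_pin_fields(secret_yaml: str) -> list[str]:
    """Return a list of problems found with the auto-registration PIN fields."""
    values = {}
    for line in secret_yaml.splitlines():
        match = LINE_RE.match(line)
        if match:
            values[match.group(1)] = match.group(2).strip()
    problems = []
    for key in REQUIRED_KEYS:
        value = values.get(key)
        if value is None:
            problems.append(f"{key} is missing")
        elif not value or value.startswith("<"):  # "<PIN Id>" is still a placeholder
            problems.append(f"{key} is empty or still a placeholder")
    return problems


sample_secret = '''apiVersion: v1
kind: Secret
stringData:
  CN-SERIES-AUTO-REGISTRATION-PIN-ID: "<PIN Id>"
  CN-SERIES-AUTO-REGISTRATION-PIN-VALUE: "<PIN-Value>"
'''
print(check_pin_fields(sample_secret))
```

An empty result means only that both fields are filled in. The PIN's validity still has to be confirmed on the CSP.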

MP fails connecting to Panorama or MP CommitAll failure

- Verify that the MP can reach/ping the Panorama IP. For public clouds, make sure the required security policies are configured to allow reachability between Panorama and the Kubernetes clusters. Log in to the MP:
  - kubectl -n kube-system exec -it <mp-pod-name> -- su admin
  - "show panorama-status" to get the Panorama IP address
  - ping host <panorama-ip>
- If ping works, continue with the verifications below:
  - Verify that the "bootstrap-auth-key" provided in mgmt-secret.yaml is present on Panorama and has not expired.
    - To verify the bootstrap-auth-key, log in to Panorama and run "request bootstrap vm-auth-key show". The key should be valid (not expired).
    - If it is not available, generate one using "request bootstrap vm-auth-key generate lifetime 8760" and update it in pan-mgmt-secret.yaml. Undeploy all YAMLs, clear the PVs and PVCs, and redeploy.
  - Verify that the DG, TS, and Collector Group (CG) configured in mgmt-configmap.yaml are not misspelled and are configured and committed on Panorama.
  - Verify that the pan-mgmt-configmap and secret YAMLs are deployed before mgmt.yaml.
  - Check whether the commit-all from Panorama to the MP succeeded: run "show jobs all" and "show jobs id <id>" to look for failures, fix any configuration on Panorama, and do a commit all/force again.
  - Verify that the Panorama config is pushed to the MP: "show config pushed-shared-policy"
  - Look at configd.log on Panorama from root (enter "debug tac-login response") and search for the MP serial number. It should show why the connection failed.

    vi /var/log/pan/configd.log

    Example:

    2021-03-15 14:19:49.213 -0700 Error: pan_cfg_bootstrap_device_add_to_cfg(pan_cfg_bootstrap_mgr.c:4085): bootstrap: template stack cnv2-template-stack not found, serial=8CABD801686AD
    2021-04-15 14:19:49.213 -0700 bootstrap: candidate cfg ch Error: pan_cfg_bootstrap_vm_auth_key_verify(pan_cfg_bootstrap_mgr.c:3822): Failed to find vm_auth_key 923688689426978, vm_auth_key invalid
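Searching configd.log by serial number is essentially a filtered grep. This is a minimal Python sketch of that filter that keeps only Error and Warning lines. The sample lines are from the example above. Note that bootstrap errors such as the vm_auth_key failure do not always carry the serial, so also review the neighboring lines.

```python
def lines_for_serial(
    configd_log: str,
    serial: str,
    levels: tuple[str, ...] = ("Error:", "Warning:"),
) -> list[str]:
    """Return configd.log lines that mention `serial` and one of the level markers."""
    return [
        line
        for line in configd_log.splitlines()
        if serial in line and any(level in line for level in levels)
    ]


sample = (
    "2021-03-15 14:19:49.213 -0700 Error: pan_cfg_bootstrap_device_add_to_cfg"
    "(pan_cfg_bootstrap_mgr.c:4085): bootstrap: template stack cnv2-template-stack "
    "not found, serial=8CABD801686AD\n"
    "2021-04-15 14:19:49.213 -0700 bootstrap: candidate cfg ch Error: "
    "pan_cfg_bootstrap_vm_auth_key_verify(pan_cfg_bootstrap_mgr.c:3822): Failed to "
    "find vm_auth_key 923688689426978, vm_auth_key invalid\n"
)
for line in lines_for_serial(sample, "8CABD801686AD"):
    print(line)
```

On Panorama, read the file content from /var/log/pan/configd.log and pass it in place of the sample.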

Commit All doesn’t start on MP

Verify on Panorama whether CommitAll jobs are stuck in ACT state. This can happen because of network connectivity issues between the MP and Panorama. In the lab, this issue was resolved by placing the MP/worker node and Panorama in the same subnet. The following Panorama logs can be seen when hitting this issue:

2023-01-19 09:13:51.788 -0800 Error: device_needs_bkup(pan_bkup_mgr.c:323): failed to check out /opt/pancfg/mgmt/devices/8B8AE8CB506CF09/running-config.xml
2023-01-19 09:13:51.938 -0800 Panorama push device-group cn-dg-12c13c51-1 for device 8B8AE8CB506CF09 with merge-with-candidate-cfg include-template flags set.JobId=50860.User=panorama. Dequeue time=2023/01/19 09:13:51.
2023-01-19 09:13:52.812 -0800 Preference list thread was spawned to send to device 8B8AE8CB506CF09 in group CG
2023-01-19 09:13:52.812 -0800 Preference list thread was sent to device 8B8AE8CB506CF09
2023-01-19 09:13:52.813 -0800 DAU2: Will clear all addresses on dev:8B8AE8CB506CF09.
2023-01-19 09:14:35.061 -0800 Error: pan_conn_mgr_callback_expiry_async(cs_conn.c:8781): connmgr: Expired Request. entry:916, msgno=3 devid=8B8AE8CB506CF09
2023-01-19 09:14:35.061 -0800 Error: pan_conn_mgr_callback_expiry_async(cs_conn.c:8781): connmgr: Expired Request. entry:916, msgno=6 devid=8B8AE8CB506CF09
2023-01-19 09:14:35.061 -0800 Error: pan_conn_mgr_callback_expiry_async(cs_conn.c:8781): connmgr: Expired Request. entry:916, msgno=4 devid=8B8AE8CB506CF09
2023-01-19 09:15:05.060 -0800 Error: pan_conn_mgr_callback_expiry_async(cs_conn.c:8781): connmgr: Expired Request. entry:916, msgno=0 devid=8B8AE8CB506CF09
2023-01-19 09:15:05.060 -0800 Error: pan_conn_mgr_callback_expiry_async(cs_conn.c:8781): connmgr: Expired Request. entry:916, msgno=5 devid=8B8AE8CB506CF09
2023-01-19 09:15:05.060 -0800 copy-lcs-pref-list: Response Processor: copy lcs pref job received response from device 8B8AE8CB506CF09 of cookie 2407. Current cookie is 2408. Remaining: 1
2023-01-19 09:15:05.060 -0800 copy-lcs-pref-list: Response Processor: copy lcs pref job received response from device 8B8AE8CB506CF09 of cookie 2408. Current cookie is 2408. Remaining: 1
2023-01-19 09:15:05.060 -0800 Error: pan_async_copy_lcs_pref_list_result(pan_comp_collector.c:2761):
2023-01-19 09:15:05.060 -0800 copy-lcs-pref-list:Failed to receive response from device 8B8AE8CB506CF09. Error - time out sending/receiving message
Error: pan_async_copy_lcs_pref_list_result(pan_comp_collector.c:2761): copy-lcs-pref-list:Failed to receive response from device 8B8AE8CB506CF09. Error - time out sending/receiving message
2023-01-19 09:15:08.545 -0800 connmgr: received disconnect cb from ms for 8B8AE8CB506CF09(1020484)
2023-01-19 09:15:08.545 -0800 connmgr: connection entry removed. devid=8B8AE8CB506CF09 sock=4294967295 result=0
2023-01-19 09:15:08.545 -0800 Handling device conn update [disconnection][activated:1] for 8B8AE8CB506CF09: "server: client is device"
2023-01-19 09:15:08.545 -0800 Error: pan_bkupjobmgr_process_async_result(pan_bkup_mgr.c:208): Failed to receive response from device 8B8AE8CB506CF09. Error - failed to send message
2023-01-19 09:15:08.545 -0800 Error: pan_async_lcs_pref_list_result(pan_comp_collector.c:2681): lcs-pref-list:Failed to receive response from device 8B8AE8CB506CF09. Error - failed to send message
2023-01-19 09:15:08.546 -0800 Panorama HA feedback: 8B8AE8CB506CF09 disconnected
2023-01-19 09:15:08.547 -0800 connmgr: connection entry removed. devid=8B8AE8CB506CF09 (1020484)
2023-01-19 09:15:41.212 -0800 Warning: _register_ext_validation(pan_cfg_mgt_handler.c:4418): reg: device '8B8AE8CB506CF09' not using issued cert.
2023-01-19 09:15:41.213 -0800 Warning: pan_cfg_handle_mgt_reg(pan_cfg_mgt_handler.c:4737): SC3: device '8B8AE8CB506CF09' is not SC3 capable
2023-01-19 09:15:41.213 -0800 SVM registration. Serial:8B8AE8CB506CF09 DG:cn-dg-12c13c51-1 TPL:cn-tmplt-stk-12c13c51-1 vm-mode:0 uuid:4b96eccd-9d66-43b1-a3f3-2318f3e5b2fd cpuid:K8SMP:A6D64F:8410079617204080582: svm_id:
2023-01-19 09:15:41.213 -0800 Error: pan_cfg_bootstrap_device_add_to_cfg(pan_cfg_bootstrap_mgr.c:4020): bootstrap: 8B8AE8CB506CF09 already added to mgd devices

DP pods stay in Pending or ContainerCreating state

Run the following commands, check each command's output for the errors described, and fix them:

- kubectl -n kube-system describe pod/<dp-pod-name>
  If the following error appears in the output, continue by checking the CNI logs and whether the CNI is using Multus:
  MountVolume.SetUp failed for volume "pan-cni-ready" : hostPath type check failed: /var/log/pan-appinfo/pan-cni-ready is not a directory
- kubectl -n kube-system logs <dp-pod-name>
- kubectl -n kube-system describe pod <cni-name-on-same-node>
- kubectl -n kube-system logs <cni-name-on-same-node>

If the CNI logs look like the following, make sure the CNI is running on each node. (On GKE clusters, Network Policy must be enabled for the default CNI to run.)

08-18-2022 23:55:07.397661 UTC DEBUG: PAN CNI config: { "name": "pan-cni", "type": "pan-cni", "log_level": "debug", "appinfo_dir": "/var/log/pan-appinfo", "mode": "service", "dpservicename": "pan-ngfw-svc", "dpservicenamespace": "kube-system", "firewall": [ "pan-fw" ], "interfaces": [ "eth0" ], "interfacesip": [ "" ], "interfacesmac": [ "" ], "override_mtu": "", "kubernetes": { "kubeconfig": "/etc/cni/net.d/ZZZ-pan-cni-kubeconfig", "cni_bin_dir": "/opt/cni/bin", "exclude_namespaces": [], "security_namespaces": [ "kube-system" ] }}
08-18-2022 23:55:07.402812 UTC DEBUG: CNI running in FW Service mode. Bypassfirewall can be enabled on application pods
08-18-2022 23:55:07.454392 UTC CRITICAL: Detected Multus as primary CNI (CONF file 00-multus.conf); waiting for non-multus CNI to become primary CNI.
root@manojmaster:~/pan-cn-k8s-service/native#

If the above error is seen, try undeploying Multus and deleting the file 00-multus.conf from the worker nodes where the CNI and DPs are deployed:

root@manojworker1:/etc/cni/net.d# pwd
/etc/cni/net.d
root@manojworker1:/etc/cni/net.d# rm 00-multus.conf
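Before deleting anything, you can check which config file a node will treat as its primary CNI. This is a minimal Python sketch that assumes the upstream kubelet/libcni behavior: files in /etc/cni/net.d ending in .conf, .conflist, or .json are considered, and the lexicographically first one is used. This is why 00-multus.conf wins in the log above. The other file names in the usage are examples.

```python
import os
from typing import Optional

# Extensions that kubelet/libcni treat as CNI network config files (assumption
# based on upstream libcni behavior).
CNI_CONFIG_SUFFIXES = (".conf", ".conflist", ".json")


def primary_cni_config(net_d: str = "/etc/cni/net.d") -> Optional[str]:
    """Return the lexicographically first CNI config file in net_d, or None."""
    names = sorted(n for n in os.listdir(net_d) if n.endswith(CNI_CONFIG_SUFFIXES))
    return names[0] if names else None


def multus_is_primary(net_d: str = "/etc/cni/net.d") -> bool:
    """True if the first config file looks like a Multus config (e.g. 00-multus.conf)."""
    name = primary_cni_config(net_d)
    return name is not None and "multus" in name.lower()


if __name__ == "__main__" and os.path.isdir("/etc/cni/net.d"):
    print("primary CNI config:", primary_cni_config())
    print("Multus is primary:", multus_is_primary())
```

Files without a config extension, such as ZZZ-pan-cni-kubeconfig, are ignored by this check.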

MP: Not showing Panorama details/status

admin@PA-CTNR> show panorama-status
admin@PA-CTNR>
[root@PA-CTNR /]# cat /opt/pancfg/mgmt/bootstrap/init-cfg.txt.20210527
type=static
netmask=255.255.255.0
cgname=CG
tplname=10_3_252_62-CNv2
ip-address=10.233.99.17
default-gateway=10.233.99.1
dgname=10_3_252_62-CNv2
panorama-server=107.21.240.64
hostname=pan-mgmt-sts-0
vm-auth-key=158251502922307
[root@PA-CTNR /]#

Possible causes:

- pan-cn-mgmt-configmap may have been deployed after pan-cn-mgmt.yaml.
- The pan-cn-mgmt-secret deployment may have failed (possibly because the bootstrap-auth-key starts with '0'). Delete the auth key from Panorama and recreate it, making sure it doesn't start with '0'.
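Part of this can be checked mechanically from the MP's bootstrap file. This is a minimal Python sketch that parses the contents of an init-cfg.txt file like the one above, flags missing bootstrap keys, and flags a vm-auth-key with a leading '0'. The list of required keys is an assumption drawn from the sample file, not an official list. The sketch also assumes the vm-auth-key value is the bootstrap-auth-key you supplied in the secret.

```python
# Keys this sketch treats as required; an assumption based on the sample
# init-cfg.txt above, not an official list.
INIT_CFG_KEYS = ("panorama-server", "tplname", "dgname", "vm-auth-key")


def check_init_cfg(text: str) -> list[str]:
    """Return problems found in the contents of an init-cfg.txt file."""
    cfg = {}
    for token in text.split():  # one key=value per line; split() also handles spaces
        key, sep, value = token.partition("=")
        if sep:
            cfg[key] = value
    problems = [f"{key} is missing or empty" for key in INIT_CFG_KEYS if not cfg.get(key)]
    if cfg.get("vm-auth-key", "").startswith("0"):
        problems.append("vm-auth-key starts with '0'; recreate the auth key on Panorama")
    return problems


sample = """type=static
cgname=CG
tplname=10_3_252_62-CNv2
dgname=10_3_252_62-CNv2
panorama-server=107.21.240.64
vm-auth-key=158251502922307
"""
print(check_init_cfg(sample) or "no problems found")
```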

To resolve, undeploy the MP, delete the PVCs and PVs, and redeploy the mp-configmap followed by the MP. If you deployed using the Helm chart, undeploy the Helm chart, delete the CN-Series PVCs/PVs, and then redeploy the Helm chart.

Panorama not showing MP as managed device

-

Make sure MP and DP are on the same sw version. “K8 logs on MP would throw error logs if they are not on the same version.

-

If mgmt-slot-crd and mgmt-slot-cr.yaml are deployed.

-

If this DP has established IPSec to any of the MPs (login to MP using root and check with cmd “ipsec status” .

-

AutoCommit and CommitAll should have passed on the MP this DP is connected to. If not, check on MP why CommitAll or AutoCommit has failed and fix accordingly. Refer to above step (MP fails connecting to Panorama or MP CommitAll failure) to resolve.

-

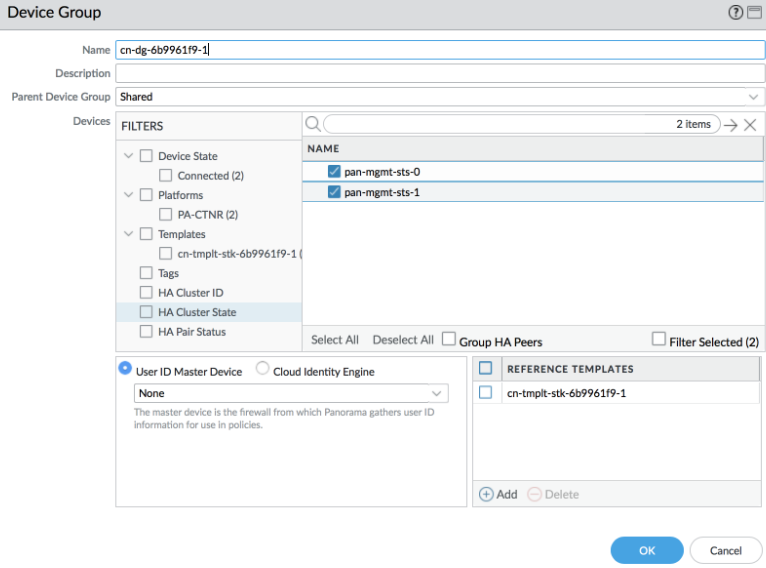

admin@pan-mgmt-sts-0> debug show internal interface all → should display interface config, if it does not, Make sure the template-stack is referenced in the DG. Also make sure K8S-Network-template has the interface configuration.

![]()

-

If DP have 4Gig Memory(check in ngfw.yaml)

-

Masterd all process are running by running “masterd all status” cmd.

-

“Ps -aef” check the process working.

-

Check output of the following command and verify if DP slot registration failures are seen:“kubectl get panslotconfigs -n kube-system --insecure-skip-tls-verify -o yaml”
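Memory values in ngfw.yaml are Kubernetes resource quantities (for example 4Gi or 4096Mi), so comparing them by eye is easy to get wrong. This is a minimal Python sketch that converts a subset of the quantity suffixes to bytes. It assumes the "4 GB" above means 4Gi in the DP container's memory request or limit. Note that 4G (decimal) is slightly less than 4Gi.

```python
import re

# Subset of Kubernetes resource-quantity suffixes (decimal and binary).
SUFFIXES = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
}
QUANTITY_RE = re.compile(r"^(\d+(?:\.\d+)?)([A-Za-z]*)$")


def quantity_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as "4Gi" or "4096Mi" to bytes."""
    match = QUANTITY_RE.match(quantity.strip())
    if not match or match.group(2) not in SUFFIXES:
        raise ValueError(f"unsupported memory quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(float(number) * SUFFIXES[suffix])


def has_min_memory(quantity: str, minimum: str = "4Gi") -> bool:
    """True if `quantity` is at least `minimum` (default assumes 4Gi)."""
    return quantity_to_bytes(quantity) >= quantity_to_bytes(minimum)


for value in ("4Gi", "4096Mi", "2Gi", "4G"):
    print(value, has_min_memory(value))
```

Suffixes outside this subset (for example milli-units or exponent notation) raise ValueError instead of being guessed.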

DP Slot registration failing

- Verify that the pan-cn-mgmt-slot-cr and pan-cn-mgmt-slot-crd YAMLs are deployed.
- For reference, the following shows the PanSlotConfig CR and CRD in a cluster, followed by DP logs when slot registration fails:

  [root@rk-cl3-master-1 native-2]# k8sys get PanSlotConfig
  NAME                   AGE
  pan-mgmt-svc-2-slots   13s
  pan-mgmt-svc-slots     11d
  [root@rk-cl3-master-1 native-2]#
  [root@rk-cl3-master-1 native-2]# k8sys get crd | grep pan
  NAME                                  CREATED AT
  panslotconfigs.paloaltonetworks.com   2022-11-10T04:18:13Z
  [root@rk-cl3-master-1 native-2]#

  11-21-2022 20:54:31.783302 UTC INFO: Masterd Started
  11-21-2022 20:54:40.050008 UTC INFO: IPSec up-client event with 169.254.202.2
  11-21-2022 20:54:40.121502 UTC INFO: Calling dp slot register script
  11-21-2022 20:54:40.323061 UTC WARNING: Readiness: Not Ready. Panorama config is not pushed. pan_task is not running.
  11-21-2022 20:54:40.486734 UTC INFO: Strongswan daemon is up. Trying to reach Management Plane..
  11-21-2022 20:54:41.623966 UTC INFO: Management Plane connectivity established.
  11-21-2022 20:54:42.700770 UTC ERROR: Registration/Re-registration failed: USER
  11-21-2022 20:54:42.818729 UTC WARNING: dp slot register failed. Retrying a few times
  11-21-2022 20:54:44.265372 UTC ERROR: Registration/Re-registration failed: USER
  11-21-2022 20:54:45.759982 UTC ERROR: Registration/Re-registration failed: USER
  11-21-2022 20:54:47.256744 UTC ERROR: Registration/Re-registration failed: USER
  11-21-2022 20:54:48.768491 UTC ERROR: Registration/Re-registration failed: USER
  11-21-2022 20:54:50.272969 UTC ERROR: Registration/Re-registration failed: USER
  11-21-2022 20:54:51.390138 UTC CRITICAL: Failed to register to MP. Shutting down DP
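When a DP restarts repeatedly, a short summary of its log helps separate connectivity problems from slot registration problems. This is a minimal Python sketch that counts the "Registration/Re-registration failed" lines, collects their reason codes (USER in the example above), and reports whether MP connectivity was reached and whether the DP shut down. The matched strings are copied from the log above and may differ in other releases.

```python
import re

FAIL_RE = re.compile(r"ERROR: Registration/Re-registration failed: (\S+)")


def summarize_slot_registration(dp_log: str) -> dict:
    """Count registration failures and detect the final shutdown in a DP log."""
    reasons = FAIL_RE.findall(dp_log)
    return {
        "failures": len(reasons),
        "reasons": sorted(set(reasons)),
        "mp_connectivity": "Management Plane connectivity established" in dp_log,
        "shut_down": "Failed to register to MP. Shutting down DP" in dp_log,
    }


sample = """11-21-2022 20:54:41.623966 UTC INFO: Management Plane connectivity established.
11-21-2022 20:54:42.700770 UTC ERROR: Registration/Re-registration failed: USER
11-21-2022 20:54:44.265372 UTC ERROR: Registration/Re-registration failed: USER
11-21-2022 20:54:51.390138 UTC CRITICAL: Failed to register to MP. Shutting down DP"""
print(summarize_slot_registration(sample))
```

In the example, MP connectivity is established before registration fails. This suggests that the slot registration step, rather than basic reachability, is where to look.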

MP/DP/CNI pods are not shown by "kubectl get pods -n kube-system"

- Check whether the service account was created. It may be missing because "sa.yaml" was not deployed.
- Verify that the MP service is running using "kubectl -n kube-system get svc".
- Verify that the MP StatefulSet is running using "kubectl -n kube-system get sts" and "kubectl -n kube-system describe sts/pan-mgmt-sts". The describe output shows any issues with the PVCs/PVs.

Traffic from secure app pods not sent through DP/Firewall

-

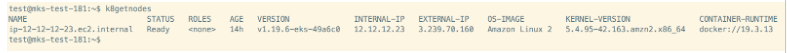

Verify if all the worker nodes are running min 5.4 Kernel version (using the cmd “kubectl get nodes -o wide)

![]()

-

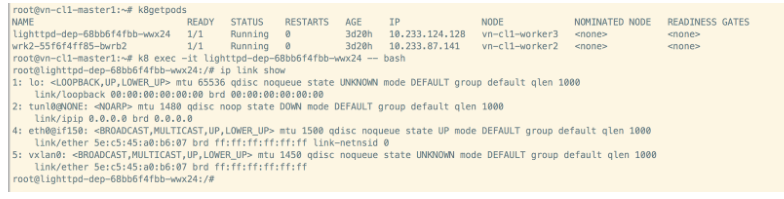

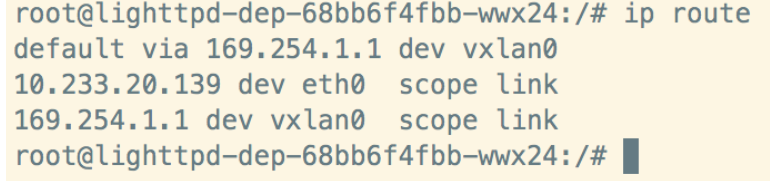

(for CNv2 only) Verify if ‘vxlan’ interface is created on the secure app pod and a default route is created though this vxlan interface

![]()

![]()

-

Verify if the DPs are running and are connected to MP using IPSec, If DPs are active/Running, but IPSec is terminated

-

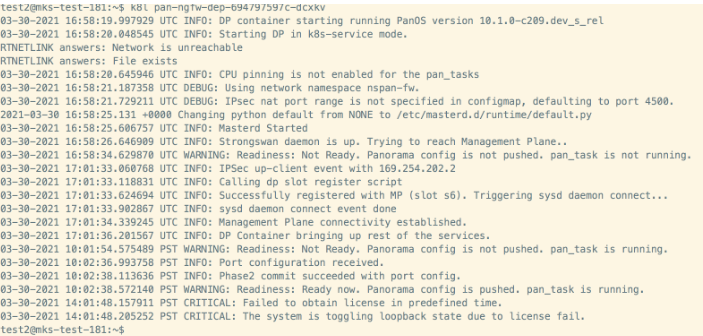

Verify if DP licensing failed after 4 hours of DP bringup because of no auth-code or Cluster not connected to panorama - k8 plugin

![]()

-

Verify if the auth-code on kubernets plugin in Panorama is not expiredadmin@Panorama> request plugins kubernetes get-license-tokensSecurity Subscriptions: Wildfire, Threat Prevention, DNS, URL FilteringAuthcode Type: SW-NGFW CreditsAuthcode: D2962989Expired: noExpiry Date: December 31 2022Issued vCPU: 50Used vCPU: 0Issue Date: December 31 2022admin@Panorama-49.88>

-

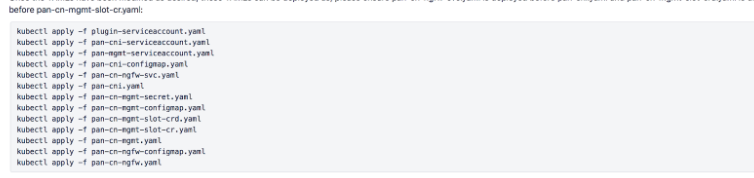

Verify if the ‘ngfw-svc.yaml' is deployed before CNI.yaml and NGFW svc has ClusterIP and is running.

![]()

-

Check CNI logs by logging into the node under /var/log/pan/pan-cni.log ([ec2-user@ip-12-12-12-184 ~]$ vi /var/log/pan-appinfo/pan-cni.log)

-

App pod should be created after CNI and ngfw svc are running - restart of pod can help

-

App pod should be created after CNI and ngfw svc are running - restart of pod can help

-

Check interface config is pushed to DP using command “debug show internal interface all“ on MP pod

-

“show rule-hit-count vsys vsys-name vsys1 rule-base security rules all” to see which security rule is being hit and modify security policy accordingly.
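The kernel-version check can be scripted across all nodes. This is a minimal Python sketch that reads "kubectl get nodes -o json" instead of the "-o wide" table, because the OS-IMAGE column in the table contains spaces and is awkward to split. It uses each node's status.nodeInfo.kernelVersion field. The node names and kernel strings in the sample are made up.

```python
import json
import re


def kernel_at_least(kernel_version: str, minimum: tuple[int, int] = (5, 4)) -> bool:
    """Compare the leading MAJOR.MINOR of a kernel string such as '5.4.0-1045-aws'."""
    match = re.match(r"(\d+)\.(\d+)", kernel_version)
    if not match:
        raise ValueError(f"cannot parse kernel version {kernel_version!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


def nodes_below_minimum(nodes_json: str, minimum: tuple[int, int] = (5, 4)) -> list[str]:
    """Return names of nodes in `kubectl get nodes -o json` output with an older kernel."""
    items = json.loads(nodes_json)["items"]
    return [
        item["metadata"]["name"]
        for item in items
        if not kernel_at_least(item["status"]["nodeInfo"]["kernelVersion"], minimum)
    ]


# Made-up nodes for illustration.
sample = json.dumps({"items": [
    {"metadata": {"name": "worker-1"}, "status": {"nodeInfo": {"kernelVersion": "5.4.0-1045-aws"}}},
    {"metadata": {"name": "worker-2"}, "status": {"nodeInfo": {"kernelVersion": "4.14.232-176.381.amzn2.x86_64"}}},
    {"metadata": {"name": "worker-3"}, "status": {"nodeInfo": {"kernelVersion": "5.10.0"}}},
]})
print(nodes_below_minimum(sample))
```

The versions are compared as integer tuples, not as strings, because a plain string comparison would wrongly rank "5.10" below "5.4".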

How to verify which DP pod is processing traffic

- kubectl -n kube-system get pods -l app=pan-ngfw -o wide
- kubectl -n kube-system describe pod <dp-pod-name> | grep "Container ID"
- In the Panorama Monitor logs, add the "Container ID" column. The container ID from the previous command appears there.
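To match DP pods to the Container ID column, you can pull the IDs out of the describe output. This is a minimal Python sketch. It returns the runtime prefix (such as containerd:// or docker://) separately from the ID, because this page does not state which form Panorama displays. The container name and ID in the sample are made up.

```python
import re

# "Container ID:" followed by an optional "<runtime>://" prefix and the ID.
# [ \t]+ (not \s+) so an empty field never swallows the next line.
CONTAINER_ID_RE = re.compile(r"Container ID:[ \t]+(?:(\w+)://)?(\S+)")


def container_ids(describe_output: str) -> list[tuple[str, str]]:
    """Return (runtime, container_id) pairs found in "kubectl describe pod" output."""
    return [(m.group(1) or "", m.group(2)) for m in CONTAINER_ID_RE.finditer(describe_output)]


sample = """Containers:
  example-container:
    Container ID:   containerd://4f2a9c1e7b
    Image:          example/image:tag
"""
print(container_ids(sample))
```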

Logging: Panorama not showing traffic/threat logs

Follow these troubleshooting steps:

- Verify whether logs are generated on the MP using "show log traffic direction equal backward" or "show log threat direction equal backward". If no logs are seen on the MP, verify whether the same MP is processing traffic using "show session all" while sending a continuous ping from the secure pod.
- Make sure the DP is licensed:
  - On the MP, run "request plugins vm_series list-dp-pods".
  - The DP Kubernetes logs should confirm this.
- "debug log-receiver statistics" shows the incoming log rate from the DP to the MP.
- Verify that the traffic is hitting the expected policy configured with log forwarding using "show session all" and "show session id <id>".
- Verify that the configuration is received on the MP using "show config pushed-shared-policy" and "show running security-policy".
- Make sure the managed collectors are in sync and in a connected state on Panorama.
- "masterd elasticsearch status" on Panorama should show Elasticsearch running. If it is not running, execute "es_restart.py -e".
- Additional checks on Panorama:

  [root@cnsmokepanorama ~]# sdb cfg.es.*
  cfg.es.acache-update: 1
  cfg.es.enable: 0x0

  es_cluster.sh health
  debug log-collector log-collection-stats show incoming-logs
  pan_logquery -t traffic -i bwd -n 50

Logging: Panorama not showing logs when filtering with rule-name

This issue can happen when Panorama fails to load the ES templates correctly. Try restarting ES with the command "es_restart.py -t" in Panorama root mode, then send new traffic/logs and verify that the logs are seen:

[root@sjc-bld-smk01-esx12-t4-pano-02 ~]# es_restart.py -t
====== /opt/pancfg/mgmt/factory/es/templates/urlsum.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/sctpsum.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/iptag.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/panflex0000100004.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/sctp.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/extpcap.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/system.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/wfr.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/gtpsum.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/panflex0000100006.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/decryption.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/thsum.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/globalprotect.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/hipmatch.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/desum.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/userid.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/panflex0000100007.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/trace.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/threat.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/auth.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/config.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/panflex0000100003.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/gtp.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/trsum.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/traffic.tpl ====
====== /opt/pancfg/mgmt/factory/es/templates/panflex0000100005.tpl ====
[root@sjc-bld-smk01-esx12-t4-pano-02 ~]#

MP fails re-connecting to panorama

pan_cfg_handle_mgt_reg(pan_cfg_mgt_handler.c:5105): This device or log collector or wf appliance (devid 892A1C93EF280D0) is not managed

This error message means the device is re-registering. Because it registered before, it does not go through the bootstrap workflow in which it adds itself to the Panorama configuration. This can happen when the device previously registered and connected, but no commit was done on Panorama to save that configuration. If Panorama then restarts or reboots, the configuration is cleared. When the device tries to connect again, Panorama does not recognize it, so the connection is severed.

The following logs are from configd.log on Panorama:

2021-02-03 11:48:00.436 -0800 Processing lcs-register message from device '8B1EB1ADC72B44E'
2021-02-03 11:48:00.436 -0800 Warning: _get_current_cert(sc3_utils.c:84): sdb node 'cfg.ms.ak' does not exist.
2021-02-03 11:48:04.425 -0800 logbuffer: no active connection to cms0
2021-02-03 11:48:24.425 -0800 logbuffer: no active connection to cms0
2021-02-03 11:48:44.425 -0800 logbuffer: no active connection to cms0
2021-02-03 11:48:57.751 -0800 Warning: sc3_register(sc3_register.c:90): SC3: Disabled - Ignoring register.
2021-02-03 11:48:57.752 -0800 Warning: pan_cfg_handle_mgt_reg(pan_cfg_mgt_handler.c:4645): SC3: device '892A1C93EF280D0' is not SC3 capable
2021-02-03 11:48:57.752 -0800 SVM registration. Serial:892A1C93EF280D0 DG: TPL: vm-mode:0 uuid:481a70f4-1647-426c-954a-a003ec60943f cpuid:K8SMP:A6D64F:8410079617204080581: svm_id:
2021-02-03 11:48:57.752 -0800 processing a register message from 892A1C93EF280D0
2021-02-03 11:48:57.752 -0800 Error: pan_cfg_handle_mgt_reg(pan_cfg_mgt_handler.c:5105): This device or log collector or wf appliance (devid 892A1C93EF280D0) is not managed
2021-02-03 11:49:04.426 -0800 logbuffer: no active connection to cms0
2021-02-03 11:49:06.015 -0800 Warning: sc3_register(sc3_register.c:90): SC3: Disabled - Ignoring register.
2021-02-03 11:49:06.015 -0800 Warning: pan_cfg_handle_mgt_reg(pan_cfg_mgt_handler.c:4645): SC3: device '8B1EB1ADC72B44E' is not SC3 capable
2021-02-03 11:49:06.015 -0800 SVM registration. Serial:8B1EB1ADC72B44E DG: TPL: vm-mode:0 uuid:731de362-59ed-45a0-9fdd-7e642626f187 cpuid:K8SMP:A6D64F:8410079617204080581: svm_id:
2021-02-03 11:49:06.015 -0800 processing a register message from 8B1EB1ADC72B44E
2021-02-03 11:49:06.015 -0800 Error: pan_cfg_handle_mgt_reg(pan_cfg_mgt_handler.c:5105): This device or log collector or wf app

MP and DP are active and running state, but IPsec is terminated between MP and DP

Check the MP kubectl logs to see whether the slots are freed after 4 hours, as below:

(parts) root@test-virtual-machine:~# k8sys logs pan-mgmt-sts-0
03-09-2021 01:07:36.508002 PST INFO: Management container starting running PanOS version 10.1.0-c182.dev_s_rel
Starting PAN Software:
03-09-2021 01:08:07.460287 PST WARNING: Readiness: Not Ready. slotd for Data Plane registration is not running. ipsec for Data Plane connections is not running.
Failed to execute cmd:dpdk-devbind --status
[ OK ]
03-09-2021 01:09:43.043467 PST INFO: Masterd Started
03-09-2021 01:10:53.453639 PST WARNING: Readiness: Ready now. slotd for Data Plane registration is running. ipsec for Data Plane connections is running.
03-09-2021 01:10:54.558525 PST INFO: Strongswan daemon is up.
03-09-2021 01:10:56.286104 PST INFO: SW version matches, both MP and DP software versions are 10.1.0-c182.dev_s_rel
03-09-2021 01:10:56.346162 PST INFO: Get registration with uid pan-ngfw-ds-4lhhc, sw_ver 10.1.0-c182.dev_s_rel, slot 0, dp_ip 169.254.202.2
03-09-2021 01:10:56.453298 PST INFO: Allocated slot 1 for uid pan-ngfw-ds-4lhhc 169.254.202.2
03-09-2021 01:10:57.131769 PST INFO: SW version matches, both MP and DP software versions are 10.1.0-c182.dev_s_rel
03-09-2021 01:10:57.198584 PST INFO: Get registration with uid pan-ngfw-ds-9pj2f, sw_ver 10.1.0-c182.dev_s_rel, slot 0, dp_ip 169.254.202.3
03-09-2021 01:10:57.288892 PST INFO: Allocated slot 2 for uid pan-ngfw-ds-9pj2f 169.254.202.3
03-09-2021 01:12:02.279032 PST INFO: Installing license AutoFocus.
03-09-2021 01:12:02.362417 PST INFO: Installing license LoggingServices.
03-09-2021 05:13:01.521227 PST INFO: SW version matches, both MP and DP software versions are 10.1.0-c182.dev_s_rel
03-09-2021 05:13:01.597810 PST INFO: Freeing slot 2, uid pan-ngfw-ds-9pj2f with Force
03-09-2021 05:13:01.694588 PST INFO: SW version matches, both MP and DP software versions are 10.1.0-c182.dev_s_rel
03-09-2021 05:13:01.764245 PST INFO: Freeing slot 1, uid pan-ngfw-ds-4lhhc with Force
03-09-2021 05:13:02.100376 PST INFO: IPSec got down-client event for 169.254.202.2
03-09-2021 05:13:02.707976 PST INFO: IPSec got down-client event for 169.254.202.3

ImagePullBackOff

Check the following:

- The image is not available in the repo, or the node doesn't have access to the repo.
- The error is "x509: certificate signed by unknown authority". If so, add or modify the file /etc/docker/daemon.json with the private repos:

  root@vn-cl1-master1:~# cat /etc/docker/daemon.json
  {"insecure-registries" : ["panos-cntr-engtools-releng.af.paloaltonetworks.local", "panos-cntr-engtools.af.paloaltonetworks.local", "docker-public.af.paloaltonetworks.local", "panos-cntr-engtools-releng.af.paloaltonetworks.local", "docker-qa-pavm.af.paloaltonetworks.local"]}
  root@vn-cl1-master1:~#

  Example pod events for this error:

  Events:
    Type     Reason            Age                From               Message
    ----     ------            ----               ----               -------
    Normal   Scheduled         64s                default-scheduler  Successfully assigned kube-system/pan-cni-4jbpl to qalab-virtual-machine
    Normal   Pulling           23s (x3 over 63s)  kubelet            Pulling image "docker-panos-ctnr.af.paloaltonetworks.local/pan-cni/develop/pan-cni-1.0.0:10_a26df862ed"
    Warning  Failed            23s (x3 over 63s)  kubelet            Failed to pull image "docker-panos-ctnr.af.paloaltonetworks.local/pan-cni/develop/pan-cni-1.0.0:10_a26df862ed": rpc error: code = Unknown desc = Error response from daemon: Get https://docker-panos-ctnr.af.paloaltonetworks.local/v2/: x509: certificate signed by unknown authority
    Warning  Failed            23s (x3 over 63s)  kubelet            Error: ErrImagePull
    Warning  DNSConfigForming  8s (x7 over 63s)   kubelet            Nameserver limits were exceeded, some nameservers have been omitted, the applied nameserver line is: 8.8.8.8 8.8.4.4 2620:130:800a:14::53
    Normal   BackOff           8s (x3 over 63s)   kubelet            Back-off pulling image "docker-panos-ctnr.af.paloaltonetworks.local/pan-cni/develop/pan-cni-1.0.0:10_a26df862ed"
    Warning  Failed            8s (x3 over 63s)   kubelet            Error: ImagePullBackOff
  qalab@master-node:~/cnv2/Kubernetes/pan-cn-k8s-service/native$
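To see at a glance which registry needs to be added to daemon.json, you can extract the failing image and its registry host from the pod events. This is a minimal Python sketch. It assumes the image reference starts with a registry host, as in the example above. An image without one (for example just "nginx") would be reported with its repository name as the "registry".

```python
import re

FAILED_PULL_RE = re.compile(r'Failed to pull image "([^"]+)": (.*)')


def failed_pulls(events_text: str) -> list[dict]:
    """Extract image, registry host, and x509 flag from "Failed to pull image" events."""
    results = []
    for match in FAILED_PULL_RE.finditer(events_text):
        image, reason = match.group(1), match.group(2)
        results.append({
            "image": image,
            "registry": image.split("/", 1)[0],  # first path component of the image
            "x509": "x509: certificate signed by unknown authority" in reason,
        })
    return results


event = (
    'Warning Failed 23s (x3 over 63s) kubelet Failed to pull image '
    '"docker-panos-ctnr.af.paloaltonetworks.local/pan-cni/develop/pan-cni-1.0.0:10_a26df862ed": '
    "rpc error: code = Unknown desc = Error response from daemon: Get "
    "https://docker-panos-ctnr.af.paloaltonetworks.local/v2/: x509: certificate signed by unknown authority"
)
for pull in failed_pulls(event):
    print(pull["registry"], "x509" if pull["x509"] else "other error")
```

If "x509" is reported, the registry printed is the entry to add to the insecure-registries list.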

Log in to the DP from the worker node in the nspan-fw namespace

Navigate to the /var/log/pan-appinfo directory, run cat pan-cmdmap, and copy the nsenter command for your DP from the output to log in to the DP in the nspan-fw namespace:

root@pv-k8-vm-worker-2:/var/log/pan-appinfo# cat pan-cmdmap
02-07-2022 17:39:54.079133 PST : kube-system/pan-ngfw-ds-ql4q9: '/usr/bin/nsenter -t 15872 -m -p --ipc -u --net=/var/run/netns/nspan-fw -- /bin/bash'
02-07-2022 17:43:08.976154 PST : kube-system/pan-ngfw-ds-zbt54: '/usr/bin/nsenter -t 28308 -m -p --ipc -u --net=/var/run/netns/nspan-fw -- /bin/bash'
root@pv-k8-vm-worker-2:/var/log/pan-appinfo#
root@pv-k8-vm-worker-2:/var/log/pan-appinfo# /usr/bin/nsenter -t 28308 -m -p --ipc -u --net=/var/run/netns/nspan-fw -- /bin/bash
[root@pan-ngfw-ds-zbt54 /]#

You are now logged in to the DP and can run "masterd all status" from here.

DP pods remain in ContainerCreating status with below kubectl logs

MountVolume.SetUp failed for volume "pan-cni-ready" : hostPath type check failed: /var/log/pan-appinfo/pan-cni-ready is not a directory

Events:
  Type     Reason       Age                From               Message
  ----     ------       ----               ----               -------
  Normal   Scheduled    98s                default-scheduler  Successfully assigned kube-system/pan-ngfw-dep-7569f69d8-j4hfp to ctnr-debug-worker-3
  Warning  FailedMount  34s (x8 over 98s)  kubelet            MountVolume.SetUp failed for volume "pan-cni-ready" : hostPath type check failed: /var/log/pan-appinfo/pan-cni-ready is not a directory
test@msatane-182:~/scripts$

Solution:

- Check the pan-cni pod's kubectl log. Make sure the correct YAML (Multus or non-Multus) is deployed.
- If Multus is deployed, undeploy Multus and delete 00-multus.conf from the /etc/cni/net.d/ directory, then undeploy and redeploy the CNI and DP.

The following pan-cni logs show that Multus is detected, so the above steps should be followed:

test@msatane-182:~/results/job_vm_series_72342/cn-sanity/cntr_deploy/kube-system$ k8l pan-cni-csqt4
09-29-2021 04:07:22.495812 UTC DEBUG: Passed CNI_CONF_NAME=
09-29-2021 04:07:22.498585 UTC DEBUG: Using CNI config template from CNI_NETWORK_CONFIG environment variable.
09-29-2021 04:07:22.633416 UTC DEBUG: Removing existing binaries
09-29-2021 04:07:22.731559 UTC DEBUG: Wrote PAN CNI binaries to /host/opt/cni/bin
09-29-2021 04:07:22.734940 UTC DEBUG: /host/secondary-bin-dir is non-writeable, skipping
09-29-2021 04:07:22.752094 UTC DEBUG: PAN CNI config: { "name": "pan-cni", "type": "pan-cni", "log_level": "debug", "appinfo_dir": "/var/log/pan-appinfo", "mode": "service", "dpservicename": "pan-ngfw-svc", "dpservicenamespace": "kube-system", "firewall": [ "pan-fw" ], "interfaces": [ "eth0" ], "interfacesip": [ "" ], "interfacesmac": [ "" ], "override_mtu": "", "kubernetes": { "kubeconfig": "/etc/cni/net.d/ZZZ-pan-cni-kubeconfig", "cni_bin_dir": "/opt/cni/bin", "exclude_namespaces": [], "security_namespaces": [ "kube-system" ] }}
09-29-2021 04:07:22.756725 UTC DEBUG: CNI running in FW Service mode. Bypassfirewall can be enabled on application pods
09-29-2021 04:07:22.796082 UTC CRITICAL: Detected Multus as primary CNI (CONF file 00-multus.conf); waiting for non-multus CNI to become primary CNI.
test@msatane-182:~/results/job_vm_series_72342/cn-sanity/cntr_deploy/kube-system$

Link status on App pod secured by CNv2 (using vxlan)

root@testapp-secure-deployment-86f9f95b5-q5nxt:/# ip link show
lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN mode DEFAULT group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
eth0@if227: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP mode DEFAULT group default qlen 1000
    link/ether 26:54:8f:43:44:3f brd ff:ff:ff:ff:ff:ff link-netnsid 0
vxlan0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1430 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/ether 26:54:8f:43:44:3f brd ff:ff:ff:ff:ff:ff
root@testapp-secure-deployment-86f9f95b5-q5nxt:/#

Capture Techsupport from MP

On the MP:

admin@pan-mgmt-sts-0> request tech-support dump
Exec job enqueued with jobid 4
4
admin@pan-mgmt-sts-0> show jobs id 4
Enqueued Dequeued ID Type Status Result Completed
------------------------------------------------------------------------------------------------------------------------------
2022/02/15 12:46:50 12:46:51 4 Exec FIN OK 12:47:36

Log in to the MP as root to find the name of the TSF, which is stored in /opt/pancfg/tmp/techsupport:

praveena@praveena:~$ kubectl -n kube-system exec -it pan-mgmt-sts-0 -- bash
Defaulted container "pan-mgmt" out of: pan-mgmt, pan-mgmt-init (init)
[root@pan-mgmt-sts-0 /]#
[root@pan-mgmt-sts-0 techsupport]# pwd
/opt/pancfg/tmp/techsupport
[root@pan-mgmt-sts-0 techsupport]# ls
PA_878C48E8DDCFA5B_ts_102.0-c55_20220215_1246.tar.gz

Copy the TSF from the MP to the local controller using:

praveena@praveena:~$ kubectl -n kube-system cp pan-mgmt-sts-0:/opt/pancfg/tmp/techsupport/PA_878C48E8DDCFA5B_ts_102.0-c55_20220215_1246.tar.gz PA_878C48E8DDCFA5B_ts_102.0-c55_20220215_1246.tar.gz
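Once the tech-support job shows FIN, the lookup and copy steps can be combined into a small shell sketch. The namespace, pod, container and directory are taken from the example above; the variable names TSF_NS and TSF_POD and the helper functions are hypothetical, and the TSF name pattern (..._ts_....tar.gz) is assumed from the single sample file name shown.

```shell
# Sketch: locate and copy the newest tech support file (TSF) from the MP pod.
# TSF_NS / TSF_POD are hypothetical, overridable variables.
TSF_NS="${TSF_NS:-kube-system}"
TSF_POD="${TSF_POD:-pan-mgmt-sts-0}"
TSF_DIR="/opt/pancfg/tmp/techsupport"

# Reads `ls -t` output (newest first) on stdin and prints the first name that
# matches the TSF naming seen above (assumed pattern: ..._ts_....tar.gz).
pick_latest_tsf() {
  grep -E '_ts_.*\.tar\.gz$' | head -n 1
}

# Prints the newest TSF name found on the MP pod (container pan-mgmt).
latest_tsf() {
  kubectl -n "$TSF_NS" exec "$TSF_POD" -c pan-mgmt -- ls -t "$TSF_DIR" | pick_latest_tsf
}

# Copies the named TSF ($1) from the MP pod into the current local directory.
copy_tsf() {
  kubectl -n "$TSF_NS" cp -c pan-mgmt "$TSF_POD:$TSF_DIR/$1" "./$1"
}
```

For example: tsf="$(latest_tsf)" && copy_tsf "$tsf". Note that kubectl cp relies on tar being present in the container.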

HPA not working

-

Verify the HPA with “kubectl -n kube-system get hpa” and “kubectl -n kube-system describe hpa”.

-

Check the monitoring tool (AWS CloudWatch, GCP Stackdriver, or Azure Application Insights) to verify that the custom metrics are visible.

-

If the custom metrics are not visible in the monitoring tool, check /var/log/pan/pan_vm_plugin.log for errors. On EKS, also confirm that the CloudWatch adapter pod in the custom-metrics namespace is running, and check its logs:

test2@mks-test-181:~/cnv2/yaml-files/CNSeries_V2/eks/HPA$ k8 get pods -n custom-metrics
NAME READY STATUS RESTARTS AGE
k8s-cloudwatch-adapter-6647595dfd-qhbtd 1/1 Running 0 42m
test2@mks-test-181:~/cnv2/yaml-files/CNSeries_V2/eks/HPA$ k8 logs k8s-cloudwatch-adapter-6647595dfd-qhbtd -n custom-metrics
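The HPA checks above can be grouped into a shell sketch. The helper names are hypothetical. check_plugin_log assumes pan_vm_plugin.log lives on the MP pod pan-mgmt-sts-0 (container pan-mgmt), and the adapter deployment name k8s-cloudwatch-adapter is inferred from the pod name in the sample output. check_metrics_apis uses kubectl get apiservices to confirm that a custom or external metrics API is registered, because the HPA cannot read custom metrics without one.

```shell
# Sketch only: helper names are hypothetical.

# HPA status and recent events (namespace as used in this section).
check_hpa() {
  kubectl -n kube-system get hpa
  kubectl -n kube-system describe hpa
}

# Shows whether a custom/external metrics API is registered and Available.
check_metrics_apis() {
  kubectl get apiservices | grep -E 'custom\.metrics|external\.metrics'
}

# Assumption: the plugin log is on the MP pod pan-mgmt-sts-0, container pan-mgmt.
check_plugin_log() {
  kubectl -n kube-system exec pan-mgmt-sts-0 -c pan-mgmt -- tail -n 100 /var/log/pan/pan_vm_plugin.log
}

# CloudWatch adapter pods and logs; deployment name inferred from the pod name.
check_cloudwatch_adapter() {
  kubectl -n custom-metrics get pods
  kubectl -n custom-metrics logs deployment/k8s-cloudwatch-adapter
}

# Reads `kubectl get pods` output on stdin; prints pods whose STATUS is not Running.
not_running_pods() {
  awk 'NR > 1 && $3 != "Running" { print $1 }'
}
```

For example: kubectl -n custom-metrics get pods | not_running_pods prints nothing when the adapter is healthy.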

How to control inbound access to apps on OpenShift?

To control inbound access to applications:

Have the customer enable protection for the HAProxy router by adding the annotation to its YAML files. This ensures that all traffic entering and leaving HAProxy passes through the CN-Series firewall.

-

Have them use custom URL-based rules (on the destination) together with source IPs to control who can access a given application. Custom URLs (for example, osecluster/payments) must be defined for the application endpoints. This lets them allow or deny access to these applications without having to account for NAT.

-

If they use an external load balancer (for example, F5) in front of the OpenShift (OSE) cluster, they can use the X-Forwarded-For (XFF) header to control more granularly who can access a given application.
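A minimal sketch of adding the annotation to the router's pod template is shown below. It assumes the annotation is paloaltonetworks.com/firewall with the value pan-fw (the firewall service name that appears in the pan-cni config earlier); confirm the exact key and value against the YAML files for your release. The router namespace and deployment name are inputs you supply, and the helper names are hypothetical. Patching the pod template rolls out new router pods, which is assumed to be when the CNI applies the annotation. On OpenShift the default router is managed by the Ingress Operator, which may revert direct edits, so use your platform's supported way of setting router pod annotations where one exists.

```shell
# Sketch under assumptions: annotation key/value and helper names are not
# confirmed by this section; verify them for your CN-Series release.

# Prints a JSON merge patch that adds the firewall annotation to the pod template.
fw_annotation_patch() {
  printf '%s\n' '{"spec":{"template":{"metadata":{"annotations":{"paloaltonetworks.com/firewall":"pan-fw"}}}}}'
}

# $1 = router namespace, $2 = router deployment name (both supplied by you).
annotate_router() {
  kubectl -n "$1" patch deployment "$2" --type merge -p "$(fw_annotation_patch)"
}
```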

Undeploy CN-Series

Run the following commands:

kubectl delete -f pan-cn-mgmt.yaml

-

kubectl delete -f pan-cn-mgmt-configmap.yaml

-

kubectl delete -f pan-cn-mgmt-secret.yaml

-

Delete the PVCs: kubectl -n kube-system delete pvc -l appname=pan-mgmt-sts

-

kubectl delete -f . undeploys all objects defined in the YAML files in the current directory. Caution: this tears down everything deployed from that directory.
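The targeted MP undeploy steps above can be wrapped in one shell function, sketched below. Run it from the directory that holds the YAML files. The function name is hypothetical, and --ignore-not-found is an addition so that rerunning it does not fail on objects that are already gone.

```shell
# Sketch: the MP undeploy steps above, in the same order.
undeploy_mp() {
  local f
  for f in pan-cn-mgmt.yaml pan-cn-mgmt-configmap.yaml pan-cn-mgmt-secret.yaml; do
    kubectl delete -f "$f" --ignore-not-found
  done
  # PVCs created from a StatefulSet's volumeClaimTemplates are not deleted
  # with the StatefulSet by default, so remove them by label.
  kubectl -n kube-system delete pvc -l appname=pan-mgmt-sts
}
```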

Enable packet-diag on CN-Series

-

Exec into the MP pod that manages the DP pod inspecting the traffic from the app pod.

-

Execute the following commands:
> debug dataplane packet-diag set filter match source <src> destination <> …
> debug dataplane packet-diag show setting (verifies the filter)
> debug dataplane packet-diag set capture on
> debug dataplane packet-diag set capture off (run this after the packets are captured)
After the packets are captured, find the file under /opt/panlogs/session/pan/filters/.
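Copying the capture files to your workstation can be sketched as follows. This section does not say which pod holds /opt/panlogs/session/pan/filters/, so the pod is a parameter. The helper names are hypothetical, the file name in the usage example is only illustrative, and kubectl cp requires tar inside the container.

```shell
# Sketch only: helper names are hypothetical; the pod holding the files is your input.
PCAP_DIR="/opt/panlogs/session/pan/filters"

# $1 = pod that holds the capture files; lists them.
list_pcaps() {
  kubectl -n kube-system exec "$1" -- ls -l "$PCAP_DIR/"
}

# $1 = pod, $2 = file name under PCAP_DIR; copies it to the current directory.
fetch_pcap() {
  kubectl -n kube-system cp "$1:$PCAP_DIR/$2" "./$2"
}
```

For example: list_pcaps <pod>, then fetch_pcap <pod> <file-name>.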

IPSec between MP and DP fails with an asymmetric setup error

This can happen in the following scenarios:

MP and DP are running different PAN-OS versions.

-

“pan-cn-mgmt-slot-crd.yaml” and “pan-cn-mgmt-slot-cr.yaml” are not deployed. In this case, the MP IPSec log shows the IKE_SAs being established and then deleted with "unable to reestablish IKE_SA due to asymmetric setup":

2022-08-22 -0700 11:46:35.208 16[NET] received packet: from 10.233.110.10[4500] to 10.233.96.7[4500] (464 bytes)
2022-08-22 -0700 11:46:35.208 16[ENC] parsed IKE_SA_INIT request 0 [ SA KE No N(NATD_S_IP) N(NATD_D_IP) N(FRAG_SUP) N(HASH_ALG) N(REDIR_SUP) ]
2022-08-22 -0700 11:46:35.208 16[IKE] 10.233.110.10 is initiating an IKE_SA
2022-08-22 -0700 11:46:35.208 16[CFG] selected proposal: IKE:AES_CBC_256/HMAC_SHA2_256_128/PRF_HMAC_SHA2_256/MODP_2048
2022-08-22 -0700 11:46:35.229 16[IKE] local host is behind NAT, sending keep alives
2022-08-22 -0700 11:46:35.229 16[IKE] remote host is behind NAT
2022-08-22 -0700 11:46:35.229 16[IKE] sending cert request for "CN=kubernetes"
2022-08-22 -0700 11:46:35.229 16[ENC] generating IKE_SA_INIT response 0 [ SA KE No N(NATD_S_IP) N(NATD_D_IP) CERTREQ N(FRAG_SUP) N(HASH_ALG) N(CHDLESS_SUP) ]
2022-08-22 -0700 11:46:35.229 16[NET] sending packet: from 10.233.96.7[4500] to 10.233.110.10[4500] (489 bytes)
2022-08-22 -0700 11:46:35.274 11[NET] received packet: from 10.233.110.10[4500] to 10.233.96.7[4500] (1236 bytes)
2022-08-22 -0700 11:46:35.274 11[ENC] parsed IKE_AUTH request 1 [ EF(1/2) ]
2022-08-22 -0700 11:46:35.274 11[ENC] received fragment #1 of 2, waiting for complete IKE message
2022-08-22 -0700 11:46:35.274 12[NET] received packet: from 10.233.110.10[4500] to 10.233.96.7[4500] (308 bytes)
2022-08-22 -0700 11:46:35.274 12[ENC] parsed IKE_AUTH request 1 [ EF(2/2) ]
2022-08-22 -0700 11:46:35.274 12[ENC] received fragment #2 of 2, reassembled fragmented IKE message (1456 bytes)
2022-08-22 -0700 11:46:35.274 12[ENC] parsed IKE_AUTH request 1 [ IDi CERT N(INIT_CONTACT) CERTREQ IDr AUTH CPRQ(ADDR DNS) SA TSi TSr N(EAP_ONLY) N(MSG_ID_SYN_SUP) ]
2022-08-22 -0700 11:46:35.274 12[IKE] received cert request for "CN=kubernetes"
2022-08-22 -0700 11:46:35.274 12[IKE] received end entity cert "CN=pan-fw.kube-system.svc"
2022-08-22 -0700 11:46:35.274 12[CFG] looking for peer configs matching 10.233.96.7[CN=pan-mgmt-svc.kube-system.svc]...10.233.110.10[CN=pan-fw.kube-system.svc]
2022-08-22 -0700 11:46:35.274 12[CFG] selected peer config 'to-mp'
2022-08-22 -0700 11:46:35.274 12[CFG] using certificate "CN=pan-fw.kube-system.svc"
2022-08-22 -0700 11:46:35.275 12[CFG] using trusted ca certificate "CN=kubernetes"
2022-08-22 -0700 11:46:35.275 12[CFG] checking certificate status of "CN=pan-fw.kube-system.svc"
2022-08-22 -0700 11:46:35.275 12[CFG] certificate status is not available
2022-08-22 -0700 11:46:35.275 12[CFG] reached self-signed root ca with a path length of 0
2022-08-22 -0700 11:46:35.275 12[IKE] authentication of 'CN=pan-fw.kube-system.svc' with RSA_EMSA_PKCS1_SHA2_256 successful
2022-08-22 -0700 11:46:35.279 12[IKE] authentication of 'CN=pan-mgmt-svc.kube-system.svc' (myself) with RSA_EMSA_PKCS1_SHA2_256 successful
2022-08-22 -0700 11:46:35.279 12[IKE] IKE_SA to-mp[2] established between 10.233.96.7[CN=pan-mgmt-svc.kube-system.svc]...10.233.110.10[CN=pan-fw.kube-system.svc]
2022-08-22 -0700 11:46:35.279 12[IKE] sending end entity cert "CN=pan-mgmt-svc.kube-system.svc"
2022-08-22 -0700 11:46:35.279 12[IKE] peer requested virtual IP %any
2022-08-22 -0700 11:46:35.279 12[CFG] assigning new lease to 'CN=pan-fw.kube-system.svc'
2022-08-22 -0700 11:46:35.279 12[IKE] assigning virtual IP 169.254.202.2 to peer 'CN=pan-fw.kube-system.svc'
2022-08-22 -0700 11:46:35.279 12[CFG] selected proposal: ESP:AES_GCM_8_128/NO_EXT_SEQ
2022-08-22 -0700 11:46:35.279 12[IKE] CHILD_SA to-mp{1} established with SPIs 6d779dbe_i 0a178b55_o and TS 0.0.0.0/0 === 169.254.202.2/32
2022-08-22 -0700 11:46:35.290 12[ENC] generating IKE_AUTH response 1 [ IDr CERT AUTH CPRP(ADDR) SA TSi TSr ]
2022-08-22 -0700 11:46:35.290 12[ENC] splitting IKE message (1392 bytes) into 2 fragments
2022-08-22 -0700 11:46:35.290 12[ENC] generating IKE_AUTH response 1 [ EF(1/2) ]
2022-08-22 -0700 11:46:35.290 12[ENC] generating IKE_AUTH response 1 [ EF(2/2) ]
2022-08-22 -0700 11:46:35.291 12[NET] sending packet: from 10.233.96.7[4500] to 10.233.110.10[4500] (1236 bytes)
2022-08-22 -0700 11:46:35.291 12[NET] sending packet: from 10.233.96.7[4500] to 10.233.110.10[4500] (228 bytes)
2022-08-22 -0700 11:46:35.345 09[NET] received packet: from 10.233.96.9[4500] to 10.233.96.7[4500] (464 bytes)
2022-08-22 -0700 11:46:35.346 09[ENC] parsed IKE_SA_INIT request 0 [ SA KE No N(NATD_S_IP) N(NATD_D_IP) N(FRAG_SUP) N(HASH_ALG) N(REDIR_SUP) ]
2022-08-22 -0700 11:46:35.346 09[IKE] 10.233.96.9 is initiating an IKE_SA
2022-08-22 -0700 11:46:35.346 09[CFG] selected proposal: IKE:AES_CBC_256/HMAC_SHA2_256_128/PRF_HMAC_SHA2_256/MODP_2048
2022-08-22 -0700 11:46:35.356 09[IKE] local host is behind NAT, sending keep alives
2022-08-22 -0700 11:46:35.356 09[IKE] remote host is behind NAT
2022-08-22 -0700 11:46:35.356 09[IKE] sending cert request for "CN=kubernetes"
2022-08-22 -0700 11:46:35.356 09[ENC] generating IKE_SA_INIT response 0 [ SA KE No N(NATD_S_IP) N(NATD_D_IP) CERTREQ N(FRAG_SUP) N(HASH_ALG) N(CHDLESS_SUP) ]
2022-08-22 -0700 11:46:35.356 09[NET] sending packet: from 10.233.96.7[4500] to 10.233.96.9[4500] (489 bytes)
2022-08-22 -0700 11:46:35.363 10[NET] received packet: from 10.233.96.9[4500] to 10.233.96.7[4500] (1236 bytes)
2022-08-22 -0700 11:46:35.364 10[ENC] parsed IKE_AUTH request 1 [ EF(1/2) ]
2022-08-22 -0700 11:46:35.364 10[ENC] received fragment #1 of 2, waiting for complete IKE message
2022-08-22 -0700 11:46:35.364 15[NET] received packet: from 10.233.96.9[4500] to 10.233.96.7[4500] (308 bytes)
2022-08-22 -0700 11:46:35.364 15[ENC] parsed IKE_AUTH request 1 [ EF(2/2) ]
2022-08-22 -0700 11:46:35.364 15[ENC] received fragment #2 of 2, reassembled fragmented IKE message (1456 bytes)
2022-08-22 -0700 11:46:35.364 15[ENC] parsed IKE_AUTH request 1 [ IDi CERT N(INIT_CONTACT) CERTREQ IDr AUTH CPRQ(ADDR DNS) SA TSi TSr N(EAP_ONLY) N(MSG_ID_SYN_SUP) ]
2022-08-22 -0700 11:46:35.364 15[IKE] received cert request for "CN=kubernetes"
2022-08-22 -0700 11:46:35.364 15[IKE] received end entity cert "CN=pan-fw.kube-system.svc"
2022-08-22 -0700 11:46:35.364 15[CFG] looking for peer configs matching 10.233.96.7[CN=pan-mgmt-svc.kube-system.svc]...10.233.96.9[CN=pan-fw.kube-system.svc]
2022-08-22 -0700 11:46:35.364 15[CFG] selected peer config 'to-mp'
2022-08-22 -0700 11:46:35.364 15[CFG] using certificate "CN=pan-fw.kube-system.svc"
2022-08-22 -0700 11:46:35.364 15[CFG] using trusted ca certificate "CN=kubernetes"
2022-08-22 -0700 11:46:35.364 15[CFG] checking certificate status of "CN=pan-fw.kube-system.svc"
2022-08-22 -0700 11:46:35.364 15[CFG] certificate status is not available
2022-08-22 -0700 11:46:35.364 15[CFG] reached self-signed root ca with a path length of 0
2022-08-22 -0700 11:46:35.364 15[IKE] authentication of 'CN=pan-fw.kube-system.svc' with RSA_EMSA_PKCS1_SHA2_256 successful
2022-08-22 -0700 11:46:35.366 15[IKE] authentication of 'CN=pan-mgmt-svc.kube-system.svc' (myself) with RSA_EMSA_PKCS1_SHA2_256 successful
2022-08-22 -0700 11:46:35.366 15[IKE] IKE_SA to-mp[3] established between 10.233.96.7[CN=pan-mgmt-svc.kube-system.svc]...10.233.96.9[CN=pan-fw.kube-system.svc]
2022-08-22 -0700 11:46:35.366 15[IKE] sending end entity cert "CN=pan-mgmt-svc.kube-system.svc"
2022-08-22 -0700 11:46:35.366 15[IKE] peer requested virtual IP %any
2022-08-22 -0700 11:46:35.366 15[CFG] assigning new lease to 'CN=pan-fw.kube-system.svc'
2022-08-22 -0700 11:46:35.366 15[IKE] assigning virtual IP 169.254.202.3 to peer 'CN=pan-fw.kube-system.svc'
2022-08-22 -0700 11:46:35.366 15[CFG] selected proposal: ESP:AES_GCM_8_128/NO_EXT_SEQ
2022-08-22 -0700 11:46:35.366 15[IKE] CHILD_SA to-mp{2} established with SPIs a97528ab_i f6667584_o and TS 0.0.0.0/0 === 169.254.202.3/32
2022-08-22 -0700 11:46:35.372 15[CHD] updown: SIOCADDRT: File exists
2022-08-22 -0700 11:46:35.373 15[ENC] generating IKE_AUTH response 1 [ IDr CERT AUTH CPRP(ADDR) SA TSi TSr ]
2022-08-22 -0700 11:46:35.373 15[ENC] splitting IKE message (1392 bytes) into 2 fragments
2022-08-22 -0700 11:46:35.373 15[ENC] generating IKE_AUTH response 1 [ EF(1/2) ]
2022-08-22 -0700 11:46:35.373 15[ENC] generating IKE_AUTH response 1 [ EF(2/2) ]
2022-08-22 -0700 11:46:35.373 15[NET] sending packet: from 10.233.96.7[4500] to 10.233.96.9[4500] (1236 bytes)
2022-08-22 -0700 11:46:35.373 15[NET] sending packet: from 10.233.96.7[4500] to 10.233.96.9[4500] (228 bytes)
2022-08-22 -0700 11:46:46.471 11[NET] received packet: from 10.233.96.9[4500] to 10.233.96.7[4500] (80 bytes)
2022-08-22 -0700 11:46:46.471 11[ENC] parsed INFORMATIONAL request 2 [ D ]
2022-08-22 -0700 11:46:46.471 11[IKE] received DELETE for IKE_SA to-mp[3]
2022-08-22 -0700 11:46:46.471 11[IKE] deleting IKE_SA to-mp[3] between 10.233.96.7[CN=pan-mgmt-svc.kube-system.svc]...10.233.96.9[CN=pan-fw.kube-system.svc]
2022-08-22 -0700 11:46:46.471 11[IKE] unable to reestablish IKE_SA due to asymmetric setup
2022-08-22 -0700 11:46:46.471 11[IKE] IKE_SA deleted
2022-08-22 -0700 11:46:46.751 11[ENC] generating INFORMATIONAL response 2 [ ]
2022-08-22 -0700 11:46:46.751 11[NET] sending packet: from 10.233.96.7[4500] to 10.233.96.9[4500] (80 bytes)
2022-08-22 -0700 11:46:46.752 11[CFG] lease 169.254.202.3 by 'CN=pan-fw.kube-system.svc' went offline
2022-08-22 -0700 11:46:47.040 12[NET] received packet: from 10.233.110.10[4500] to 10.233.96.7[4500] (80 bytes)
2022-08-22 -0700 11:46:47.040 12[ENC] parsed INFORMATIONAL request 2 [ D ]
2022-08-22 -0700 11:46:47.040 12[IKE] received DELETE for IKE_SA to-mp[2]
2022-08-22 -0700 11:46:47.040 12[IKE] deleting IKE_SA to-mp[2] between 10.233.96.7[CN=pan-mgmt-svc.kube-system.svc]...10.233.110.10[CN=pan-fw.kube-system.svc]
2022-08-22 -0700 11:46:47.040 12[IKE] unable to reestablish IKE_SA due to asymmetric setup
2022-08-22 -0700 11:46:47.041 12[IKE] IKE_SA deleted
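Both causes can be checked from the cluster with a shell sketch like the one below. It assumes the MP and DP images are tagged with their PAN-OS version, so comparing tags is only a heuristic. The helper names are hypothetical, and check_slot_manifests expects the two slot manifests in the current directory; kubectl get -f fails if any object defined in a file is missing.

```shell
# Sketch under assumptions: image tags carry the PAN-OS version; helper names are hypothetical.

# Prints the tag of an image reference, or "latest" when no tag is present.
image_tag() {
  local ref="${1%%@*}"      # drop an optional @sha256:... digest
  local last="${ref##*/}"   # last path component, e.g. name:tag
  case "$last" in
    *:*) printf '%s\n' "${last##*:}" ;;
    *)   printf 'latest\n' ;;
  esac
}

# Succeeds when the MP image ($1) and DP image ($2) carry the same tag.
same_version() {
  [ "$(image_tag "$1")" = "$(image_tag "$2")" ]
}

# Lists kube-system pods with their container images, to read off the MP and DP images.
list_pod_images() {
  kubectl -n kube-system get pods -o custom-columns='NAME:.metadata.name,IMAGES:.spec.containers[*].image'
}

# Succeeds only if the objects defined in both slot manifests exist in the cluster.
check_slot_manifests() {
  kubectl get -f pan-cn-mgmt-slot-crd.yaml && kubectl get -f pan-cn-mgmt-slot-cr.yaml
}
```

For example, pass the MP and DP image references shown by list_pod_images to same_version; a non-zero exit status suggests a version mismatch.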

App pods fail DNS resolution (whether or not they are secured by the firewall)

Verify the following:

Check that the DNS pods are running: “kubectl get pods --namespace=kube-system -l k8s-app=kube-dns”

-

Check that the DNS service is running: “kubectl get svc --namespace=kube-system”

-

If the DNS service is not running, expose the DNS deployment as a Service, or deploy the Service from its YAML file.

-

Once the Service is deployed, verify that its endpoints are exposed correctly: “kubectl get endpoints coredns --namespace=kube-system”

-

Redeploy app pods.
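The DNS checks above, plus an in-cluster lookup, can be sketched in shell as follows. The helper names and the throwaway pod name dns-test are hypothetical. busybox:1.28 is the image older Kubernetes DNS-debugging guides used for nslookup; any image that includes nslookup should work. On clusters where the DNS Service is named kube-dns rather than coredns, adjust the endpoints name.

```shell
# Sketch only: helper names and the test pod name are hypothetical.

# DNS pods, services, and DNS endpoints, as in the checks above.
check_dns() {
  kubectl get pods --namespace=kube-system -l k8s-app=kube-dns
  kubectl get svc --namespace=kube-system
  kubectl get endpoints coredns --namespace=kube-system
}

# Resolves the API server's Service name from a short-lived pod.
dns_lookup_test() {
  kubectl run dns-test --rm -it --restart=Never --image=busybox:1.28 -- nslookup kubernetes.default
}

# Reads `kubectl get endpoints` output on stdin; succeeds when the first data
# row is missing or its ENDPOINTS column is "<none>".
dns_endpoints_missing() {
  local eps
  eps="$(awk 'NR == 2 { print $2 }')"
  [ -z "$eps" ] || [ "$eps" = "<none>" ]
}
```

For example: kubectl get endpoints coredns --namespace=kube-system | dns_endpoints_missing && echo "no DNS endpoints"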