AI Runtime Security

Create Model Groups for Customized Protections


Create model groups to group AI models so that you can apply specific AI application protection, AI model protection, and AI data protection settings to each group.


- Log in to Strata Cloud Manager.

- Select Manage → Configuration → NGFW and Prisma Access → Security Services → AI Security → Add Profile.

- Select Add Model Group. The security profile has a default model group that defines the behavior of models not assigned to any specific group. If a supported AI model isn't part of a designated group, the default model group’s protection settings apply.

- Enter a Name.

- In the Target Models section, choose the AI models supported by the cloud provider. See the AI Models on Public Clouds Support table for reference.

- Set the Access Control for the model group to Allow or Block. When you select Allow, you can configure the protection settings for request and response traffic. When you select Block, the protection settings are disabled and all traffic to the models in this group is blocked for this profile.

- Configure the following Protection Settings for the Request and Response traffic:

Request Response AI Model Protection - Enable Prompt injection detection and

set it to Alert or Block.The feature supports the following languages: English, Spanish, Russian, German, French, Japanese, Portuguese, and Italian.

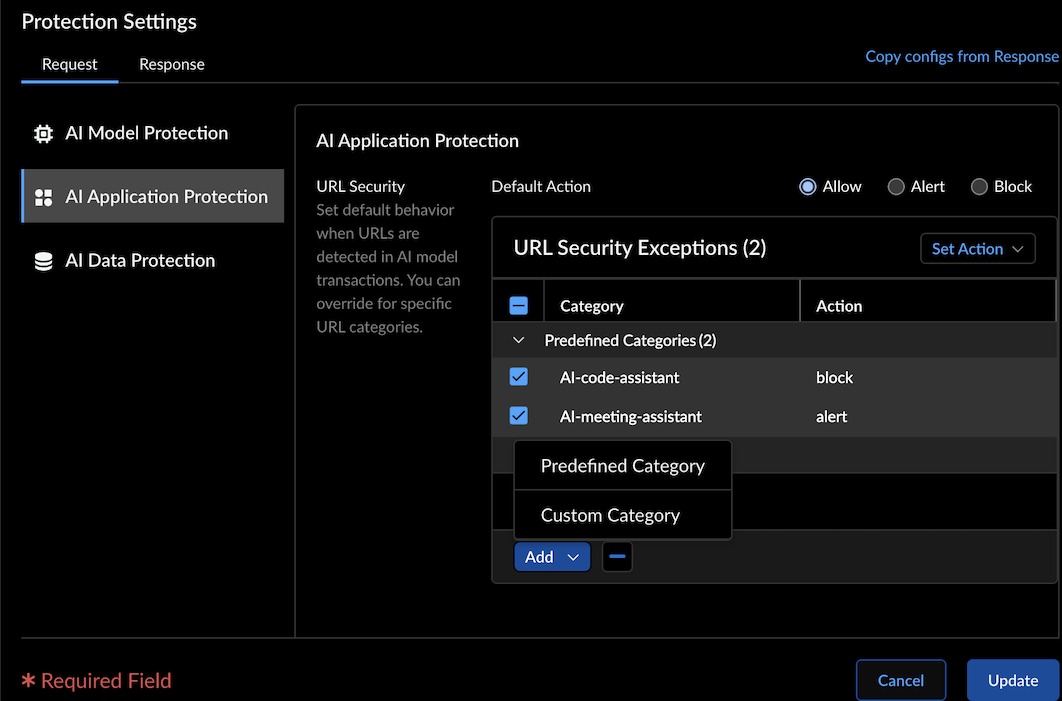

N/A AI Application Protection - Set the default URL security behavior to Allow, Alert, or Block. This action applies to URLs detected in the content of model input.

- Override the default behavior by specifying actions for individual URL categories in the URL Security Exceptions table.

- For example, configure the default action as Allow but block malicious URL categories, or set the default action to Block with exceptions for trusted categories.

- Supports predefined and custom URL categories. (Custom categories can be created under Manage → NGFW and Prisma Access → Security Services → URL Access Management).

AI Application Protection - Set the default URL security behavior to Allow, Alert, or Block for URLs detected in the content of model output.

- Override the default behavior by specifying actions for individual URL categories in the URL Security Exceptions table.

- For example, configure the default action as Allow but block malicious URL categories, or set the default action to Block with exceptions for trusted categories.

- Supports predefined and custom URL categories. (Custom categories can be created under Manage → NGFW and Prisma Access → Security Services → URL Access Management).

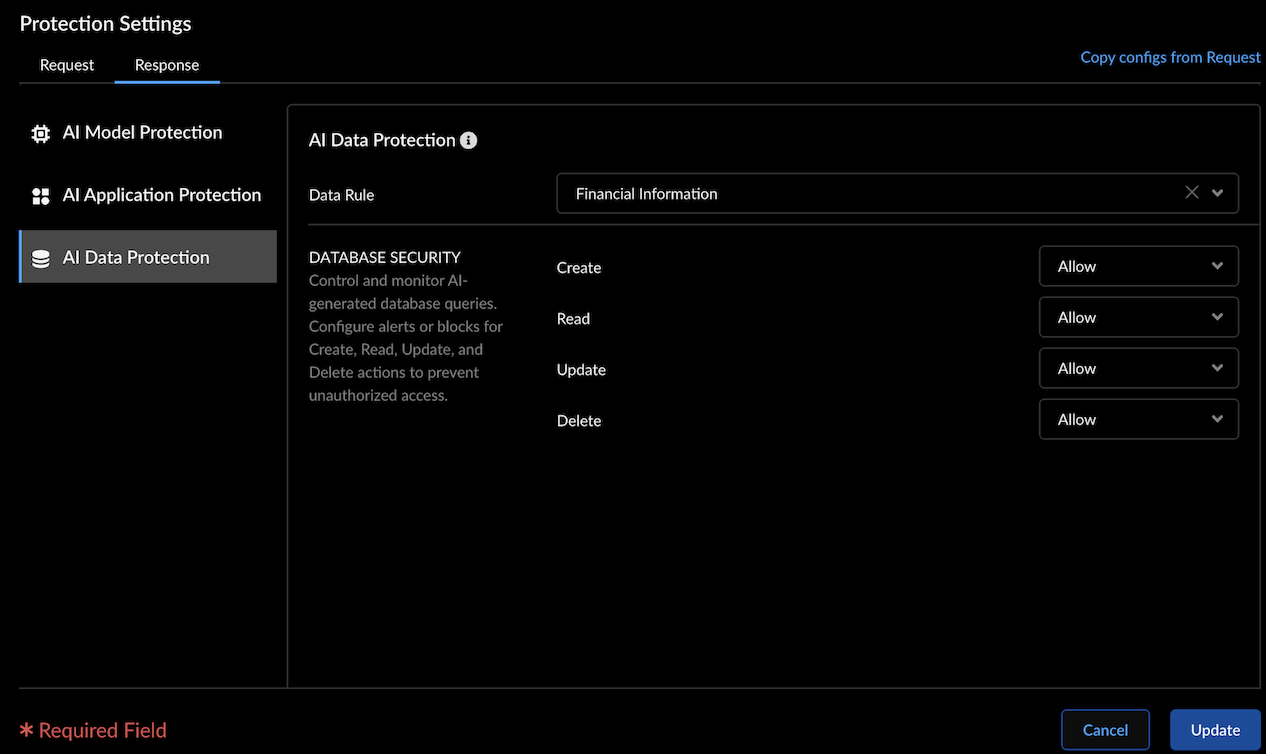

AI Data Protection - Data Rule: Select the predefined or custom DLP rule to detect sensitive data in model input.

AI Data Protection - Data Rule: Select the predefined or custom DLP rule to detect sensitive data in model input.

- Database Security: Regulate the types of database queries generated by genAI models to ensure appropriate access control.Set actions (Allow, Alert, or Block) for database operations Create, Read, Update, and Delete to prevent unauthorized actions.

The URL filtering monitors the AI traffic passing to AI data by monitoring the model request, and response payloads.You can also copy and import request and response configurations for the common protection settings including AI application protection and AI data protection. - Enable Prompt injection detection and

set it to Alert or Block.

- In the Advanced Settings, under Latency:

- Max Inline Latency: Set the maximum allowed latency for inline threat detection, from 1 to 300 seconds.

- Inline Timeout Action: Specify the action to take if inline threat detection exceeds the Max Inline Latency:

- Allow

- Alert (Report threats asynchronously)

- Block

- Select Add to create the model group, and then add this security profile to a security profile group. When a query is detected with the action Alert or Block, an AI security log is generated with the corresponding AI Incident Type and AI Incident Subtype. Refer to AI Security Report Log Viewer for more details.

- Select Manage → Operations → Push Config and push the security configurations for the security rule from Strata Cloud Manager to AI Runtime Security: Network Intercept. As users interact with the app and the app sends requests to an AI model, AI security logs are generated for each of these policy rules. Check the specific logs in the AI Security Report under AI Security Log Viewer. Refer to Monitor: Threat Logs and AI Security Logs for more details.
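To make the default model group and the Access Control behavior described in the steps above concrete, here is a minimal Python sketch. It assumes a hypothetical in-memory representation: the `ModelGroup` and `SecurityProfile` classes, their field names, and the placeholder model names `model-a`, `model-b`, and `model-c` are illustrative only and are not the Strata Cloud Manager schema or API. A model that no group targets falls back to the default model group, and a group set to Block ignores its protection settings and blocks all traffic.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified representation of an AI security profile.
# Class and field names are illustrative only; they are not the
# Strata Cloud Manager configuration schema or API.


@dataclass
class ModelGroup:
    name: str
    target_models: set[str]
    access_control: str  # "Allow" or "Block"
    # Protection name -> action, e.g. {"prompt_injection": "Block"}
    protections: dict[str, str] = field(default_factory=dict)


@dataclass
class SecurityProfile:
    default_group: ModelGroup
    groups: list[ModelGroup] = field(default_factory=list)

    def group_for(self, model: str) -> ModelGroup:
        """Return the group whose Target Models include `model`, else the default group."""
        for group in self.groups:
            if model in group.target_models:
                return group
        return self.default_group

    def effective_settings(self, model: str) -> tuple[str, dict[str, str]]:
        """Return (access control, protection settings) applied to traffic for `model`."""
        group = self.group_for(model)
        if group.access_control == "Block":
            # A blocked group has its protection settings disabled; all traffic is blocked.
            return "Block", {}
        return "Allow", dict(group.protections)


# Example with placeholder model names.
default = ModelGroup("default", set(), "Allow", {"prompt_injection": "Alert"})
strict = ModelGroup("strict", {"model-a"}, "Allow", {"prompt_injection": "Block"})
denied = ModelGroup("denied", {"model-b"}, "Block", {"prompt_injection": "Alert"})
profile = SecurityProfile(default, [strict, denied])

print(profile.effective_settings("model-a"))  # ('Allow', {'prompt_injection': 'Block'})
print(profile.effective_settings("model-b"))  # ('Block', {})
print(profile.effective_settings("model-c"))  # ('Allow', {'prompt_injection': 'Alert'})
```

Here `model-c` is not listed in any group, so the default model group’s settings apply to it.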
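The AI Application Protection rule (a default URL action that the URL Security Exceptions table can override per category) can be illustrated with the following sketch. Several parts are assumptions made for illustration only: the host-to-category lookup `CATEGORY_OF`, the example hostnames, and the rule of taking the most restrictive action when the content contains several URLs. The product categorizes URLs itself; nothing here calls a product API.

```python
import re

# Hypothetical host -> URL category lookup. In the product, URL categorization
# is performed by the service; these hostnames and categories are placeholders.
CATEGORY_OF = {
    "docs.example.com": "computer-and-internet-info",
    "bad.example.net": "malware",
}

# Assumed ordering from least to most restrictive.
RANK = {"Allow": 0, "Alert": 1, "Block": 2}
URL_HOST = re.compile(r"https?://([^/\s]+)")


def action_for_host(host: str, default: str, exceptions: dict[str, str]) -> str:
    """A URL Security Exceptions entry overrides the default action for its category."""
    category = CATEGORY_OF.get(host, "unknown")
    return exceptions.get(category, default)


def action_for_content(text: str, default: str, exceptions: dict[str, str]) -> str:
    """Most restrictive action over every URL found in model input or output (assumption)."""
    actions = [action_for_host(h, default, exceptions) for h in URL_HOST.findall(text)]
    # No URLs in the content: nothing for URL security to act on.
    return max(actions, key=RANK.__getitem__, default="Allow")


text = "See https://docs.example.com/guide and http://bad.example.net/x"

# Default Allow, but block a malicious category.
print(action_for_content(text, "Allow", {"malware": "Block"}))  # Block
# Default Block, with an exception for a trusted category.
print(action_for_content("Read https://docs.example.com/guide", "Block",
                         {"computer-and-internet-info": "Allow"}))  # Allow
```

The two calls mirror the two configurations suggested in the protection settings: a permissive default with a blocked category, and a restrictive default with a trusted exception.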
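The Max Inline Latency and Inline Timeout Action settings under Advanced Settings can be sketched as a time-bounded check. This is a hypothetical illustration: `inline_verdict`, `toy_inspect`, and `slow_inspect` are made-up names, `toy_inspect` is a toy keyword match rather than real prompt injection detection, and the product's asynchronous reporting for Alert is not modeled. The sketch only shows the decision: if detection finishes within the limit, its verdict is used; otherwise the configured timeout action applies.

```python
import concurrent.futures
import time

# Hypothetical sketch of Max Inline Latency and Inline Timeout Action.
# `inspect` stands in for inline threat detection; it is not a product API.

TIMEOUT_ACTIONS = {"Allow", "Alert", "Block"}


def inline_verdict(inspect, payload: str, max_latency: int, timeout_action: str) -> str:
    """Run `inspect(payload)`; if it exceeds `max_latency` seconds, return `timeout_action`."""
    if not 1 <= max_latency <= 300:
        raise ValueError("Max Inline Latency must be between 1 and 300 seconds")
    if timeout_action not in TIMEOUT_ACTIONS:
        raise ValueError(f"unknown timeout action: {timeout_action}")
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(inspect, payload)
    try:
        return future.result(timeout=max_latency)
    except concurrent.futures.TimeoutError:
        # Detection took too long: apply the configured Inline Timeout Action.
        # This sketch simply lets the slow check finish in the background.
        return timeout_action
    finally:
        pool.shutdown(wait=False)


def toy_inspect(payload: str) -> str:
    # Toy keyword check, NOT real prompt injection detection.
    return "Block" if "ignore previous instructions" in payload.lower() else "Allow"


def slow_inspect(payload: str) -> str:
    time.sleep(1.5)  # Simulate detection slower than a 1-second limit.
    return "Allow"


print(inline_verdict(toy_inspect, "Ignore previous instructions.", 5, "Alert"))  # Block
print(inline_verdict(slow_inspect, "hello", 1, "Alert"))  # Alert (timed out)
```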

Edit Model Groups

- In your AI security profile, select a Model group.

- Update the Target Models in a model group.

- You can associate each model with only one model group. Select AI models from the AWS, Azure, and GCP cloud providers. Refer to AI Models on Public Clouds Support for a complete list of supported pre-trained models from public cloud providers.

- Select the model name from the available models.

- Update the access control to Allow or Block.

- Configure the Request and Response protection settings.

- Select Update to save the model group changes. You can then add this security profile with customized model group protections to a security profile group.

What’s Next: Configure the Security Profile Groups and add the AI Security profile to the profile group. You can then attach this profile group to a security policy rule.
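Because each model can be associated with only one model group, you may want to check a planned set of assignments for overlaps before entering them in Strata Cloud Manager. The sketch below is hypothetical: it works on plain dictionaries mapping a group name to placeholder model names, and the helpers `models_in_multiple_groups` and `move_model` are illustrative, not part of any product API.

```python
from collections import Counter

# Hypothetical pre-check for planned group assignments: model group name ->
# set of target models. Model names are placeholders; no product API is used.


def models_in_multiple_groups(groups: dict[str, set[str]]) -> set[str]:
    """Return models assigned to more than one model group."""
    counts = Counter(model for members in groups.values() for model in members)
    return {model for model, n in counts.items() if n > 1}


def move_model(groups: dict[str, set[str]], model: str, target: str) -> None:
    """Assign `model` to `target` only, removing it from every other group."""
    for name, members in groups.items():
        if name != target:
            members.discard(model)
    groups.setdefault(target, set()).add(model)


plan = {"finance": {"model-a", "model-b"}, "support": {"model-b"}}
print(models_in_multiple_groups(plan))  # {'model-b'}
move_model(plan, "model-b", "support")
print(models_in_multiple_groups(plan))  # set()
```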