Prisma AIRS

Configure Panorama to Secure VM Workloads and Kubernetes Clusters


Panorama configurations to secure your VM workloads/vNets and Kubernetes clusters after you deploy a Panorama-managed Prisma AIRS AI Runtime Firewall.


This page covers the configurations you need to secure your VM workloads/vNets and Kubernetes clusters, and to route traffic, after you apply the Panorama-managed deployment Terraform template in your cloud environment.

On this page, you will:

- Configure the following in Panorama:

  - Interfaces

  - Zones

  - NAT Policy

  - Routers

  - Security Policies

- Secure VM workloads only for public clouds

- Secure Kubernetes clusters in public and private clouds

- Install a Kubernetes application with Helm

- (Optional) Configure labels in your cloud environment for manual deployments. The deployment Terraform you generate from Strata Cloud Manager automatically adds the required labels to organize your Prisma AIRS AI Runtime Firewall. For manual deployments, ensure that your Terraform template includes the following labels (key-value pairs):

  - Add the following labels under Tags in the Terraform template file at `<azure|aws-deployment-terraform-path>/architecture/security_project/terraform.tfvars` in your download path. The values of these keys must be unique.

    - For GCP: `paloaltonetworks_com-trust` and `paloaltonetworks_com-occupied`.

    - For Azure and AWS: `paloaltonetworks.com-trust` and `paloaltonetworks.com-occupied`.

  - Ensure the network interface name in the `security_project` Terraform ends with the suffix `-trust-vpc`.
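If you add the labels by hand, a quick check before `terraform apply` can catch a missing key or a wrong interface suffix. The Python sketch below is a hypothetical helper, not part of the generated template. It assumes you have copied the labels into a plain dictionary (it does not parse HCL), and it reads "must be unique" as "not already used by another deployment", which you pass in as `values_in_use`.

```python
from __future__ import annotations

# Required label keys per cloud, as listed in this guide.
REQUIRED_LABEL_KEYS = {
    "gcp": ("paloaltonetworks_com-trust", "paloaltonetworks_com-occupied"),
    "azure": ("paloaltonetworks.com-trust", "paloaltonetworks.com-occupied"),
    "aws": ("paloaltonetworks.com-trust", "paloaltonetworks.com-occupied"),
}
TRUST_INTERFACE_SUFFIX = "-trust-vpc"


def check_manual_labels(cloud: str, labels: dict[str, str], interface_name: str,
                        values_in_use: frozenset[str] = frozenset()) -> list[str]:
    """Return human-readable problems; an empty list means every check passed.

    values_in_use is a hypothetical input: label values already used by other
    deployments, which is how this sketch interprets "must be unique".
    """
    problems: list[str] = []
    for key in REQUIRED_LABEL_KEYS[cloud.lower()]:
        if key not in labels:
            problems.append(f"missing label key: {key}")
        elif labels[key] in values_in_use:
            problems.append(f"value of {key} is already in use: {labels[key]}")
    if not interface_name.endswith(TRUST_INTERFACE_SUFFIX):
        problems.append(f"interface name must end with {TRUST_INTERFACE_SUFFIX}")
    return problems


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(check_manual_labels(
        "gcp",
        {"paloaltonetworks_com-trust": "airs-fw-1", "paloaltonetworks_com-occupied": "airs-fw-1-occ"},
        "airs-fw-1-trust-vpc",
    ))
```

An empty list means the labels and interface name match what this guide lists.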

Prisma AIRS AI Runtime Firewall is supported only for public clusters on the GCP, Azure, and AWS cloud platforms, and on a few private clouds such as OpenShift, ESXi, and KVM.

Prerequisites

- Follow the Deploy Prisma AIRS AI Runtime Firewall for Panorama Managed Firewall steps for your cloud to save and download the Terraform template.

- Unzip the download and navigate to `<unzipped-folder>`, which contains the following entries: `architecture`, `LICENSE`, `README.md`, `security_project`, `application_project`, `helm`, and `modules`.

- Enable SSL/TLS decryption on Prisma AIRS AI Runtime Firewall to decrypt traffic between AI applications and AI models. For details, refer to Configure an SSL/TLS Service Profile in Panorama (Device > Certificate Management > SSL/TLS Service Profile).

- Add a Template and configure a Template stack in Panorama.

- Add a Device group in Panorama.

- After you create a template stack and a device group in Panorama, the first Commit All Changes pushes all the configuration changes to the Panorama-managed firewall.

- You can also schedule a Configuration Push to Managed Firewalls after deploying an AI network intercept managed by Panorama, and then select Commit -> Commit to Panorama and Commit -> Push to Devices. This flexibility lets you choose the order of operations that best suits your deployment workflow. In the Push Scope Selection, select the AI Runtime Security platform under Device Groups.

GCP

Prisma AIRS AI Runtime Firewall post-deployment configurations in Panorama and GCP to protect VM workloads and Kubernetes clusters. This guide provides step-by-step instructions to configure Panorama to secure VM workloads and Kubernetes clusters in GCP. The configurations include setting up interfaces, zones, NAT policies, logical routers, and security policies.

Secure VMs and Kubernetes Clusters in Panorama

- Configure interfaces:

  - Log in to the Panorama Web Interface.

  - Navigate to Network > Interfaces.

  - Configure a Layer 3 Ethernet interface for eth1/1 and eth1/2:

    - Interfaces: eth1/1 and eth1/2

    - Location: Specify the location, if applicable.

    - Interface Type: Layer3

    - IP Address: Dynamic (DHCP Client)

  - Navigate to Network > Interfaces > Loopback, and in IPv4s, enter the private IP address of the Internal Load Balancer (ILB).

  - Set the Security Zone to trust for eth1/2 and untrust for eth1/1.

  - Ensure the Virtual Router (VR) is set to default or to the same router as eth1/2.

- Configure zones.

- Configure a logical router:

  - Create a logical router and add the Layer 3 interfaces (eth1/1 and eth1/2).

  - Configure a static route to the ILB static IP addresses, using the trust interface gateway IP address.

  You don't have to configure a virtual router, because advanced routing is enabled on Prisma AIRS AI Runtime Firewall by default.

- Add a security policy and set the action to Allow. In Panorama, ensure the policy allows health checks from the GCP Load Balancer (LB) pool to the internal LB IP address. Check session IDs to confirm that the firewall responds on the designated interfaces.

- Select Commit → Commit and Push to push the policy configurations to Prisma AIRS AI Runtime Firewall.
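The static routes in this guide use the trust subnet gateway IP address. GCP assigns the second address of a subnet's primary range as its default gateway, so you can derive that value from the trust subnet CIDR. The following is a minimal Python sketch, assuming a standard IPv4 subnet. The function name and the placeholder CIDR are illustrative.

```python
import ipaddress


def subnet_gateway_ip(subnet_cidr: str) -> str:
    """Return the default gateway of a cloud subnet: its network address + 1.

    GCP uses the second address of a subnet's primary range as the default
    gateway (x.x.x.1 for a /24). Raises ValueError for malformed input.
    """
    # strict parsing: a CIDR with host bits set (e.g. 10.0.1.5/24) is rejected
    network = ipaddress.ip_network(subnet_cidr)
    if network.version != 4 or network.num_addresses < 8:
        raise ValueError(f"expected an IPv4 subnet of /29 or larger: {subnet_cidr}")
    return str(network.network_address + 1)


if __name__ == "__main__":
    trust_subnet = "10.100.1.0/24"  # placeholder; use your trust subnet CIDR
    print(subnet_gateway_ip(trust_subnet))
```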

Configurations to Secure VM Workloads

- Configure static routes for VPC endpoints.

  - For the VPC subnet:

    - Edit the IPv4 Static Routes and add a route whose Destination is the VPC IPv4 range CIDR.

    - Set the Interface to eth1/2 (trust interface).

    - Set the Next Hop to the trust subnet gateway IP address from Strata Cloud Manager.

    - Update the static route.

Save the logical router, and then push the policy configurations to the Prisma AIRS AI Runtime Firewall managed by Panorama (Panorama > Scheduled Config Push).

Configurations to Secure Kubernetes Clusters

- Add the pod and service IP subnets to the Prisma AIRS AI Runtime Firewall trust firewall rules:

- Get the IP addresses for the pod and service subnets:

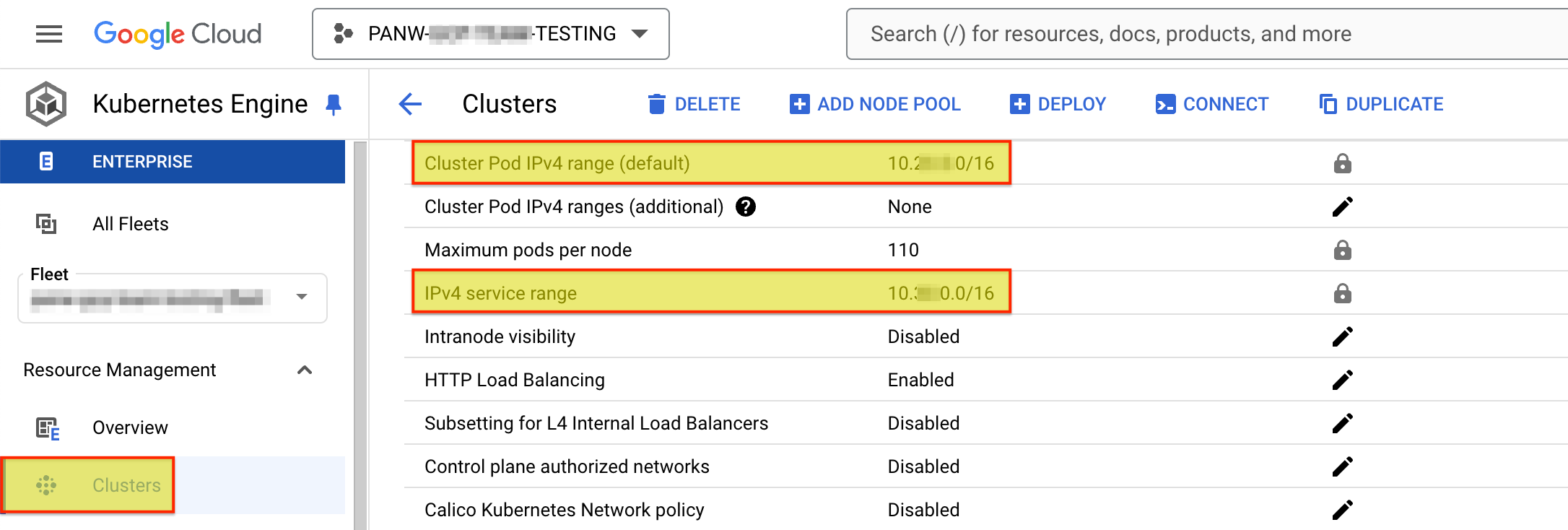

- Go to Kubernetes Engine -> Clusters. Select a cluster and copy its Cluster Pod IPv4 range and IPv4 Service range.


To save and download the Terraform template, follow the section on Deploy Prisma AIRS AI Runtime Firewall in GCP. Then edit the Terraform template to allow the following IP addresses in your VPC network firewall rules:

- Navigate to the `<unzipped-folder>/architecture/security_project` directory.

- Edit the `terraform.tfvars` file to add the copied IP addresses to your `source_ranges` list. The first two ranges are for health check packets; add your application VPC, pod, and service CIDRs after them:

  `firewall_rules = { allow-trust-ingress = { name = "allow-trust-vpc", source_ranges = ["35.xxx.0.0/16", "130.xxx.0.0/22", "192.xxx.0.0/16", "10.xxx.0.0/14", "10.xx.208.0/20"], priority = "1000", allowed_protocol = "all", allowed_ports = [] } }`

- Apply Terraform by running `terraform init`, `terraform plan`, and `terraform apply`, in that order.

Add static routes on the logical router for Kubernetes workloads:

- Pod subnet:

  - Edit the IPv4 Static Routes and add a route whose Destination is the Pod IPv4 range CIDR.

  - Set the Interface to eth1/2 (trust interface).

  - Set the Next Hop to the trust subnet gateway IP address from Panorama.

- Service subnet:

  - Edit the IPv4 Static Routes and add a route whose Destination is the IPv4 Service range CIDR.

  - Set the Interface to eth1/2 (trust interface).

  - Set the Next Hop to the trust subnet gateway IP address from Panorama.
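The pod and service ranges you copied from the cluster go into both the `source_ranges` list in `terraform.tfvars` and the two static routes. The following Python sketch uses hypothetical helper names. It checks that the ranges are valid, non-overlapping CIDRs and prints them in both forms. It assumes the trust interface is `ethernet1/2`, as in the GCP steps, and it does not call any Panorama API.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class StaticRoute:
    name: str         # route name shown in Panorama (illustrative)
    destination: str  # CIDR the route covers
    interface: str    # egress interface: the trust interface in the GCP steps
    next_hop: str     # trust subnet gateway IP address


def kubernetes_routes(pod_cidr: str, service_cidr: str, trust_gateway: str,
                      interface: str = "ethernet1/2") -> list[StaticRoute]:
    """Build the pod and service routes, refusing overlapping ranges."""
    pod = ipaddress.ip_network(pod_cidr)
    service = ipaddress.ip_network(service_cidr)
    if pod.overlaps(service):
        raise ValueError(f"pod range {pod} overlaps service range {service}")
    gateway = str(ipaddress.ip_address(trust_gateway))  # validates the address
    return [
        StaticRoute("pod_route", str(pod), interface, gateway),
        StaticRoute("service_route", str(service), interface, gateway),
    ]


def source_ranges_line(cidrs: list[str]) -> str:
    """Render a `source_ranges = [...]` line for terraform.tfvars without duplicates."""
    unique: list[str] = []
    for cidr in cidrs:
        normalized = str(ipaddress.ip_network(cidr))
        if normalized not in unique:
            unique.append(normalized)
    return "source_ranges = [" + ", ".join(f'"{c}"' for c in unique) + "]"
```

Keep the health check ranges from the template at the front of the list you pass to `source_ranges_line`, so the rendered line preserves their order.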

Add a source NAT policy for outbound traffic:

- Source Zone: Trust

- Destination Zone: Untrust (eth1/1)

- Policy Name: trust2untrust or similar

- Set the Interface to eth1/1 (the translation happens on eth1/1).

If needed, create a complementary rule for the reverse direction (for example, untrust2trust).

Push the policy configurations to the Prisma AIRS AI Runtime Firewall managed by Panorama (Panorama > Scheduled Config Push). If you have a Kubernetes cluster running, follow the next section to install a Kubernetes application with Helm.

Secure a Kubernetes Application with Helm

- Navigate to the downloaded tar file and extract its contents: `tar -xvzf <your-terraform-download.tar.gz>`

- Navigate to the Helm directory that matches your deployment configuration:

  - For VPC-level security: `cd <unzipped-folder>/architecture/helm`

  - For namespace-level security with traffic steering inspection: `cd <unzipped-folder>/architecture/helm-<complete-app-name-path>`

  - Navigate to each Helm application folder. When you configure traffic steering inspection, a separate Helm chart is generated for each protected namespace, allowing granular security policies per application.

- GKE Autopilot clusters do not support Helm deployments because of restrictions on modifying the `kube-system` namespace.
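For namespace-level security there can be several `helm-*` folders. Helm requires release names to be unique within a namespace, so reusing the same release name in `kube-system` fails for the second chart. The following Python sketch builds one `helm install` argument list per chart. The per-chart release names (`ai-runtime-security-<suffix>`) are a hypothetical naming scheme, not one defined by this guide, and Helm release names must be lowercase and at most 53 characters. The sketch assumes you pass the path to the `architecture` folder.

```python
from __future__ import annotations

from pathlib import Path

BASE_RELEASE = "ai-runtime-security"


def helm_install_commands(architecture_dir: str, namespace_level: bool) -> list[list[str]]:
    """Return one `helm install` argument list per chart under architecture_dir.

    VPC-level: the single `helm` chart. Namespace-level: every `helm-*` folder.
    """
    base = Path(architecture_dir)
    if namespace_level:
        charts = sorted(p for p in base.glob("helm-*") if p.is_dir())
    else:
        charts = [base / "helm"]
    commands = []
    for chart in charts:
        values = chart / "values.yaml"
        if not values.is_file():
            raise FileNotFoundError(f"missing {values}")
        release = BASE_RELEASE
        if namespace_level:
            # Hypothetical naming: suffix the release with the chart folder name
            # so that each release in kube-system has a unique name.
            release = f"{BASE_RELEASE}-{chart.name.removeprefix('helm-')}"
        commands.append(["helm", "install", release, str(chart),
                         "--namespace", "kube-system", "--values", str(values)])
    return commands
```

You could then run each argument list with `subprocess.run(cmd, check=True)` and review the first failure before continuing.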

Install the Helm chart using the appropriate command:

- For VPC-level security: `helm install ai-runtime-security helm --namespace kube-system --values helm/values.yaml`

- For namespace-level security with traffic steering inspection, repeat this command for each namespace-specific Helm chart generated during deployment: `helm install ai-runtime-security helm-<complete-app-name-path> --namespace kube-system --values helm-<complete-app-name-path>/values.yaml`

This creates a container network interface (CNI), but doesn't protect container traffic until you annotate the application `yaml` or `namespace`.

Verify the Helm installation:

- List all Helm releases with `helm list -A`. Ensure the output shows your installation with details such as:

  | NAME | NAMESPACE | REVISION | UPDATED | STATUS | CHART | APP VERSION |
  |---|---|---|---|---|---|---|
  | ai-runtime-security | kube-system | 1 | 2024-08-13 07:00 PDT | deployed | ai-runtime-security-0.1.0 | 11.2.2 |

- Check the pod status with `kubectl get pods -A`, and verify that pods with names similar to `pan-cni-*****` are present.

- Check the endpoint slices with `kubectl get endpointslice -n kube-system`, and confirm that the output shows an ILB IP address:

  | NAME | ADDRESSTYPE | PORTS | ENDPOINTS | AGE |
  |---|---|---|---|---|
  | my-endpointslice | IPv4 | 80/TCP | 10.2xx.0.1,10.2xx.0.2 | 12h |

- Verify that the Kubernetes resources were created:

  - Service accounts: `kubectl get serviceaccounts -n kube-system | grep pan`

  - Secrets: `kubectl get secrets -n kube-system | grep pan`

  - Services: `kubectl get svc -n kube-system | grep pan`

  You should see resources such as `pan-cni-sa` (service account), `pan-plugin-user-secret` (secret), and `pan-ngfw-svc` (service).

Annotate your application so that traffic from its pods is redirected to the Prisma AIRS AI Runtime Firewall for inspection:

- For VPC-level security, annotate the namespace: `kubectl annotate namespace <namespace-to-be-annotated> paloaltonetworks.com/firewall=pan-fw`

- For namespace-level security with traffic steering inspection, annotate the pods. Every pod must carry this annotation to move to the ‘protected’ state, across all cloud environments: `kubectl annotate pods --all paloaltonetworks.com/subnetfirewall=ns-secure/bypassfirewall`

Restart the existing application pods after you apply the Helm chart and the annotations so that all changes take effect. The firewall can then inspect the pod traffic and secure the containers.
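To script the `helm list` check, Helm can print releases as JSON (`helm list -A -o json`). The following Python sketch, with hypothetical function names, looks up the release in that output. `is_deployed` assumes `helm` is on your `PATH` and configured for the target cluster.

```python
from __future__ import annotations

import json
import subprocess


def release_status(helm_list_json: str, release: str,
                   namespace: str = "kube-system") -> str | None:
    """Find a release in `helm list -A -o json` output and return its status."""
    for entry in json.loads(helm_list_json):
        if entry.get("name") == release and entry.get("namespace") == namespace:
            return entry.get("status")
    return None


def is_deployed(release: str = "ai-runtime-security") -> bool:
    """Run helm against the current kube context and check the release status."""
    result = subprocess.run(["helm", "list", "-A", "-o", "json"],
                            capture_output=True, text=True, check=True)
    return release_status(result.stdout, release) == "deployed"
```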

Azure

Prisma AIRS AI Runtime Firewall post-deployment configurations in Panorama and Azure to protect VM workloads and Kubernetes clusters. This guide provides step-by-step instructions to configure Panorama to secure VM workloads and Kubernetes clusters in Azure. The configurations include setting up interfaces, zones, NAT policies, virtual routers, and security policies.

Secure VMs and Kubernetes Clusters in Panorama

Configure Panorama

Interfaces

- Log in to the Panorama Web Interface.

- Navigate to Network > Interfaces.

- Set the Configuration Scope to your AI Runtime Security folder.

- Select Add Interface.

- In the Ethernet tab, configure a Layer 3 interface for eth1/1 (trust) and eth1/2 (untrust):

- Enter the Interface Name. Create interfaces for both eth1/1 (trust) and eth1/2 (untrust).

- Select Layer3 Interface type.

- In Logical Routers, select `lr-private` for eth1/1 and `lr-public` for eth1/2.

- In Zone, select trust for eth1/1 and untrust for eth1/2.

- Select the DHCP Client type under IPv4 Address.

- Enable IPv4 for both the eth1/1 and eth1/2 interfaces.

- For eth1/2 (untrust) only, enable Automatically create default route pointing to default gateway provided by server.

- Select Advanced Settings > Other Info.

- Select a Management Profile with HTTPS enabled under Administrative Management Services, or create a new one, and then select Add.

- Configure Network > Interfaces > Loopback to receive health checks from each load balancer:

- Select the Logical Routers.

- Set the trust zone for the private logical router and the untrust zone for the public logical router.

- In the IPv4s section, enter the private IP address of the Internal Load Balancer (ILB). This IP address appears in the output displayed after you successfully deploy the `security_project` Terraform, as described on the Deploy AI Runtime Security Firewall in Azure page.

- Expand Advanced Settings > Management Profile and add your allow-health-checks profile.

- Select Add or Save.
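`terraform output -json` prints every output of the `security_project` as JSON, which can make the ILB private IP address easier to find than in the console output. The output names depend on the template, so this Python sketch does not assume one. It lists every string output that is a valid IPv4 address, and you pick the ILB entry from the result. The function name is hypothetical, and list or map outputs are skipped.

```python
from __future__ import annotations

import ipaddress
import json


def ipv4_outputs(terraform_output_json: str) -> dict[str, str]:
    """Return {output name: IPv4 address} for string outputs that are IPv4 addresses.

    Input is what `terraform output -json` prints: a JSON object whose values
    are objects with "value", "type", and "sensitive" keys.
    """
    found: dict[str, str] = {}
    for name, output in json.loads(terraform_output_json).items():
        value = output.get("value")
        if not isinstance(value, str):
            continue  # lists and maps are out of scope for this sketch
        try:
            found[name] = str(ipaddress.IPv4Address(value))
        except ValueError:
            continue  # a string that is not an IPv4 address
    return found
```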

Zones

- Configure zones (Network → Zones):

  - Select Add Zone.

  - Enter a Name.

  - Select the Layer3 interface type.

  - In Interfaces, add the $eth1 interface for the trust zone and the $eth2 interface for the untrust zone.

  - Select Save.

NAT

Configure the NAT policies for inbound and outbound traffic:

- Configure a NAT policy for inbound traffic:

  - Enter a Name indicating inbound traffic (for example, inbound-web).

  - Original Packet:

    - In Source zones, click add and select the untrust zone.

    - Destination: select the untrust zone and any interface.

    - In Addresses, click the add (+) icon and select the public load balancer address.

    - Choose any Service.

  - Translated Packet:

    - In Translation, select Both.

    - In Source Address Translation, select the Dynamic IP and Port translation type.

    - In Choice, select Interface Address.

    - In Interface, select eth1 (ethernet1/1).

    - In Choice, select IP address.

    - For destination translation, set Static IP as the Translation Type.

    - Select the destination Translated Address.

  - Select Save.

- Configure a NAT policy for outbound traffic:

  - Enter a Name indicating outbound traffic (for example, outbound-internet).

  - Original Packet:

    - In Source zones, click add and select the trust zone.

    - In Addresses, click the add (+) icon and select the app-vnet and the Kubernetes pod CIDR you want to secure.

    - Destination: select the untrust destination zone and any interface.

    - Choose any Service.

  - Translated Packet:

    - In Translation, select Source Address Only.

    - In Source Address Translation, select the Dynamic IP and Port translation type.

    - In Choice, select Interface Address.

    - In Interface, select eth2 (ethernet1/2).

    - In Choice, select IP address.

  - Select Save.

Routers

Configure private and public virtual routers. The Azure health probe fails with a single virtual router (VR), so create multiple VRs to ensure that the probe succeeds.

- Configure routing in Panorama (Network → Logical Routers → Router Settings):

  - Enter a Name indicating the private and public routers (for example, lr-private and lr-public).

  - In Interfaces, select eth1 (ethernet1/1) for the lr-private router and eth2 (ethernet1/2) for the lr-public router. Refer to the Interfaces section to see how to configure the $eth1 and $eth2 interfaces.

  - In Advanced Settings, select Edit to configure the IPv4 Static Routes for lr-private and lr-public.

- Select Add Static Route and add the following routes.

Application routing:

- Enter a Name (for example, app-vnet).

- In Destination, enter the CIDR address of your application.

- In Next Hop:

- For lr-private, in the IP Address field, enter the gateway IP address of the private interface (eth1/1). The gateway IP address is the first usable IP address in the subnet's range (for example, 192.168.1.1 for a /24 subnet). To find it, go to Azure Portal > Virtual Networks > [Your Virtual Network] > Subnets > [Private Subnet].

- For lr-public, in the Next Router field, select `lr-private`.


- In Interface, select eth1(ethernet1/1) subnet for `lr-private` and None for `lr-public`.

Default routing:

- Enter a Name.

- In Destination, enter 0.0.0.0/0.

- In Next Hop:

- For lr-private, in the Next Router field, select `lr-public`.

- For lr-public, in the IP Address field, enter the gateway IP address of the `lr-public` interface (eth1/2).

- In Interface, choose None for `lr-private` and eth2(ethernet1/2) for `lr-public`.

- Select Add or Update.

Azure Load Balancer’s health probe:

- Enter a Name.

- In Destination, enter the IP address of the Azure Load Balancer’s health probe (168.63.129.16/32).

- In Next Hop, select IP Address for lr-private and lr-public.

- In IP Address, enter the gateway IP address of the corresponding interfaces.

- In Interface, select eth1(ethernet1/1) for lr-private and eth2(ethernet1/2) for lr-public.

Select Add, and then select Save.

Security Policy

- Add a security policy. In Panorama, ensure the policy allows health checks from the Azure Load Balancer (LB) pool to the internal LB IP address. Check session IDs to confirm that the firewall responds on the designated interfaces.

- Select Commit → Commit and Push to push the policy configurations to the Prisma AIRS AI Runtime Firewall.
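Each route in the Routers steps that uses an IP address as the next hop follows the same rule: the next hop is the gateway (first usable address) of the subnet behind the chosen interface. The following Python sketch lists those routes from three CIDRs. It assumes `ethernet1/1` sits on the private subnet and `ethernet1/2` on the public subnet. The route names `default` and `health-probe` are hypothetical, and routes that use a Next Router hop are intentionally left out.

```python
from __future__ import annotations

import ipaddress
from typing import NamedTuple

AZURE_HEALTH_PROBE = "168.63.129.16/32"


class Route(NamedTuple):
    router: str
    name: str
    destination: str
    next_hop_ip: str
    interface: str


def gateway(subnet_cidr: str) -> str:
    """First usable address of the subnet, used as the Azure subnet gateway."""
    return str(ipaddress.ip_network(subnet_cidr).network_address + 1)


def ip_next_hop_routes(app_cidr: str, private_subnet: str, public_subnet: str) -> list[Route]:
    """Routes from the Routers steps whose next hop is an IP address."""
    private_gw, public_gw = gateway(private_subnet), gateway(public_subnet)
    app = str(ipaddress.ip_network(app_cidr))
    return [
        Route("lr-private", "app-vnet", app, private_gw, "ethernet1/1"),
        Route("lr-public", "default", "0.0.0.0/0", public_gw, "ethernet1/2"),
        Route("lr-private", "health-probe", AZURE_HEALTH_PROBE, private_gw, "ethernet1/1"),
        Route("lr-public", "health-probe", AZURE_HEALTH_PROBE, public_gw, "ethernet1/2"),
    ]
```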

Configurations to Secure VM Workloads

- Log in to the Panorama Web Interface.

- Configure routes for the vNet endpoints, as explained in the Routers section above, to ensure there is a route to your application.

- Select Commit and Push to Devices to push the policy configurations to your Prisma AIRS AI Runtime Firewall managed by Panorama.

- Create or update the NAT policy (refer to the NAT section above) to secure the VM workloads, and set the source address to the application you want to secure.
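Before you commit, you can confirm that every workload you want to secure actually matches a source address in the outbound NAT rule. The following is a small Python sketch, assuming you list the NAT source addresses as CIDRs (for example, the app-vnet and the pod CIDR). The function name and sample addresses are hypothetical.

```python
from __future__ import annotations

import ipaddress


def uncovered_workloads(workload_ips: list[str], nat_source_cidrs: list[str]) -> list[str]:
    """Return the workload IPs that no NAT source address (CIDR) matches."""
    networks = [ipaddress.ip_network(cidr) for cidr in nat_source_cidrs]
    return [ip for ip in workload_ips
            if not any(ipaddress.ip_address(ip) in net for net in networks)]


if __name__ == "__main__":
    nat_sources = ["10.1.0.0/16", "10.244.0.0/16"]  # placeholder app-vnet and pod CIDRs
    print(uncovered_workloads(["10.1.4.20", "172.16.0.10"], nat_sources))
```

Any address that this check reports would not match the NAT rule as configured.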

Configurations to Secure Kubernetes Clusters

- Log in to the Panorama Web Interface.

- Configure static routes (refer to the Routers section above) on the logical router for Kubernetes workloads. Configure the following static routes for the pod and service subnets of your Kubernetes workloads:

- Pod subnet and service subnet for lr-private:

| Route Type | Name | Destination | Next Hop | Next Hop Value | Interface |
|---|---|---|---|---|---|
| Pod subnet | pod_route | Pod IPv4 range CIDR | IP Address | Subnet gateway IP address | eth1 (ethernet1/1) |
| Service subnet | service_route | Service IPv4 range CIDR (for example, 172.16.0.0/24) | IP Address | Subnet gateway IP address | eth1 (ethernet1/1) |

- Pod subnet and service subnet for lr-public:

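| Route Type | Name | Destination | Next Hop | Next Hop Value | Interface |
|---|---|---|---|---|---|
| Pod subnet | pod_route | Pod IPv4 range CIDR | Next Router | lr-private | None |
| Service subnet | service_route | Service IPv4 range CIDR (for example, 172.16.0.0/24) | Next Router | lr-private | None |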

Refer to the NAT policy in the NAT section above to secure the Kubernetes clusters, and set the source address to the Kubernetes pod CIDR you want to secure. Then select Commit and Push to Devices to push the policy configurations to your Prisma AIRS AI Runtime Firewall managed by Panorama.

Secure a Kubernetes Application with Helm

This section covers how to install and configure the Helm chart to secure your Kubernetes applications based on the protection level you selected during deployment. The Helm chart installation process and directory structure depend on whether you selected VPC-level protection or namespace-level protection with traffic steering inspection. VPC-level protection secures all applications within the VPC, while namespace-level protection with traffic steering inspection provides granular control over specific application traffic flows and CIDR-based inspection rules. Your deployment configuration determines the Helm chart structure and commands required for your environment.

- Navigate to the downloaded tar file and extract its contents: `tar -xvzf <your-terraform-download.tar.gz>`

- Navigate to the Helm directory that matches your deployment configuration:

  - For VPC-level security: `cd <unzipped-folder>/architecture/helm`

  - For namespace-level security with traffic steering inspection: `cd <unzipped-folder>/architecture/helm-<complete-app-name-path>`

  Navigate to each Helm application folder. When you configure traffic steering inspection, a separate Helm chart is generated for each protected namespace, allowing granular security policies per application.

- Install the Helm chart using the appropriate command:

  - For VPC-level security: `helm install ai-runtime-security helm --namespace kube-system --values helm/values.yaml`

  - For namespace-level security with traffic steering inspection, repeat this command for each namespace-specific Helm chart generated during deployment: `helm install ai-runtime-security helm-<complete-app-name-path> --namespace kube-system --values helm-<complete-app-name-path>/values.yaml`

  This creates a container network interface (CNI), but doesn't protect container traffic until you annotate the application `yaml` or `namespace`.

Enable "Bring your own Azure virtual network" so that Kubernetes-related vNets are discovered:

- In the Azure Portal, navigate to Kubernetes services → [Your Cluster] → Settings → Networking.

- Under Network configuration, select Azure CNI as the Network plugin, then enable Bring your own Azure virtual network.
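You can also confirm the cluster's network settings from the Azure CLI instead of the portal. The following Python sketch reads the JSON printed by `az aks show --resource-group <rg> --name <cluster> -o json`. The field names it relies on (`networkProfile.networkPlugin`, where `azure` means Azure CNI, and `agentPoolProfiles[].vnetSubnetId`) reflect the Azure CLI output shape as an assumption, so verify them against your CLI version. The function name is hypothetical.

```python
from __future__ import annotations

import json


def aks_network_check(aks_show_json: str) -> dict[str, bool]:
    """Summarize Azure CNI and bring-your-own-vNet status from `az aks show` JSON."""
    cluster = json.loads(aks_show_json)
    plugin = (cluster.get("networkProfile") or {}).get("networkPlugin")
    pools = cluster.get("agentPoolProfiles") or []
    return {
        "azure_cni": plugin == "azure",
        # Assumption: agent pools placed in your own vNet carry a vnetSubnetId.
        "bring_your_own_vnet": bool(pools) and all(p.get("vnetSubnetId") for p in pools),
    }
```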

Verify the Helm installation:

- List all Helm releases with `helm list -A`. Ensure the output shows your installation with details such as:

  | NAME | NAMESPACE | REVISION | UPDATED | STATUS | CHART | APP VERSION |
  |---|---|---|---|---|---|---|
  | ai-runtime-security | kube-system | 1 | 2024-08-13 07:00 PDT | deployed | ai-runtime-security-0.1.0 | 11.2.2 |

- Check the pod status with `kubectl get pods -A`, and verify that the output lists pods with names similar to `pan-cni-*****`.

- Check the endpoint slices with `kubectl get endpointslice -n kube-system`, and confirm that the output shows an ILB IP address:

  | NAME | ADDRESSTYPE | PORTS | ENDPOINTS | AGE |
  |---|---|---|---|---|
  | my-endpointslice | IPv4 | 80/TCP | 10.2xx.0.1,10.2xx.0.2 | 12h |

- Verify that the Kubernetes resources were created:

  - Service accounts: `kubectl get serviceaccounts -n kube-system | grep pan`

  - Secrets: `kubectl get secrets -n kube-system | grep pan`

  - Services: `kubectl get svc -n kube-system | grep pan`

  You should see resources such as `pan-cni-sa` (service account), `pan-plugin-user-secret` (secret), and `pan-ngfw-svc` (service).

Annotate your application so that traffic from its pods is redirected to the Prisma AIRS AI Runtime Firewall for inspection:

- For VPC-level security, annotate the namespace: `kubectl annotate namespace <namespace-to-be-annotated> paloaltonetworks.com/firewall=pan-fw`

- For namespace-level security with traffic steering inspection, annotate the pods. Every pod must carry this annotation to move to the ‘protected’ state, across all cloud environments: `kubectl annotate pods --all paloaltonetworks.com/subnetfirewall=ns-secure/bypassfirewall`

Restart the existing application pods after you apply the Helm chart and the annotations so that all changes take effect. The firewall can then inspect the pod traffic and secure the containers.
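You can also run the pod, endpoint slice, and annotation checks against JSON output (`-o json`) instead of reading tables. The following Python sketch uses hypothetical helper names. The `pan-cni-` pod prefix and the `paloaltonetworks.com/firewall=pan-fw` annotation come from the steps above. The ILB address you compare against comes from your own deployment.

```python
from __future__ import annotations

import json


def pan_cni_pods(pods_json: str) -> dict[str, str]:
    """{pod name: phase} for pan-cni-* pods in `kubectl get pods -A -o json` output."""
    pods: dict[str, str] = {}
    for item in json.loads(pods_json).get("items", []):
        name = item["metadata"]["name"]
        if name.startswith("pan-cni-"):
            pods[name] = item.get("status", {}).get("phase", "Unknown")
    return pods


def endpoint_addresses(slices_json: str) -> set[str]:
    """All addresses in `kubectl get endpointslice -n kube-system -o json` output."""
    addresses: set[str] = set()
    for item in json.loads(slices_json).get("items", []):
        for endpoint in item.get("endpoints") or []:
            addresses.update(endpoint.get("addresses", []))
    return addresses


def namespace_annotated(namespace_json: str) -> bool:
    """True if `kubectl get namespace <ns> -o json` shows the VPC-level annotation."""
    annotations = json.loads(namespace_json)["metadata"].get("annotations") or {}
    return annotations.get("paloaltonetworks.com/firewall") == "pan-fw"
```

For example, `ilb_ip in endpoint_addresses(output)` confirms that the endpoint slice points at your ILB.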

AWS

Prisma AIRS AI Runtime Firewall post-deployment configurations in Panorama and AWS to protect VM workloads and Kubernetes clusters.

Secure VMs and Kubernetes Clusters in Panorama

Configure Panorama

Interfaces

- Log in to the Panorama Web Interface.

- Navigate to Network > Interfaces.

- Set the Configuration Scope to your AI Runtime Security folder.

- Select Add Interface.

- In the Ethernet tab, configure a Layer 3 interface for eth1/1 (trust):

- In Interface Name, enter eth1/1.

- Select the Layer3 interface type.

- In Logical Routers, select `vr-private` for eth1/1.

- In Zone, select trust for eth1/1.

- Select the DHCP Client type under IPv4 Address.

- Enable IPv4 for eth1/1.

- Select Advanced Settings > Other Info.

- Select a Management Profile with HTTPS enabled under Administrative Management Services, or create a new one, and then select Add.

Zone

- Configure zones (Network → Zones):

  - Select Add Zone.

  - Enter a Name.

  - Select the Layer3 interface type.

  - In Interfaces, add the $eth1 interface for the trust zone.
