Strata Logging Service

Using Query Builder


Use queries to identify the exact log records you want Strata Logging Service

to retrieve.

| Where Can I Use This? | What Do I Need? |

|---|---|

| One of these:

|

Strata Logging Service helps you build queries by offering suggestions as to what

you can specify next in your query. Queries are Boolean expressions that identify the

log records Strata Logging Service will retrieve for the specified log record

type. You use them as an addition to the log record type and time range information that

you are always required to provide. Use queries to narrow the retrieval set to the exact

records you want.

You can select field names, Boolean operators, and equality and pattern matching

operators as you build your query. You can also use type-down to specify field names and

operators. Finally, you can left-click on a table cell to add that column and value,

with an equality operator, to the query.

Query Syntax

Specify queries using match statements. These statements can be either an equality or

pattern matching expression. You can optionally combine these statements using the

Boolean operators: AND or

OR.

<match_statement> [<boolean> <match_statement>] ...

For example:

source_user LIKE 'paloalto%' AND action.value = 'deny'

A query can be at most 4096 characters long. The field names that you use in your
filters are not always identical to the names shown in the column headers. Also, the
data displayed in the log table might not always be the exact value you want to
use in your queries. For example, the BYTES field shows
values rounded to the nearest byte or kilobyte. To obtain the exact bytes_total
value, use the add-to-search feature provided by the query builder.
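As an illustration of the syntax and the length limit, here is a minimal Python sketch that assembles a query string from match statements. The helper names `quote`, `match`, and `combine` are hypothetical and are not part of Strata Logging Service. The sketch also assumes that values containing quote characters should be rejected, because escaping rules are not described here.

```python
MAX_QUERY_LENGTH = 4096  # documented upper bound on query length


def quote(value: str) -> str:
    """Wrap a string value in single quotes, as the query syntax requires."""
    if "'" in value or '"' in value:
        # Assumption: escaping rules are not documented, so refuse instead of guessing.
        raise ValueError(f"quote characters are not handled: {value!r}")
    return f"'{value}'"


def match(field: str, operator: str, value) -> str:
    """Build one match statement, for example: action.value = 'deny'."""
    rendered = quote(value) if isinstance(value, str) else str(value)
    return f"{field} {operator} {rendered}"


def combine(boolean: str, *statements: str) -> str:
    """Join match statements with AND or OR and enforce the length limit."""
    if boolean not in ("AND", "OR"):
        raise ValueError("boolean must be AND or OR")
    query = f" {boolean} ".join(statements)
    if len(query) > MAX_QUERY_LENGTH:
        raise ValueError(
            f"query is {len(query)} characters; the limit is {MAX_QUERY_LENGTH}"
        )
    return query


query = combine(
    "AND",
    match("source_user", "LIKE", "paloalto%"),
    match("action.value", "=", "deny"),
)
print(query)  # source_user LIKE 'paloalto%' AND action.value = 'deny'
```

Numeric values are left unquoted, so `match("bytes_total", "=", 270)` yields `bytes_total = 270`.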

The filter evaluates queries according to the standard order of precedence for logical

operators. However, you can change the order of operations by grouping

terms in parentheses.
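The effect of grouping can be illustrated with ordinary Python Booleans, whose `and` binds more tightly than `or`. This sketch assumes that "standard order of precedence" means AND is evaluated before OR, as in SQL. The record and its values are made up for illustration.

```python
# A sample record; the field names and values are made up for illustration.
record = {"bytes_total": 270, "action": "deny", "source_user": "alice"}

is_270 = record["bytes_total"] == 270      # True
is_allow = record["action"] == "allow"     # False
is_bob = record["source_user"] == "bob"    # False

# Without parentheses, AND is evaluated before OR:
#   bytes_total = 270 OR action.value = 'allow' AND source_user = 'bob'
ungrouped = is_270 or is_allow and is_bob  # same as is_270 or (is_allow and is_bob)

# Parentheses force the OR to be evaluated first:
#   (bytes_total = 270 OR action.value = 'allow') AND source_user = 'bob'
grouped = (is_270 or is_allow) and is_bob

print(ungrouped)  # True
print(grouped)    # False
```

The same three terms therefore select different records depending on where the parentheses are placed.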

It is an error to create a query with identical start and end times.

| Expression Type | Definition |
|---|---|
| Numeric comparison | Equality operators are described below. |
| String comparison | |
| Pattern matching | Pattern matching is supported only for fields that contain strings or IP addresses. For strings and IP addresses, % may be provided as a wildcard character at any location in the value. A pattern matching expression that does not provide a wildcard returns the identical log lines as an equality comparison. |

You must use single quotes with your string values: '<value>'. Double

quotes are illegal: "<value>".
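To make the wildcard behavior concrete, the following Python sketch translates a pattern into a regular expression. It assumes SQL-style semantics: % matches any run of characters, including none, and _ (listed as a wildcard in the operator table) matches exactly one character. It also assumes case-sensitive matching. The function names are hypothetical, and the service's actual matching rules may differ.

```python
import re


def like_to_regex(pattern: str) -> re.Pattern:
    """Translate a LIKE pattern into a regular expression."""
    parts = []
    for ch in pattern:
        if ch == "%":
            parts.append(".*")  # % stands for any run of characters, including none
        elif ch == "_":
            parts.append(".")   # _ stands for exactly one character
        else:
            parts.append(re.escape(ch))  # everything else matches literally
    return re.compile("".join(parts), re.DOTALL)


def like(value: str, pattern: str) -> bool:
    """Return True when the whole value matches the pattern."""
    return like_to_regex(pattern).fullmatch(value) is not None


print(like("paloalto-admin", "paloalto%"))  # True
print(like("username", "usern_me"))         # True
print(like("usernme", "usern_me"))          # False
print(like("allow", "allow"))               # True: no wildcard behaves like equality
```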

Supported Operators

When building a query, you can choose from a set of operators. The following table

describes when to use each operator and lists its compatible values.

| Operator | When to Use It | Possible Values |
|---|---|---|
| = | Find logs that contain an exact value. | bytes_total = 270<br>action.value = 'allow'<br>Full: src_ip.value = '192.1.1.10/32'<br>Subnet range: src_ip.value = '192.1.1.10/24'<br>time_generated = '2022-03-29 12:57:14' |
| != or <> | Find logs that do not contain an exact value. | bytes_total != 270<br>bytes_total <> 270<br>action.value != 'allow'<br>action.value <> 'allow'<br>Full: src_ip.value != '192.1.1.10/32'<br>Subnet range: src_ip.value <> '192.1.1.10/24'<br>time_generated != '2022-03-29 12:57:14'<br>time_generated <> '2022-03-29 12:57:14' |
| < | Find logs with data less than a value. | bytes_total < 270<br>time_generated < '2022-03-29 12:57:14' |
| <= | Find logs with data less than or equal to a value. | bytes_total <= 270<br>time_generated <= '2022-03-29 12:57:14' |
| > | Find logs with data greater than a value. | bytes_total > 270<br>time_generated > '2022-03-29 12:57:14' |
| >= | Find logs with data greater than or equal to a value. | bytes_total >= 270<br>time_generated >= '2022-03-29 12:57:14' |
| LIKE | Find logs with data that matches a string pattern. LIKE is not supported for fields such as action, tunnel, or proto that have limited possible values. | source_user_info.name LIKE 'usern_me'<br>You can use either _ or % as wildcard characters. |
| AND | Find logs that satisfy multiple search terms at once. | bytes_total = 270 AND source_user_info.name LIKE 'usern_me' AND src_ip.value != '192.1.1.10/24' |
| OR | Find logs that satisfy at least one of multiple search terms. | bytes_total = 270 OR source_user_info.name LIKE 'usern_me' OR src_ip.value != '192.1.1.10/24' |
| () | Specify the priority in which search terms are evaluated. | bytes_total = 270 AND (source_user_info.name LIKE 'usern_me' OR src_ip.value != '192.1.1.10/24') |
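The difference between the /32 and /24 forms of an IP value can be checked locally with Python's standard `ipaddress` module. This is only a sketch of ordinary CIDR arithmetic. It assumes that the service treats a value such as 192.1.1.10/24 as the whole network containing that address, which the table's "Subnet range" label suggests. The helper name `in_subnet` is hypothetical.

```python
import ipaddress


def in_subnet(address: str, cidr: str) -> bool:
    """Return True when address falls inside the network that cidr describes."""
    # strict=False accepts host bits in the CIDR value, e.g. 192.1.1.10/24.
    network = ipaddress.ip_network(cidr, strict=False)
    return ipaddress.ip_address(address) in network


print(ipaddress.ip_network("192.1.1.10/24", strict=False))  # 192.1.1.0/24
print(in_subnet("192.1.1.200", "192.1.1.10/24"))  # True
print(in_subnet("192.1.2.1", "192.1.1.10/24"))    # False
print(in_subnet("192.1.1.10", "192.1.1.10/32"))   # True
print(in_subnet("192.1.1.11", "192.1.1.10/32"))   # False
```

Under that assumption, a /32 value behaves like an exact address match, while a /24 value covers 192.1.1.0 through 192.1.1.255.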

Supported Characters

These are the characters that you can use when building a query using Query Builder.

| Alphanumeric characters | All letters, both uppercase and lowercase, and numbers: A-Z, a-z, 0-9 |
| Special characters | underscore (_), backslash (\), forward slash (/), ampersand (&), at symbol (@), percent symbol (%), hash symbol (#), dollar symbol ($), tilde (~), asterisk (*), pipe (\|), less than and greater than signs (< >), plus sign (+), comma (,), period (.), question mark (?), exclamation mark (!), colon (:), dash or hyphen (-), equal sign (=) |
| White space characters | space |
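A quick local check against this list can catch stray characters before a value is pasted into a query. The Python sketch below is hypothetical and encodes only the characters listed in the table. Single quotes and parentheses appear in query syntax but are not in the list, so the check is meant for field names and values rather than complete queries.

```python
import string

# Characters listed as supported in the table above.
SUPPORTED = set(
    string.ascii_letters + string.digits + "_\\/&@%#$~*|<>+,.?!:-= "
)


def unsupported_characters(text: str) -> list[str]:
    """Return characters in text that the table does not list, in order of first appearance."""
    seen = []
    for ch in text:
        if ch not in SUPPORTED and ch not in seen:
            seen.append(ch)
    return seen


print(unsupported_characters("bytes_total = 270"))  # []
print(unsupported_characters("user;name^x"))        # [';', '^']
```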

About Field Names

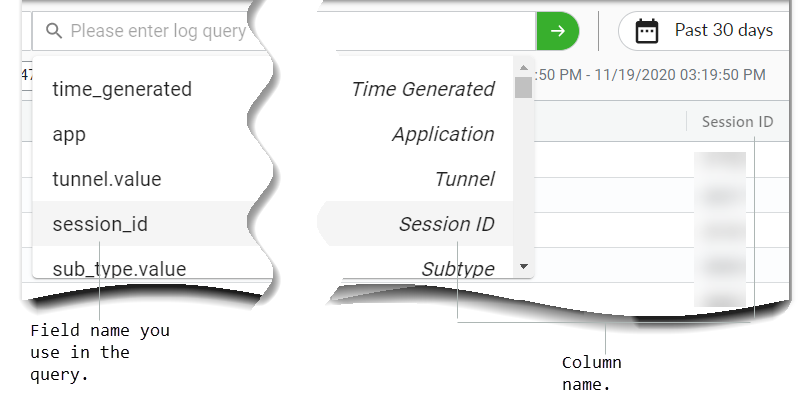

The field names that you use in your queries are sometimes, but not always, identical to the

names shown in the log record column headers. The field name that you must use is

the log record field name as it is stored in Strata Logging Service.

There are two ways to obtain this field name:

- Click into the user interface query field to see a drop-down list of available field names for the selected log type. On the right-hand side of this drop-down list is the corresponding column name.


- The Schema Reference guide provides a mapping of the log column name, as shown in the user interface, to the corresponding log record field name.

Build Queries

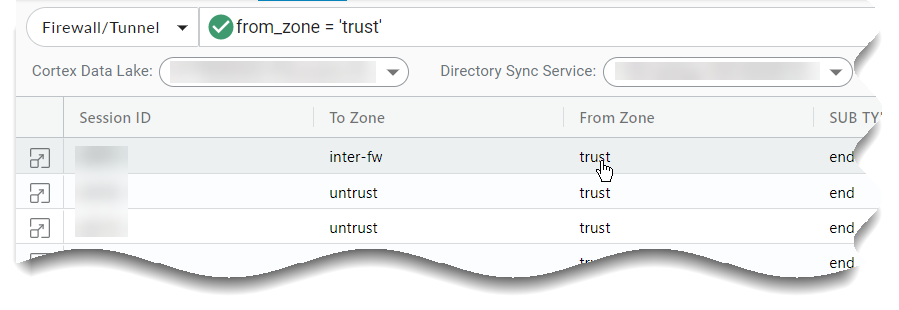

- Do one of the following to build a query:

- Click into the query field to see the list of available field names.


- You can left-click on a table cell to add that column and value,

with an equality operator, to the query.


- Click to select the field that you want, or type down until you find the right field.

- The user interface immediately displays the operators that are appropriate

for the field's data type.


- Continue this point-click-and-type activity until your query is complete.

Click or press Enter to retrieve log records.

You have the option to cancel a query you no longer want to run with the Cancel option.